This brilliant BBC Philharmonic branding is music to my…eyes?

Original Source: https://www.creativebloq.com/news/philharmonic-bbc

It’s a symphony of colour.

Original Source: https://abduzeedo.com/kranus-health-branding-and-visual-identity

Kranus Health branding and visual identity

abduzeedo, August 4, 2022

The Embacy team shared a branding, visual identity, and web design project for Kranus Health, a medical startup that is rethinking men’s healthcare holistically and improving treatment options within the healthcare system for both patients and doctors.

Credits

Producer: Asya Iljushechkina

Art Direction: Anastasia Galeeva, Elisey Soloviev, Nikita Sobolev

Project Manager: Denis Zatsepilin

Brand Designers: Nikita Gudkov, Anna Koval

Illustrator: Lera Belobragina

Production: Sasha Korshenyuk, Masha Roshka

For more information, make sure to check out Embacy’s website or Behance page.

Original Source: https://www.hongkiat.com/blog/pipedrive-hubspot-crm/

Customer Relationship Management (CRM) software is essential for most businesses, big or small: it helps you manage your leads and sales and keep track of your clients and their interactions with your company. However, finding the one that best fits your business’s particular needs takes in-depth research.

To help you decide which CRM software to go with, I’ll compare two of the best CRM tools on the market today, Pipedrive and HubSpot, so you can make a well-informed decision and invest in the tool that suits you best.

Let’s have a look.

What should you look for in a CRM software solution?

When you’re trying to decide which CRM software solution is best for your business, there are a few key features you should look for:

Ease of use: The best CRM solutions are user-friendly and easy to navigate. You don’t want to spend hours trying to learn how to use your CRM software.

Comprehensive features: The CRM software you choose should have all the features you need to manage your customer relationships effectively.

Affordable pricing: And, of course, you don’t want to overspend on your CRM solution. Look for one that offers great value for the price.

Now that you know what to look for in a CRM software solution, let’s take a closer look at two of the most popular CRM solutions on the market today: Pipedrive and HubSpot.

Pipedrive, in a nutshell

Pipedrive is a cloud-based CRM software solution offering a wide range of features to help businesses manage customer relationships effectively. The software is designed to be user-friendly with a visual sales pipeline that helps users track their deals and progress through the sales process.

It was founded in 2010 by three Estonian entrepreneurs who were looking for a CRM solution that was easy to use and helped them close more deals.

The company is headquartered in New York City but has offices in Estonia, Portugal, and the United Kingdom.

HubSpot, in a nutshell

HubSpot, on the other hand, was founded in 2006 by two entrepreneurs who were looking for a better way to market their software company.

The company is headquartered in Cambridge, Massachusetts, and has offices in Dublin, Sydney, London, Berlin, Tokyo, and Singapore.

HubSpot is a comprehensive CRM software solution with a range of features to help businesses manage customer relationships effectively. The software is designed to help users track their sales and marketing performance and manage customer relations through a centralized platform.

HubSpot also offers a wide range of marketing and sales tools to help businesses close more deals and grow their customer base.

Key differences between Pipedrive and HubSpot

Pipedrive and HubSpot are two of the most popular CRM platforms on the market, each with its own advantages and disadvantages.

Here’s all you need to know about the major differences between Pipedrive and HubSpot so you can choose the ideal one for your business.

1. Ease of use

Both HubSpot and Pipedrive are pretty user-friendly. They both have an intuitive user interface that allows you to easily track your contacts, projects, tasks, leads, etc. They also have a wide range of email templates you can use or modify for your own purposes.

It’s easy to filter through all of your contacts in either tool, so you can keep up with things like when they made their last purchase or whether they are still using a specific product on their website.

Apart from figuring out where things are located on each platform, the learning curve is gentle, which is a good thing: it means no formal training is necessary once you get started.

Verdict:

HubSpot and Pipedrive are both easy to use, and you don’t need to learn much to get started with either program.

For ease of use:

HubSpot – 5/5

Pipedrive – 5/5

2. Automation

HubSpot is known for its automation capabilities. You can automate tasks like emailing customers when they abandon their shopping carts, sending out follow-up emails to potential customers, or creating workflows to keep track of your sales pipeline. HubSpot’s automation features are one of the main reasons why people choose it over other CRM platforms.

Pipedrive, on the other hand, does not have as many automation features built in. However, it does have an extensive API that allows you to connect to third-party apps and services to automate tasks like sending follow-up emails or creating workflows.

Verdict:

HubSpot offers more automation options than Pipedrive. However, Pipedrive has an extensive API that lets you connect to third-party apps and services to automate tasks.

In terms of automation:

HubSpot – 4/5

Pipedrive – 3/5

3. Reporting and analytics

HubSpot CRM has more than 150 free report templates. These include sales-related indicators like deals closed and monthly revenue, as well as marketing-related information like website traffic and social media engagement.

By subscribing to Sales Hub Enterprise or Sales Hub Professional, a user will have access to advanced analytics and custom reports, as well as the ability to create up to 10 CRM dashboards.

Pipedrive’s CRM also has a good selection of reports, with over 30 available templates. However, upgrading to a paid plan is necessary to unlock features like custom fields, which can be used to segment data and create more specific reports.

Additionally, the ability to export data into Excel or Google Sheets is a premium feature, as is access to the Pipeline Heatmap report.

Verdict:

HubSpot CRM provides a large number of free report templates (more than 150), but advanced analytics and custom reports require a paid Sales Hub Professional or Enterprise plan. Pipedrive CRM offers fewer report templates (over 30), and features such as custom fields, data export, and the Pipeline Heatmap report require a paid plan.

In terms of reporting and analytics:

HubSpot – 4/5

Pipedrive – 4/5

4. Pricing

For HubSpot CRM Sales Hub, there is a free-for-life subscription with limited functionality. This can be upgraded at any time to one of three paid plans – Starter, Professional, or Enterprise.

Pipedrive’s CRM doesn’t offer a free-for-life subscription, but there is a 14-day trial period available for each of its subscription plans. Compared to HubSpot, Pipedrive’s prices are more economical – at around $20 less per user per month for the Enterprise plan.

All Pipedrive subscriptions come with an extensive feature set as standard; the main differences between plans are the number of users and contacts that can be added to the account, and the availability of phone support.

Verdict:

HubSpot’s most expensive Sales Hub plan is almost double the price of Pipedrive’s Enterprise offering, making it one of the more costly CRM software solutions on the market.

The main differences between the paid plans of the two tools are the number of users, contacts, and automation workflows that can be added to the account.

When it comes to pricing and affordability:

HubSpot – 3/5

Pipedrive – 4/5

5. Core features

CRM software is used to manage customer relationships, sales, and marketing. As such, the core features of a CRM platform will usually include things like contact management, deal tracking, lead capture, and pipeline management. Both HubSpot and Pipedrive offer all of these features and more.

One area where HubSpot shines is marketing. In addition to the core CRM functionality, HubSpot’s Sales Hub also includes features like email tracking, lead scoring, and website visitor tracking. This makes it a great option for businesses that want to get the most out of their CRM investment by using it as an all-in-one platform for sales and marketing.

Pipedrive, on the other hand, is more focused on sales. While it does have some marketing features, such as email integration and lead capture forms, they are not as comprehensive as what HubSpot has to offer. If you’re primarily looking for a CRM to manage your sales pipeline and deals, Pipedrive would be a good option for you.

Verdict:

HubSpot offers a wide range of features for both sales and marketing, while Pipedrive focuses on sales.

On the core features:

HubSpot – 3.5/5

Pipedrive – 4/5

6. App integrations

HubSpot CRM is connected to the HubSpot App Marketplace, where there are currently over 500 app integrations. Categories include sales, marketing, productivity, finance, and customer service. And better yet, about half of these apps can be obtained for free.

Some examples of these free app integrations include DepositFix, Outreach, and Xero. The HubSpot App Marketplace can be accessed on all four tiers of HubSpot CRM, including the free version.

Pipedrive also has a good selection of app integrations, with over 350 apps available in their App Store.

Like HubSpot, there is a healthy mix of both free and paid app integrations available in the tool. Categories include sales, marketing, productivity, customer service, and accounting.

Some popular app integrations that are available for Pipedrive include Google Calendar, Gmail, and Stripe.

Verdict:

Both HubSpot and Pipedrive offer a good selection of app integrations to extend the functionality of their CRM platforms.

However, HubSpot’s App Marketplace has a slight edge in terms of the number of apps available as well as the variety of categories covered.

Regarding app integrations:

HubSpot – 4/5

Pipedrive – 3.5/5

7. Customer Service

HubSpot’s customer service can be accessed 24/7 via phone, email, or live chat. The company also has an extensive Knowledge Base that includes how-to guides, troubleshooting articles, and product documentation. In addition, HubSpot offers a number of training resources, such as online courses, certification programs, and live events.

Pipedrive’s customer service can also be reached 24/7 via phone, email, or live chat. The company has a searchable Knowledge Base that contains how-to guides and troubleshooting articles. Pipedrive also offers a number of helpful training resources, such as webinars, guides, and live events.

Verdict:

Both HubSpot and Pipedrive offer excellent customer service, with multiple ways to get in touch with a support representative and plenty of helpful training resources.

On customer service:

HubSpot – 4/5

Pipedrive – 4/5

Conclusion

HubSpot CRM and Pipedrive are both great options for businesses looking for a sales or marketing-focused CRM solution.

HubSpot has the edge when it comes to marketing features, while Pipedrive is a better choice for businesses that want a more sales-oriented platform. Both platforms offer a good selection of app integrations and excellent customer service.

Which CRM you ultimately choose will depend on your specific business needs. If you’re not sure which one is right for you, we recommend trying both: HubSpot’s free plan and Pipedrive’s 14-day trial let you see which one works better for your team.

The post Pipedrive vs HubSpot: Which CRM is Better? appeared first on Hongkiat.

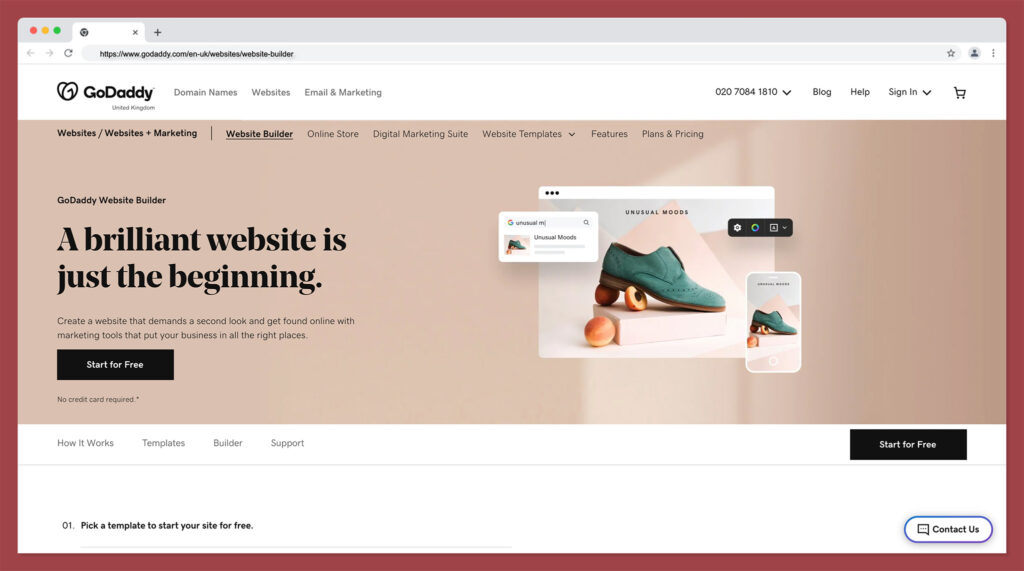

Original Source: https://1stwebdesigner.com/when-why-and-how-should-you-use-a-wysiwyg-editor-on-your-wordpress-site/

Have you ever felt perplexed about how to use WYSIWYG editors in WordPress? We’ll guide you through what WYSIWYG editors are, give you tips on using them, and show you an alternative to the built-in WYSIWYG editor for WordPress.

What is a WYSIWYG editor?

WYSIWYG stands for “what you see is what you get”. This means that whatever content is entered into the editor is shown exactly as it will look when it is published. It works much like a word processor such as Microsoft Word, with similar formatting tools on a toolbar at the top. WYSIWYG editors are usually integrated into apps and websites, where they are used for content management, website building, messaging, and more. However they’re used, these editors can significantly save development time, reduce maintenance and staffing costs, and give users a familiar editing interface.

When should you use a WYSIWYG editor on WordPress?

In WordPress, the WYSIWYG editor is the primary input field for post and page content. Thus, whenever you create and edit content on WordPress, you use a WYSIWYG editor.

What are the benefits of using a WYSIWYG editor?

With a WYSIWYG editor, users don’t need to know much HTML to manage and format their content. They can write, insert images and other files, and perform rich text editing (and more) just by using the toolbar buttons.

A modified version of TinyMCE, an open-source WYSIWYG editor, is built into WordPress. Its extensibility allows WordPress plugin and theme developers to add custom buttons to the visual editor’s toolbar. However, the built-in editor often won’t be enough: there will be times when you need a faster and more sophisticated editor. As we explain below, you can greatly improve on the built-in editor by setting up an alternative. You can read more about how easy it is to do here.

How to extend the built-in WYSIWYG editor in WordPress

To display the full TinyMCE toolbar, with access to its more advanced features, add the following code to your theme’s functions.php file. It enables buttons that are hidden by default:

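```php
// Append buttons that TinyMCE hides by default to the third toolbar row.
function enable_more_buttons( $buttons ) {
    $buttons[] = 'fontselect';     // font family dropdown
    $buttons[] = 'fontsizeselect'; // font size dropdown
    $buttons[] = 'styleselect';    // formats/styles dropdown
    $buttons[] = 'backcolor';      // text background color
    $buttons[] = 'newdocument';    // clear the editor content
    $buttons[] = 'cut';
    $buttons[] = 'copy';
    $buttons[] = 'charmap';        // special character picker
    $buttons[] = 'hr';             // horizontal rule
    $buttons[] = 'visualaid';      // toggle visual aids for invisible elements
    return $buttons;
}

// Hook the callback into the filter for the third row of editor buttons.
add_filter( 'mce_buttons_3', 'enable_more_buttons' );
```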
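If a plugin or another part of your theme already adds some of these buttons to the third row, the snippet above could end up listing them twice. The sketch below is a hypothetical variant (the name `enable_more_buttons_sketch` is ours, not part of WordPress) that appends each button only if it is not already present. It assumes, as the snippet above does, that WordPress passes the current row as a plain array to the `mce_buttons_3` filter; the `function_exists()` guard means the file can also be run from the PHP command line to check the callback’s output without a WordPress install. Use it instead of, not alongside, the original function.

```php
<?php
// Hypothetical variant of enable_more_buttons(): same buttons, but skips
// any button that is already in the row to avoid duplicates.
function enable_more_buttons_sketch(array $buttons): array {
    $extra = ['fontselect', 'fontsizeselect', 'styleselect', 'backcolor',
              'newdocument', 'cut', 'copy', 'charmap', 'hr', 'visualaid'];
    foreach ($extra as $name) {
        // Strict comparison so only exact button names count as present.
        if (!in_array($name, $buttons, true)) {
            $buttons[] = $name;
        }
    }
    return $buttons;
}

// Register the filter only when WordPress has loaded add_filter().
if (function_exists('add_filter')) {
    add_filter('mce_buttons_3', 'enable_more_buttons_sketch');
}

// Outside WordPress: simulate a row that already contains 'hr'.
$row = enable_more_buttons_sketch(['hr']);
echo implode(',', $row), PHP_EOL;
```

Checking with `in_array()` keeps the existing order of the row intact and adds the new buttons after it, which matches how the original snippet appends with `$buttons[]`.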

An alternative WYSIWYG Editor

If the default editor is too limited for your project, you can integrate an alternative WYSIWYG editor that allows more complex editing for your users. One of the top alternatives is Froala’s JavaScript web editor, which has a lot to offer developers and users alike. Its easy integration, rich features, customizability, and informative, well-organized documentation make production easier for developers. It also gives users a better editing experience thanks to its clean, fast, and intuitive design, with a flat interface and SVG icons, buttons, dropdowns, and popups. It has full mobile support: its popup formatting controls look the same on mobile devices and desktops, and images and videos can be resized.

Froala is easy to customize, fully scalable, and fast, loading in less than 40 milliseconds. Compared to TinyMCE’s 15 integration guides, Froala provides 17 framework libraries, and it is the first editor to provide SDK libraries for 6 different servers. Its intuitive interface also accommodates 100+ features without overwhelming users with a complicated GUI.

Migrating to Froala

It only takes a few steps to replace your standard WordPress WYSIWYG with the robust Froala:

Download or clone the wordpress-froala-wysiwyg-master plugin.

Under the plugins folder of your WordPress installation, make a new folder and paste the contents of the downloaded file there.

Alternatively, in your WordPress admin area, go to Plugins and click “Add New”. Search for “Froala WYSIWYG Editor” and follow the automated installation process.

On the Plugins page of your admin area, activate the Froala plugin. It will replace WordPress’ default editor.

Once you’ve completed these steps, you’re all set to use a powerful, faster, and cleaner editor. To learn more about integrating and using Froala with WordPress, check this page out.

If your project requires more extensive features than what the built-in WordPress editor offers, Froala is definitely a great option to explore. Read more about Froala here.

Will you upgrade or extend your current WordPress WYSIWYG editor?

Based on what we’ve covered in this article, you can clearly do much more with your WordPress WYSIWYG editor than it offers out of the box. For instance, you can extend the TinyMCE editor to give your users more control over the formatting of their content. Better yet, you can go further and upgrade to a more powerful WYSIWYG editor altogether. Either way, following the steps in this article will give your users a better content entry and editing experience in WordPress.

Original Source: https://designrfix.com/reviews/cisco-phone-accessories

Cisco phone accessories have become more than just the workhorses they’ve been in the past. With so many styles, colors, and designs available, choosing just one can be difficult. To make your choice easier, we’ve broken down the top 10 Cisco phone accessories based on cost, consumer reviews, warranty, and other important …

Original Source: https://www.creativebloq.com/news/sims-4-update

Ageing! Death! Incest!

Original Source: https://abduzeedo.com/futurism-inspired-packaging-design-bella-ciao-craft-beer

Futurism inspired packaging design for Bella Ciao Craft Beer

abduzeedo, July 26, 2022

Marçal Prats shared a packaging design for Bella Ciao, a lager craft beer that pays homage to the famous libertarian anthem, a call for universal struggle against all political and social oppression.

The main purpose of the design is to express the power of protest through a striking contrast between red, black, and white. The style also evokes the vibrant, expressive, and even lo-fi finishes of the underground political print materials of the 1970s.

The design evokes the Italian origin of the song, using images taken from pamphlets of social protests in Rome during the 1970s, while the ground-breaking Futurism work by the Italian artist Fortunato Depero inspires the logotype and typefaces.

Inspiration – Italian Futurism & 1970s Social Demonstrations

Packaging design

Credits

Packaging, Beverages, F&B, Branding, Typography

Location: Tarragona

Bella Ciao craft beer can be purchased at the l’Anjub Store.

For more information about Marçal make sure to check out:

Behance

Website

Original Source: https://abduzeedo.com/panchita-short-film-charity

Panchita! Short film for charity

abduzeedo, July 27, 2022

Unsaid Studio, a design and motion company in New York City with roots in Brazil and the UK, is working on Panchita!, a short film now in production. It is inspired by the true story of a girl looking to find her own stable foundations and follow her dreams. A film made with love and for a good cause.

Client

200 million people still live in poverty in South America… TECHO is an NGO that makes a tangible difference. In the last 25 years, they have built homes for 131,000 families in 18 countries and counting.

Reason for undertaking

To help those in need: to drive change, raise awareness, and support TECHO via a GoFundMe campaign.

To respond to an inspirational true story: “Mama, we have a floor! We have a floor!” From the moment a volunteer described the joy of seeing a 6-year-old girl dance in her new TECHO house for the first time, our short story began to fall into place. Upon unearthing a magical old VCR, Panchita discovers her calling – to tap dance. Guided by an unexpected, supernatural friend and mentor, her new passion transforms her very surroundings.

Give something back with our art. Personally, as artists, we wanted to combat the growing feeling that our space in digital art is becoming less permanent, less meaningful, and more greed-driven. We are proud to be empowering South American artists in the process.

Challenges

What creative and technical challenges were involved and how did you solve them?

Establishing the look of the film was a balancing act. Favelas are generally neither pleasant nor very inspiring places to set a film, but through Panchita’s eyes, it’s a wide new world full of tropical colors and simple, innocent shapes. The film needed to inspire people to make change and to uplift them. TECHO empowers people and is something to be optimistic about; this is a tale of hope and triumph against adversity. We settled on an illustrative, almost toy-like miniature visual style that appeals to all ages.

The Sand

Within the favela, no space is free from the elements; the very ground is unforgiving and stifles Panchita’s dreams. The sand, a key member of our cast, was a challenging balance both visually and technically: it needed to move realistically, feel miniature, and stay consistent with our world of simplified shapes.

Miniature Toylike Set

When you donate, TECHO enables you to reach into a favela and affect someone’s life in a physical way. We wanted our toy-like set to make it easy to imagine placing a new model home into the scene with your own hands.

Credits

Doug Bello – Director / Executive Producer / Story

Tom Alex Buch – Art Director / Creative Director

Jonathan Souza – Animation Director

Luciano “The Ear” Nader – Modeler

Pablo Porto – Lookdev / Render

Allan Foxlau – Designer / Art Director

Liza Domingues – Art Director

Mayumi Kimura – Character Designer

Rodrigo Rodrigues – Technical Director / Rig

Joanna Vieira – Costume Designer

Ariane Pelissoni – Modeler

Keka Petrich – Producer

Sara Félix – Producer / VO artist

Rodrigo Lescano – CG Generalist

Bruno Borges – Consultant

Jarbas Agnelli – Creative Consultant

Andressa Paccini – Producer

Fernando Rodrigues – Writer

Arthur Azevedo – Modeling

Maurício Nader – Soundtrack

Aimée Ueda – Animator

Anna Julia Queiroz – Animator

Bruno Fabian – Animator

Gustavo Oes – Animator

Fabricio Luiz – Animator

Jonas Silva – Animator

Jorge Amorim – Animator

Guilherme Peixoto – Animator

Angy Garzon – Designer

Ana Testa – Marketing Director

Orlando Souza – Architectural Adviser

For more information check out unsaidstudio.com

Original Source: https://abduzeedo.com/using-design-thinking-create-ideas-better-meet-customers-needs-and-desires

Using design thinking to create ideas that better meet customers’ needs and desires

abduzeedo, July 26, 2022

We usually post about design inspiration and tend to focus on the visual side of things, but we also understand that design is form and function in harmony. So for this post, we’d love to share a UX design case study highlighting the design thinking framework: empathize, define, ideate, prototype/test, learn, and iterate. The project was shared by Alex Gilev, and it is quite helpful for anyone thinking about venturing into the UX design field.

1. Empathize

Define Key Personas.

Understand Pains & Gains.

2. Define

Define the problem.

Establish OKRs.

Refine Information Architecture (IA).

3. Ideate

Divergent thinking.

Pushing the envelope.

4. Prototype

Test hypotheses in low-fidelity.

Refine in high-fidelity.

5. Learn & Iterate

Establish the Design Framework.

Create the Design Prototype.

For more information make sure to check out Alex Gilev on:

Dribbble

Website

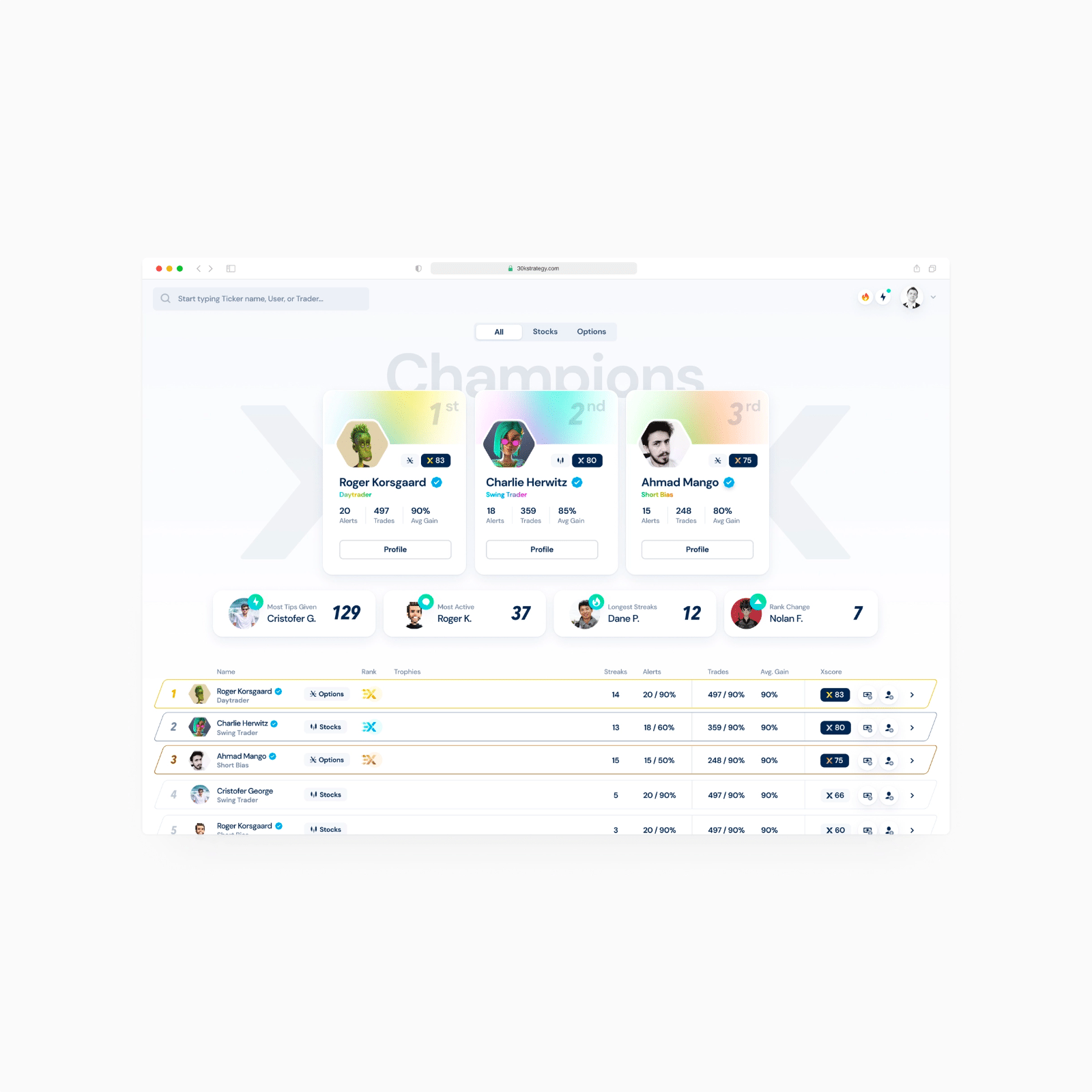

Original Source: https://ecommerce-platforms.com/articles/squarespace-vs-godaddy

GoDaddy vs Squarespace: Which tool should you be using to build your website?

These days, there are plenty of great options out there for business owners looking to develop their own site. Services like Squarespace and GoDaddy prove you don’t need a huge amount of developer knowledge or a massive budget to begin growing online.

However, while both of these tools will help you build a website, they’re intended for very different audiences. While GoDaddy helps smaller businesses make their first “online presence” with a user-friendly design assistant, Squarespace’s stunning templates are more suited to portfolio creation and those in search of impressive visual appeal.

Today, we’re going to take a closer look at what GoDaddy and Squarespace can do, and how you can make the right choice for your business.

GoDaddy vs Squarespace: An Introduction

As mentioned above, both Squarespace and GoDaddy offer website building tools for today’s online business owners. GoDaddy didn’t start life as a website building solution. Instead, the company initially focused on selling domain names to a huge range of customers worldwide.

As the online landscape continued to evolve and more domain companies appeared on the market, GoDaddy released its website builder as a way of becoming more competitive (and valuable). You can even sell products with the websites you create via GoDaddy.

Squarespace has always been a website builder. The solution focuses on visual appeal first, with a huge range of stunning, award-winning, and professional templates to help you stand out online. With Squarespace, you can build anything from a compelling portfolio to a blog or store.

While GoDaddy is ideal if you want to create your website and go live as quickly as possible, thanks to its convenient ADI setup and easy-to-use interface, Squarespace takes a different approach. If you’re a little more creative and don’t mind spending more time customizing and optimizing your website, Squarespace may be the right pick for you.

GoDaddy vs Squarespace: Pros and Cons

If you want to decide between these two website builders as quickly as possible, few things are more enlightening than a quick pros and cons list. Let’s see where GoDaddy and Squarespace excel, and where they fall short.

GoDaddy Pros and Cons

Pros 👍

Very easy to use environment with ADI functionality

Switch themes whenever you choose with automatic reformatting for content

Easy to test with a free version, so you can decide if the site builder is right for you

Appointment management, online selling, and other features available

Trusted hosting and domain names included with your site builder

Mobile app for editing your content anywhere

24/7 customer support

Cons 👎

Very limited customization options

Not much creative freedom for website builders

Not as visually appealing as other website builders

Squarespace Pros and Cons

Pros 👍

Lots of customization options for your website or store

Appointment booking, online selling, and subscription selling

Blogging and marketing features to help you stand out online

Award-winning themes and templates for design

Excellent customer support

Relatively easy to use environment for most beginners

Flexibility for growing brands

Cons 👎

Pricing plans can be a lot more complex

No AI solution for helping you to build your website

Not the most advanced for ecommerce

GoDaddy vs Squarespace: Core Features

Both Squarespace and GoDaddy will give business leaders the basic functionality they need to build an online website or store. However, there are some major differences in the experience you’re going to get from each solution.

Themes and Editors

Making your website look incredible is probably one of the first steps you’ll take when designing an online presence. GoDaddy has a reasonable selection of themes to choose from, with around 22 categories, and 100 design variants overall. There’s a good chance you’ll find something suited to your company, though the overall appearance may seem a bit plain at times.

GoDaddy’s themes are based on your industry, so it’s easier to find something which seems reasonably relevant to your needs. Plus, each theme comes with stock images included, or you can upload your own visual content if you prefer.

All of the designs are mobile responsive, and there are hundreds of pre-made sections in each template for you to customize. You can add your own content, including videos, implement HTML and more. As an added bonus, if you want to change your theme, you can do so at any time and your content will adapt automatically, so you don’t have to start from scratch.

GoDaddy also offers ADI, or Artificial Design Intelligence, to assist you in designing your website. You answer a series of questions about your business, and the system creates a design based on your answers, so you start with a fantastic and relevant template.

While GoDaddy is reasonably impressive from a design perspective, it can’t compete with Squarespace. Widely regarded as one of the best options for website design based on its templates alone, Squarespace offers beautifully crafted and very professional themes.

While GoDaddy uses artificial intelligence to do most of the hard work for you, Squarespace places you in the driving seat. You start by choosing from a wide selection of themes, expertly chosen by design pros. You then populate the theme with your own content, and make adjustments.

Not only do Squarespace’s themes look phenomenal – they’re actually created by professional designers – but they’re very flexible in terms of what you can edit too. Squarespace gives businesses a level of customization difficult to match elsewhere. If you do feel overwhelmed by all your options, the “Design Hero” service will help you to choose the best elements for your website.

Like with GoDaddy, everything you create will be responsive, and you can adapt your theme to suit the kind of industry you’re in, as well as the type of site you want to build.

Ecommerce features

You can build a range of different types of websites with both GoDaddy and Squarespace. Depending on the theme you choose, and the functionality you implement, you’re free to experiment with your own portfolio, blog, or even a membership site. However, perhaps most importantly, you can also create an ecommerce store too.

Both Squarespace and GoDaddy have a handful of ecommerce features in common, such as:

Payment processing: You can support PayPal, Square, Stripe, and other processors.

SSL security: Both sites protect customer transactions with encryption.

Abandoned cart recovery: You can remind customers to check out after they leave.

Promotion and discount codes to improve sales

Syncing with Instagram and other tools to boost sales

GoDaddy does have some handy inventory management and store management tools as well. You can keep track of stock across multiple channels, and even allow customers to place orders when you’re out of stock, by creating and managing backorders.

However, you can’t sell offline with GoDaddy (at the moment). Squarespace has a Square integration for taking card payments offline and syncing everything with your online store. This isn’t something you get when you’re using GoDaddy.

Squarespace also allows you to sell digital products, such as online courses and software downloads, as well as physical ones, whereas GoDaddy focuses exclusively on physical products. You can also create promotional pop-ups with Squarespace, which can boost your chances of making a sale.

Blogging and marketing

If you’re keen to boost your presence online, you’ll need basic blogging and marketing tools. GoDaddy is quite limited in this regard. On the blogging side, you can separate your posts into categories, embed RSS feeds, and track performance through analytics. However, there aren’t any extensive SEO tools to help you rank in search engines.

With Squarespace, you get a much more extensive blogging experience. You can archive posts, create social bookmarks, and add search features to help customers find what they’re looking for. There are even some handy SEO tools, although Squarespace isn’t the best on the market in this regard.

In fact, both GoDaddy and Squarespace could be better when it comes to search engine optimization. With Squarespace you can submit a sitemap to Google, which is helpful, but optimizing your content can be a little complex. At a basic level, both tools let you change URL slugs, meta titles, and meta descriptions, and add image alt text.

From a marketing perspective, both GoDaddy and Squarespace support custom email addresses through G Suite, and GoDaddy also offers its own built-in email service for an extra cost. You can run email marketing campaigns with both tools too. Squarespace has its own “Squarespace Email Campaigns” service with professional templates.

Alternatively, you can integrate your store with Mailchimp or another email marketing app from the Squarespace Extensions store. Beyond email, the other marketing options are fairly similar: both GoDaddy and Squarespace allow you to link to social media accounts such as Twitter, Facebook, and Instagram.

GoDaddy takes things a step further with a live feed section and a post creator. There’s a YouTube livestream option for sharing what you’re doing with website visitors, which is ideal for boosting site traffic. Squarespace, on the other hand, has a “social shares” function so customers can post your content directly to their own social feeds in a couple of clicks.

Squarespace also offers apps like “Unfold” in its app market, which help you create social media posts that match your brand image.


Squarespace vs GoDaddy: Help and Support

We all need a little help sometimes. Even with the best website builders, such as Wix, WordPress, or Shopify, you’ll still need to reach out for help from time to time. Both Squarespace and the GoDaddy website builder come with support options.

Both vendors offer 24/7 support and live chat for ease of use. You also get a range of help articles from both, so you can find guidance on things like setting up a custom domain or using the drag-and-drop builder.

Squarespace is a little more in-depth than GoDaddy from a support perspective, with 24/7 email support and chat options. GoDaddy offers phone support, which Squarespace doesn’t, but this may not be a sticking point for today’s customers.

For a small business, the Squarespace articles about web design and marketing tools are a little easier to follow than GoDaddy’s pieces on web hosting bandwidth and website creation.


GoDaddy vs Squarespace: Pricing

Price should never be the only factor you consider when choosing a website builder, but it’s still an important one. Squarespace plans are significantly more expensive than GoDaddy’s.

With GoDaddy, you get a web hosting service, domain registrar, and site builder in one, with prices ranging from $6.99 to $29.99 per month. All packages come with the same themes and support. However, if you’re running a business, you should probably start with at least the Premium plan.

The $14.99 Premium plan comes with extra social sharing tools, SEO guidance, and the option to accept bookings and payments, none of which are available on the Personal plan. The Commerce plans also let you sell a wide range of products online.

Squarespace has higher prices and a steeper learning curve, but it’s also more versatile. Prices range from $12 to $40 per month, including two cheaper plans for individuals or companies that aren’t looking to sell online. The more expensive plans unlock online selling along with extra features such as affiliate commissions for partners and Instagram shopping.

SSL security is included on every Squarespace business plan, which isn’t the case with GoDaddy, so check carefully what you actually get from a basic plan. Neither platform offers a free plan, though you can start building a website with GoDaddy for free (without publishing it).

Free custom domain offers are available with both Squarespace and GoDaddy plans, and both run various discounts throughout the year. Don’t forget to account for transaction fees when you take payments online.


GoDaddy vs Squarespace: Conclusion

Both the GoDaddy ecommerce site builder and the Squarespace website builder have a lot to offer beginners in online selling. In a side-by-side comparison, though, there are some major differences. GoDaddy is a hosting company with fantastic ease of use, plenty of tutorials and guidance for beginners, and AI-powered assistance.

Squarespace, on the other hand, allows you to build a much more advanced website with custom, premium templates, and a fantastic range of customization options. All of Squarespace’s plans come with SSL certificates, excellent bonus features, and the option to expand your site functionality with available add-ons.

Our advice: if simplicity matters above everything else, stick with GoDaddy as your site building and hosting company. If you want more freedom and design flexibility, choose Squarespace.

The post The Ultimate Squarespace vs GoDaddy Website Builder Comparison appeared first on Ecommerce Platforms.