The Best Logo Maker for Your Brand Design

Original Source: https://ecommerce-platforms.com/articles/best-logo-maker

The best logo maker can be an excellent tool for new businesses hoping to develop a memorable image. After all, your logo is more than just a crucial part of your website design. It’s how your customers identify your business and how you differentiate yourself from the competition.

A basic logo maker, or logo generator, might not be able to give you the full creativity and scope you’d get from a professional designer or graphic artist. However, it can offer a fantastic starting point when you’re struggling to find your ideal image.

Logo makers offer quick and convenient solutions for bringing your brand to life, often enhanced by artificial intelligence and extensive customization features. They can even act as a source of inspiration when you’re searching for ideas to give your designer.

Today, we’re going to look at some of the top logo makers on the market, chosen for their versatility, feature-richness, and customization capabilities.

What are the Best Logo Makers?

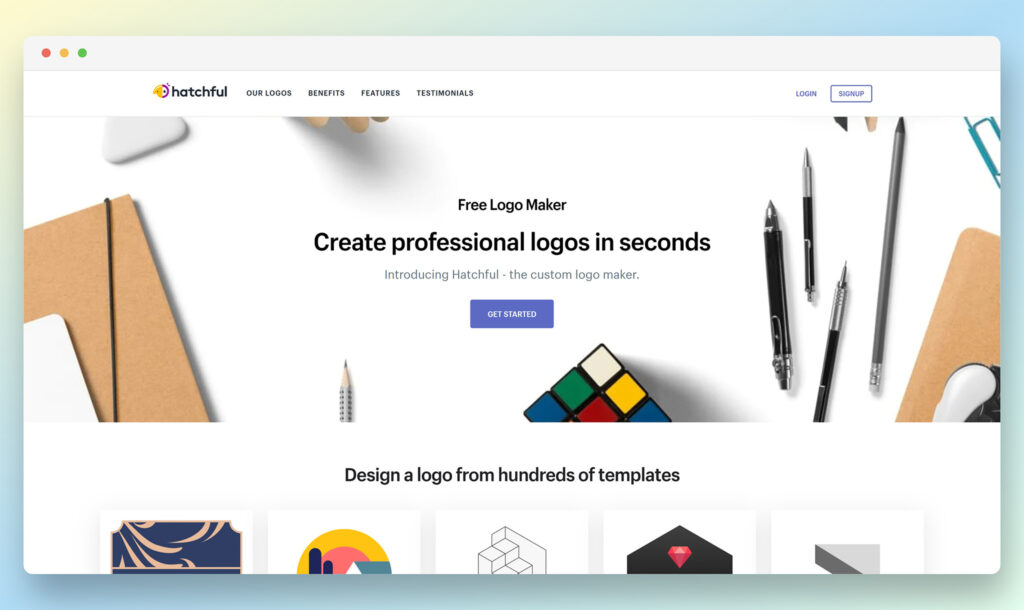

Hatchful (by Shopify)

Hatchful is the state-of-the-art logo creator produced by the team at Shopify, the world’s leading ecommerce site builder. This convenient online logo maker promises a quick, simple, and affordable way to design a custom logo in seconds, with minimal effort. You can choose from hundreds of templates, and experiment with designs specially chosen for different industries.

The high-resolution logo maker works on Android and iOS, and has already created logos for more than 140,000 business owners. Outside of the various templates available to get you started, you also get a range of fantastic customization features. You can adjust your icons, fonts, and color combinations within a straightforward design studio.

As an added bonus, Hatchful allows you to download assets for your social media sites, as well as your website, alongside business card designs, and even images for your merchandise. Features of the Hatchful service include:

Dedicated mobile apps

Wide range of designs by industry

Full brand packages for social media

Hundreds of pre-designed templates

Intuitive design studio

Pricing

It’s free to create a logo with Hatchful, even if you don’t have an existing Shopify account. However, there are some limitations on what you can get for free. If you want to download your logos, you’ll need to pay for each file you access. You’ll also need to pay extra for premium logo templates and features within the app.

Pros 👍

Fast-paced and convenient logo design

Great for experimenting with brand designs

Free social media assets optimized for online channels

High resolution logo downloads available

Convenient range of industry templates

Cons 👎

Extra fees for premium templates

Some limitations on custom assets

Wix Logo Maker

The Wix Logo Maker was designed by the team at Wix to give business users an easier way to launch their brand online. You will need to sign up for a Wix account to get started, but once you do, the process is extremely straightforward. Wix uses AI to guide you towards a design that suits your business.

The system will ask you what kind of styles you prefer, what industry you’re involved with, and more, to ensure you get the most relevant logo for your needs. There are tons of ways to explore different assets by category and industry. What makes Wix particularly impressive is the customization available for your design elements.

There’s a comprehensive design tool where you can edit your DIY logo by adjusting shape, style, opacity, and even color combinations. You’ll also be able to see how your logo might look on different backgrounds. There’s even the option to download a low-resolution file for free. Features include:

AI guidance for choosing your logo

Business card and social media asset creation

Mock-ups for your branded merchandise

Logo design inspiration and advice

Various download files

Pricing

While free logo design is available, your logo options will be limited. You will need to pay to access your logo in a range of higher-quality file types. The free option has a very low resolution, so it won’t do much for a growing brand. Paid downloads start at around $20, which includes full commercial rights and standard files. Upgrading to the Advanced package at $50 gives you higher resolution.

Pros 👍

Useful AI guidance for beginners

Lots of customization options for your logos

Help with mocking up different merch designs

Various industries and themes to choose from

Easily add your logo to business cards and websites

Cons 👎

Can be expensive for high-res files

Requires an account sign-up

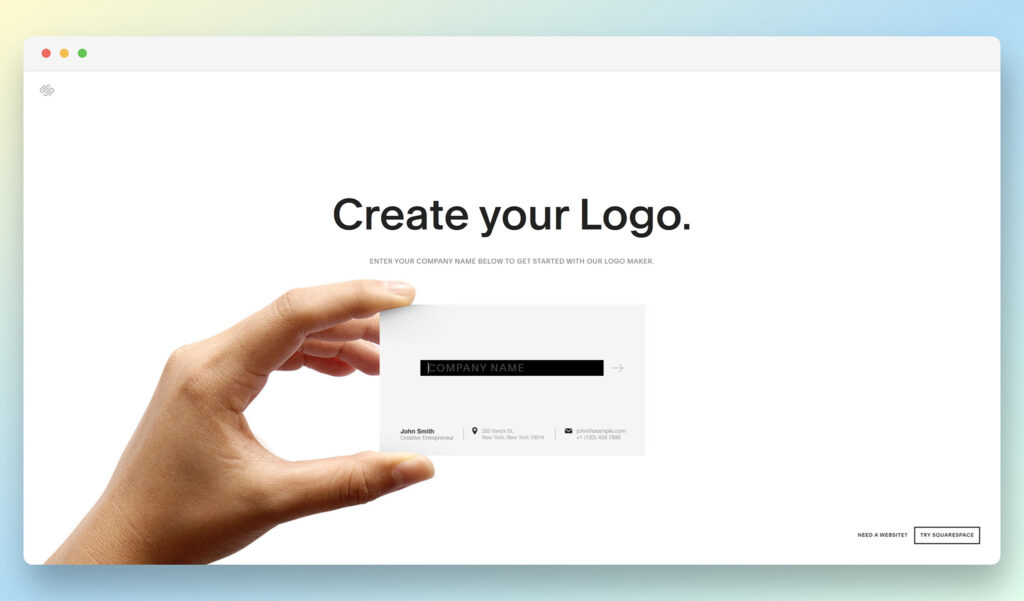

Squarespace Logo Maker

Squarespace is another well-known website builder which can also support companies in creating custom logos. The easy-to-use designer makes it simple to sort through available templates according to your industry. You can even download a version of your logo for free, provided you already have a Squarespace account.

Squarespace has a range of customizable fonts, colors, and icons to choose from. You can play around with the composition of your logo, and produce different variations to see which appeal most to your brand stakeholders. All you need to do to get started is enter the company name. After that, you’ll get the option to seek out icons and designs based on keywords.

You can adjust the color palette of your images as you go, and move the positioning of different icons. There’s even guidance from Squarespace to help ensure your logo makes the right impact. You don’t have to sign up until you’re ready to download either. Features include:

Wide variety of pre-existing icons

Customize colors, fonts, and icon positions

Easy-to-use environment with customer support

High-resolution downloads in greyscale and color

Free access for Squarespace account holders

Pricing

As mentioned above, the Squarespace Logo Maker is free to use for logo creation, but you won’t be able to download the design until you’re ready to sign up for a Squarespace account. Squarespace starts at $16 per month for the basic account, if you want to build a portfolio website or blog.

Pros 👍

Wide selection of logo designs and customizations

Easy-to-use ecosystem

Great support from the Squarespace team

Free access for Squarespace users

Lots of freedom for positioning icons

Cons 👎

Limited icon options

Does require a Squarespace account

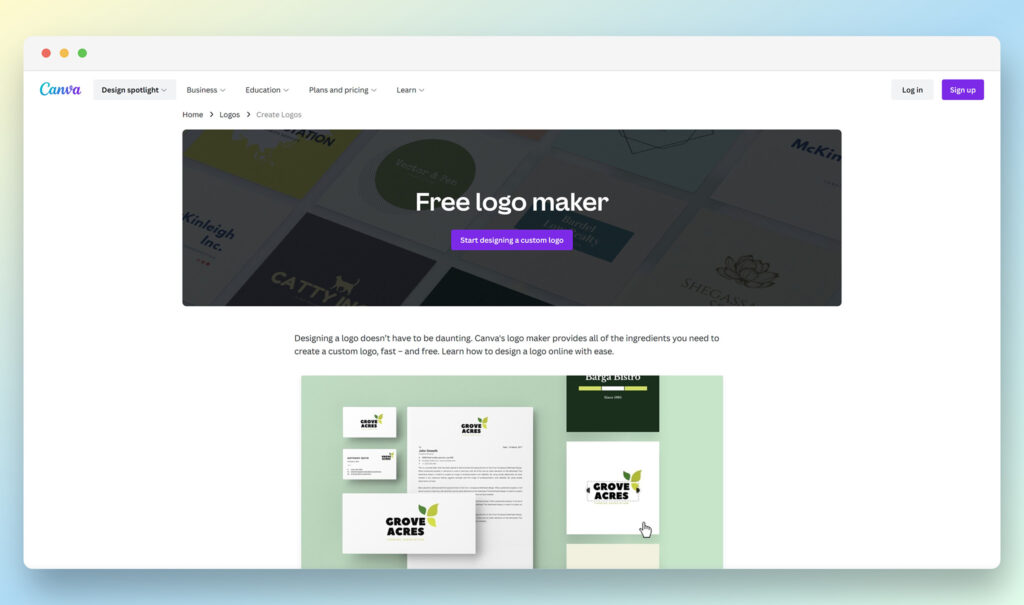

Canva Logo Maker

Widely regarded as one of the most popular tools on the market for simple graphic design, Canva makes it easy to create a host of social media posts, graphics, and visuals for your online presence. The Canva logo maker is specifically designed to make creating a beautiful new logo as quick and simple as possible.

All you need to do is launch the Canva app and use the “logo” option to browse through a library of professional-looking templates, built for modern businesses. There are various industry options available, to ensure you get something specific to your audience. Once you’ve chosen a template, you can tweak everything from color combinations to fonts.

The drag-and-drop tool also lets you combine various icons to build your own designs from scratch. You can experiment with photo filters and image flip, and even add animations to your logos to make them more eye-catching. Features include:

Wide range of color, image, and background choices

Convenient drag-and-drop builder

Downloads for PNG, JPEG, and PDF

Animation functionality available

Mock-up generator for adding your logo to merch

Pricing

You can get started with the Canva logo maker for free, but you’ll be limited in the number of customizations you’ll have. Upgrading to a premium package will give you more access to animations, unique templates, and the ability to download a transparent version of your logo, which means you’ll have more freedom for where you can position it. Plans start at $12.99 per month.

Pros 👍

Excellent range of customization options

Fantastic templates and icons for beginners

Excellent animations for videos

Mock-up generator included

Lots of download options

Cons 👎

Can take some time to get used to the features

Premium packages have the best capabilities

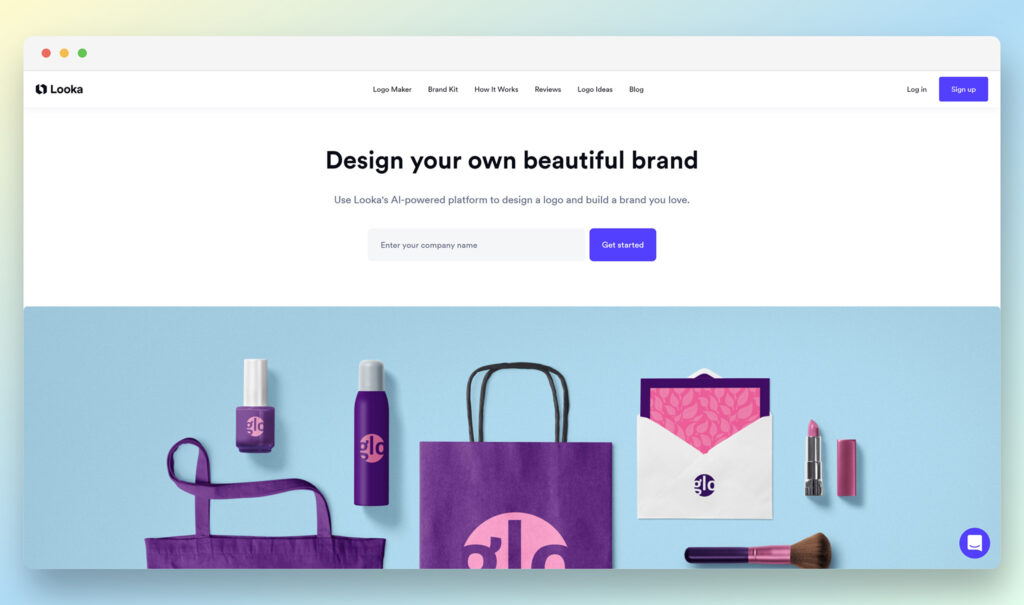

Looka

When it comes to quick and simple logo design, Looka is one of the better-known tools on the market. The company offers users a convenient AI platform where they can design and build eye-catching images for their brand, and even add logos to various pieces of merchandise.

Using Looka is extremely straightforward. The AI system will ask you a few questions about your business and branding requirements. From there, you can explore potential logo suggestions intended to represent your company. The customization options allow you to adjust brand colors, fonts, and icons. Once you’re done, you can mock up a range of brochures and business cards.

Looka supports the development of everything from basic logo designs to comprehensive logo files in a range of resolutions. You can create full brand kits and social media templates too. Some of the features include:

Black and white, color, and transparent logos

Wide selection of different file options

20 asset types for mock-ups, including invoices and email signatures

Perfectly optimized social media assets

Business card designs and templates

Brand kit creation

Pricing

There are a few ways to purchase a logo from Looka. The first option is to simply buy one of your logos in the form of a PNG file for £15. After that, you can upgrade to a one-time purchase of a logo in multiple file types for £50. Alternatively, you can access full brand kit subscriptions for £84 a year, or £156 per year if you want to build a website too.

Pros 👍

Excellent range of high-resolution files

Social profiles and posts

Business cards and email signatures

Convenient AI solutions for logo inspiration

Easy-to-use design experience

Cons 👎

No free download option

Can be expensive for some extra features

UCraft

Unlike some of the other logo makers mentioned in this list, UCraft doesn’t leverage AI to help you make your logo, or give you a host of templates to choose from. Instead, the solution gives you more freedom to design something from scratch.

This is an excellent tool for people in search of a more comprehensive design system for building the ideal logo. You can start by choosing icons suitable to your brand and add them to your own custom canvas. From there, you’ll be able to add text elements, brand names and slogans.

You can customize the text, change the color and size of the font, add your own shapes and more. There’s even a “Preview” option so you can see what your content is going to look like. Everything is wonderfully easy to use and straightforward. The user-friendly design software is great for entrepreneurs with no experience in professional logo design. Features include:

Downloads in .PNG and .SVG

Convenient range of icons to choose from

Customization system with drag-and-drop functionality

Lots of colors and icons

Extra landing page, blog, and store creators

Pricing

The pricing for UCraft gives you access to the full tool, which you’ll use to create websites, blogs, and a range of other solutions, like landing pages. The pricing options start with a free service which includes access to a multi-page website and a high-res logo file. After that, pricing begins at $10 per month.

Pros 👍

Lots of customization options

Easy-to-use environment

Extra free assets available for designers

Free package available

Small learning curve

Cons 👎

No AI or extensive templates

Can be confusing at first

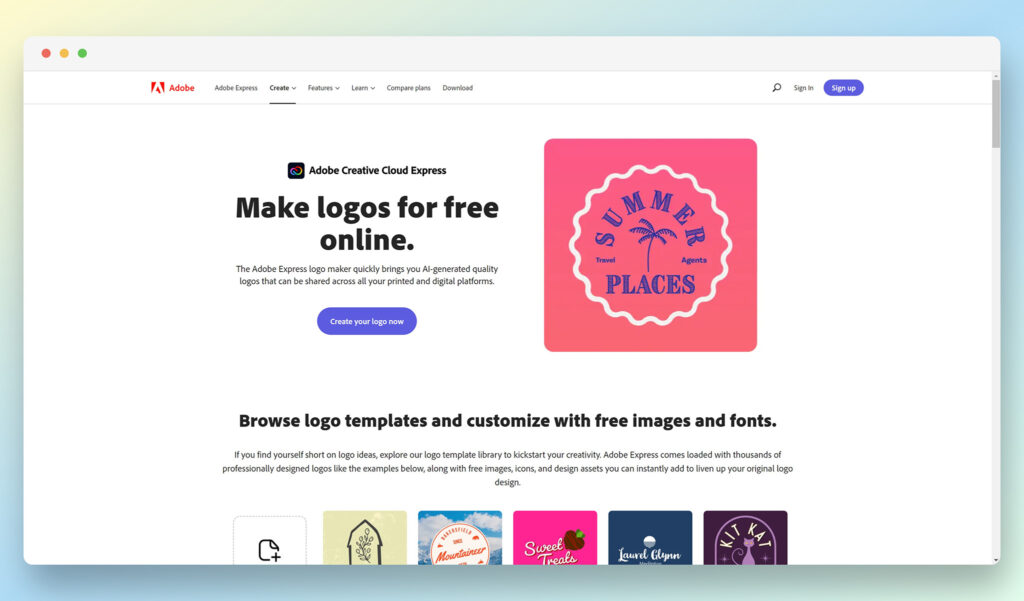

Adobe Logo Maker

Created by the experts at Adobe, responsible for tools like Photoshop and Lightroom, Adobe Express logo maker allows you to create attractive logos online for free. The Adobe Express logo maker tool rapidly produces a host of high-quality branded assets you can use across all digital and printed platforms, and you don’t need a credit card to sign up.

Adobe Express gives users plenty of templates and font options to choose from. When you begin using the service, you’ll start with a few questions from an AI system. You’ll need to provide information on your business name, theme, and business category, so the service can suggest potential designs suitable for your organization.

The customization options with Adobe Express might not be as advanced as you’d get elsewhere, but you can rest assured all of the logo designs will look clean and professional. You can build your own templates, and download a 500px JPG or PNG file for free too. Features include:

AI logo design generation

Wide range of themed icons and templates

Animated logos and videos available

Step-by-step guidance

Social media logos

Pricing

You can use the basic version of the Adobe Express logo maker for free, which comes with a range of templates and themes to choose from, as well as customization options. If you want to download higher-resolution versions of your logo, or you’d like more advanced editing features, you can spend $9.99 per month on the premium package.

Pros 👍

Animated logo videos and gifs

Various social media optimized assets

AI support to help with logo generation

Easy-to-use interface for customization

Lots of support for business cards and brochures

Cons 👎

Best features reserved for the premium package

Not a lot of customization options

Create a Quality Logo Online

Today, there are plenty of great tools for creating vectors and simple logos online with minimal design knowledge. All of the options above will help you to create a beautiful logo in as little time as possible, particularly if you don’t have the cash to buy a great logo from a designer.

However, you’ll need to be careful when choosing your ideal service. Not all solutions will give you the opportunity to download your logo ideas with a transparent background, or in a high-resolution file. Some products are also limited when it comes to creating a unique logo for your small business.

The fewer icons and color options you have to choose from, the more commonplace your logo will look. While no online design experience will give you the same quality as having your own logo made by a professional, finding a free logo maker with more flexibility will at least allow you to create a simple and effective design for your small business.

Once you have the vector files you’ve created online, you can always work with a designer to take your logo to the next level when you have a bigger budget.

The post The Best Logo Maker for Your Brand Design appeared first on Ecommerce Platforms.