A Showcase Of Lovely Little Websites

Original Source: https://smashingmagazine.com/2021/11/showcase-lovely-little-websites/

A map that blends past and present, a musical time machine bringing back distant memories, or an interactive graphic novel pulling you deeper and deeper into a powerful story — sometimes you come across a lovely little website that, well, instantly conquers your heart. It doesn’t necessarily have to be overly useful or practical. Instead, its true value shines in the experience you get from it. It might leave you with your jaw dropped, with a smile on your face, surprised, excited, or inspired.

In this post, we collected lovely little sites like these, found in the remote corners of the web. They are perfect for a short coffee break or whenever you’re up for a little bit of diversion. We hope you’ll enjoy them. Oh, and if you’ve come across a website that you feel is too good to keep to yourself, please don’t hesitate to share it in the comments below. We’d love to hear about it!

Plant Guides, From A To Z

Every office, including the home office, is better off with a lovely selection of beautiful plants. But which plants are easiest to look after for those of us who tend to be forgetful? Which ones require more care, and if so, what does that care usually involve?

How Many Plants is a wonderful resource that answers all of these questions. It provides a thorough overview of popular plants, sorted alphabetically and by care difficulty. You can even filter plants by their features (size, format, placement), plant type (traits, origins, pet-friendliness), and leaf look (shape and surface). A great reference site to keep nearby.

Covid Art Museum

Of course, design isn’t quite like art. While design tries to solve a particular problem, art makes us think and feel, provoking us and questioning the status quo. But art can also bring about new perspectives and change at times when they’re needed most.

The Covid Art Museum is a growing online exhibition of art born during the Covid-19 quarantine, now with 238 contributions by people from all around the world. Often the works are an attempt to cope with the world around us, and perhaps to take a slightly different perspective on how a changed world has changed the way we perceive it and our own lives.

Museum Of Annoying Experiences

How often do you feel frustrated these days? How often do you open a browser window just to find yourself stuck identifying fire hydrants and understanding confusing sentences? Or perhaps calling a customer support service just to be put on hold for half an hour (at best)?

The Museum of Annoying Experiences takes us on a journey to the year 3000, when bad customer service is nothing but a distant memory, observable only in exhibits that show how things used to be in the past (well, today), when most interactions were incredibly annoying. Each exhibit is interactive and playful, taking a fun look at the frustrations around us. Who knows: hopefully by the year 3000, all these annoying experiences really will be a thing of the past.

The Musical Time Machine

It’s still quite difficult to travel back in time, but fortunately, we can do so online. What if you wanted to listen to the pop charts extravaganza from the US back in 1955 or Uzbekistan in 1932? Well, Radiooooo has got your back (although you might need to sign up for a free basic plan first).

The website is a collection of songs gathered over decades and now searchable, with filters by genre, speed, country, and time period. You can pick slow for chilling, fast for dancing, and weird for bugging out; there is indeed something for everyone! And if you want to go fancy, there is a shuffle mode, with songs picked by the curators.

UX Misconceptions And Laws

When we design experiences on the web, we usually rely on things that worked well in the past. Of course, we don’t know for sure how well our solutions worked, and we don’t know whether they’d perform well next time around. But views emerge from our experiences, and as they gain ground, they become more established over time. Sometimes, this is exactly how misconceptions appear.

“10 misconceptions on UX” highlights common views and data around infinite scrolling, making everything accessible from the homepage, original design, mobile-first and user interviews, among others. Admittedly, the creators of the site are quite opinionated, and you might disagree with some statements, but the website is fun to play with, and there are dozens of random fun facts to explore as well.

Also, if you’d like to deep-dive into common principles and heuristics of UX, Jon Yablonski has collected dozens of Laws of UX on his beautiful website, featuring everything from Hick’s Law and the Law of Common Region to Tesler’s Law and the Zeigarnik Effect. Wonderful resources worth keeping close!

The Timeline Of The Web

The web has gone through quite a few changes over the last three decades. You might remember Perl 5, Firebug, Backbone.js, and the end of Flash, but many of the things we’ve experienced on the web have become quite blurry because they changed so quickly.

In The History of the Web, Jay Hoffman, with illustrations by Katerina Limpitsouni, celebrates the most important events in the web’s young history. It’s an evolving project that charts these events on a timeline, with useful resources and links for follow-up reading. A lovely little project to keep bookmarked.

Sounds To Help You Focus

Staying focused might easily be one of the biggest challenges when you need to get work done. If you’re working from home and are missing the familiar office sounds, I Miss The Office brings some office atmosphere into your home office — with virtual colleagues who produce typical sounds like typing, squeaking chairs, or the occasional bubbling of the watercooler.

The Boat: A Powerful Piece Of Storytelling

Some stories are so dense, so intense, that they capture you and don’t let you go. “The Boat” is such a story. Based on the short story by Nam Le, “The Boat” combines animation, audio, and ink and charcoal drawings into a powerful, interactive graphic novel.

It tells the story of Mai, a girl whose parents send her alone on a boat to Australia after the Vietnam War. And, well, the storytelling experience really is exceptional. Every little element and every carefully applied animation contributes to an atmosphere that reflects the fear and despair, but also the hope, tied to the escape. Take some time and see for yourself. It’s worth it.

Interactive Timeline… In Dots!

Dots, dots, and even more dots. But these are not just any ordinary dots. Every dot is a historic event, so you can just imagine what the whole picture looks like when you step back and take a look at Histography. This impressive interactive timeline spans 14 billion years of history, from the Big Bang to the 2010s. What started out as a simple project at the Bezalel Academy of Arts and Design by Ronel Mor has turned into a leading example of what creative timelines can look like.

All of the historical events shown in the interface have been drawn from Wikipedia, and newly recorded events are added on a daily basis. Not only can you jump between time spans ranging from decades to millions of years, but you can also choose to view a variety of events that happened in a particular period or target a specific event in time.

Designing A Galaxy Far Far Away

Take 22 Illustrator files that measure 1024 × 465152 px combined, put in 1,000 hours of work, and add the story of Star Wars Episode IV. What you get is a project that will make your jaw drop: SWANH. Brought to life by illustrator and graphic novelist Martin Panchaud, SWANH tells the whole story of “Star Wars: A New Hope” in a huge infographic that requires 403.5 ft (123 m) of scrolling to get from top to bottom. And, well, it’s worth it.

Made up of 157 images, the piece is impressive in its sheer dimensions, and so is the attention to detail that Martin Panchaud put into recreating the Star Wars universe. But SWANH is more than eye candy for Star Wars lovers. It’s also an experiment that sets out to contrast with what we usually expect on the web: quickly digestible content and short stories. Brilliant.

Design Facts That You Didn’t Know About

Humankind has always created; however, design as a craft, as we know and practice it today, is a rather young discipline. But that doesn’t mean it doesn’t have a lot of stories to tell. The project Design Facts by writer and art director Shane Bzok reveals them by serving up bite-sized pieces of design history that you probably haven’t heard of yet.

Did you know, for example, that the logo for the Spanish lollipop company Chupa Chups was designed by Salvador Dalí in 1969? That Adobe’s founders named their company after a creek that ran behind the house of one of them? Or that the Chanel logo with its interlocking C’s originally adorned the building of a French vineyard, and that Coco Chanel was granted permission by the vineyard owner to use it for her brand in the early 1920s? These are only three of the more than 130 surprising and informative design facts that Shane Bzok has collected. Perfect to squeeze into a short coffee break.

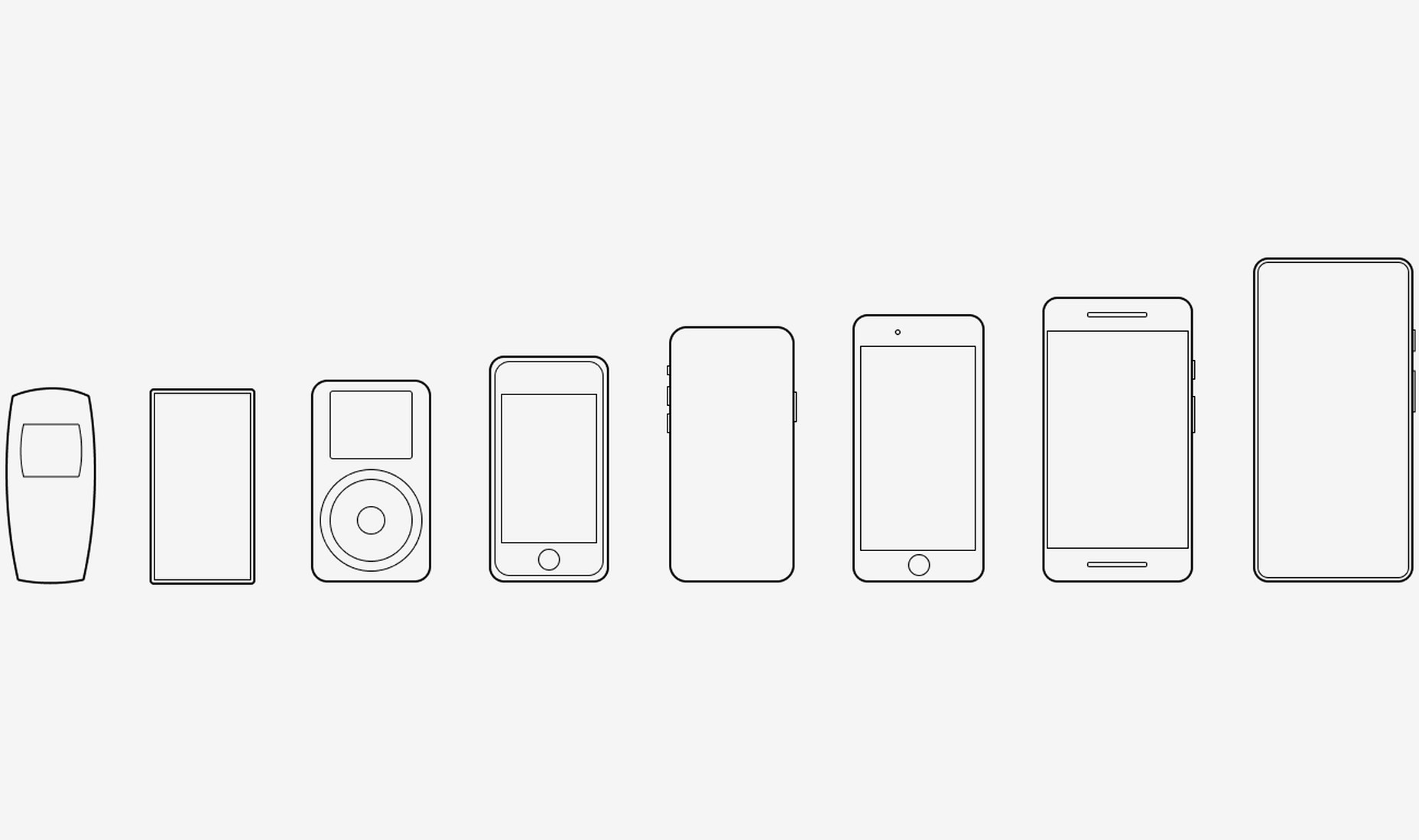

The Beauty Of Vintage Control Panels

An old phone with a dial plate, a tape deck with a grid of buttons, an electricity control room with hundreds of bulbs — vintage electronics have a fascinating charm to them. In praise of all those dials, toggles and buttons that made and shaped the tech design of the past century, Stephen Coles and Norman Hathaway dedicated a Tumblog solely to vintage control panels.

As you’ll see, browsing the collection feels like opening a time capsule. Apart from car dashboards and tech magazine covers from the 80s that still seem (fairly) familiar, you’ll find gems like four-button remote controls from the 40s and retro-futuristic concepts, among them a smartwatch from the 80s that is essentially a shrunken PC worn on the wrist. A fun journey through the history of interface design that leaves us wondering what people will think of our state-of-the-art gadgets and UIs 50 years from now.

Little Moments Of Happiness

Have you ever cooled off a lion with a fan? It might sound weird, but, well, we have. And what can we say? The lion loved it! The refreshing breeze made his mane dance and brought a big smile to his face. Don’t believe it? Well, go ahead and try it for yourself.

The lion is part of the WebGL project “Moments of Happiness”, which was brought to life by EPIC Agency. He and five of his animal friends (a sneezing dragon, a playful cat, a paranoid bird, a valorous rabbit, and a mighty fish) are bound to put a smile on your face, too, as you interact with them. To breathe life into this odd yet lovable bunch, the experiments use Three.js and the GSAP library. If you want to take a closer look under the hood, the source code is available on CodePen. Watch out, though: the experiments are not fully optimized and might not work in some browsers or on some devices.

Monochromatic Eye Candy

Who doesn’t love some good eye candy? If you need some fresh inspiration, be sure to stop by the Tumblr of The Afrix. Curated by designer Tom Wysocki, the Tumblr resembles a well-balanced exhibition of opposites (black and white, strict geometry and flowing, organic shapes) that join up to build a harmonious whole.

Among the works, you’ll find actual designs for portfolio websites and detailed illustrations, but also rather abstract and seemingly random digital experiments. It’s that element of the unforeseen that makes the showcase so refreshing despite its monochromatic color palette. Beautiful works of art with a mysterious touch.

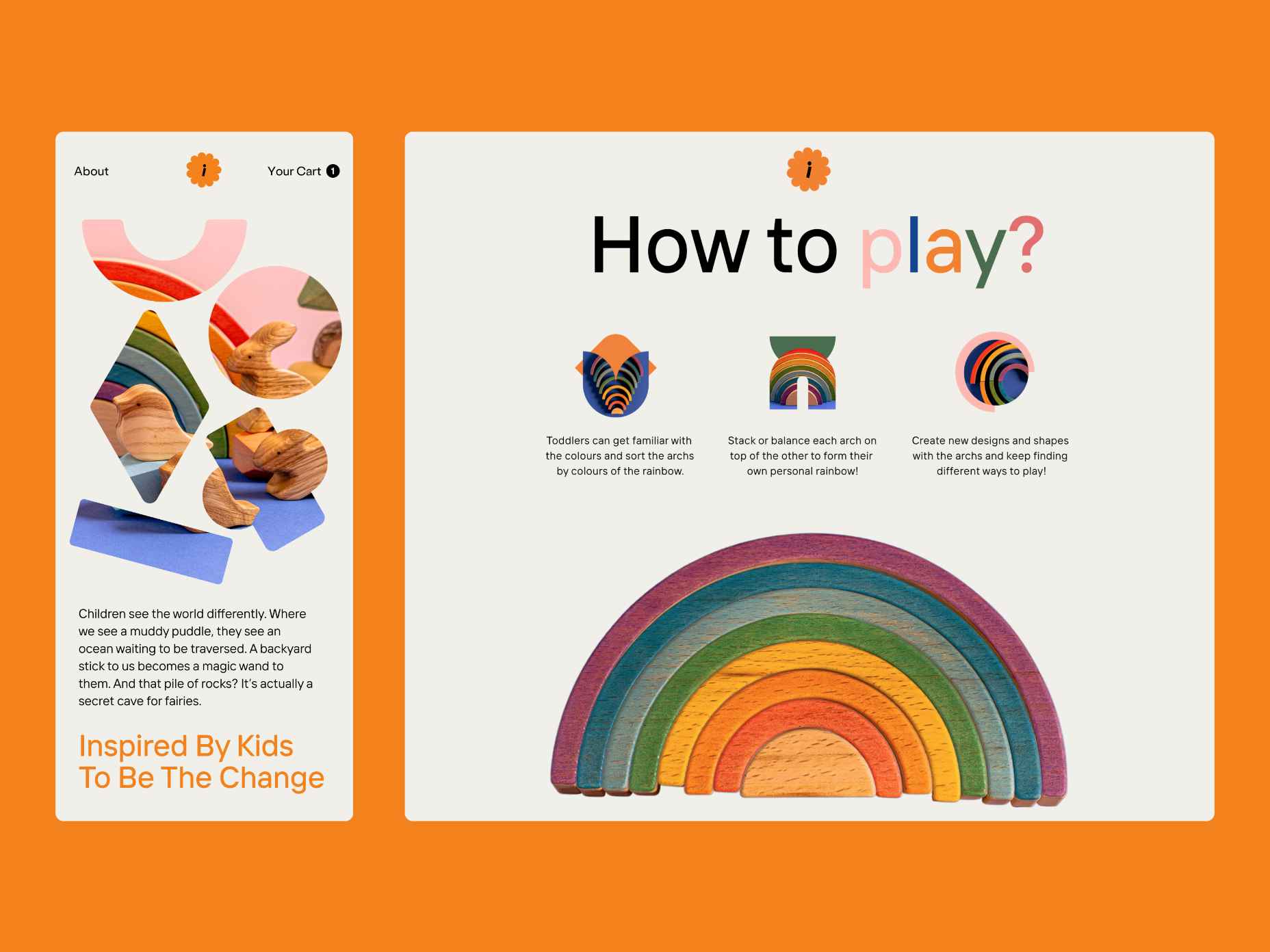

An Alphabetical Adventure

“A” is for “Albert”, “B” is for “Bounce”, “C” is for “Cowabunga”. If you have no idea what all of this is about, no worries, we’ll tell you: it’s the beginning of a very special piece of eye candy. Brought to life by design agency Studio Lovelock, “A Is For Albert” uses an animated alphabet to explore the moments of happiness (and the little mishaps) that life with kids brings along.

Each letter from A to Z tells the story of how Albert, a blond little boy, explores the world in his own cute yet chaotic (and, from his parents’ perspective, sometimes maybe even a bit annoying) way. He decorates the living room wallpaper with his brush artworks, for example, and shows his love for the family cat by hugging it a bit too tightly. Simple geometric shapes and a soft color palette are all the project needs to breathe life into Albert’s (and his parents’) everyday adventures and make us smile.

Blending Past And Present

Maps can do more than help us find our way. They are witnesses of their time, and when we look at old maps, it’s like taking a trip back to long-forgotten days. Now imagine that you had a magic spyglass that could show you what your neighborhood looked like 100 years ago. You’d only need to get out a recent map, hover your spyglass over it, and see what has changed.

Well, actually, that’s possible. The National Library of Scotland provides a browser-based tool that lets you compare the same area on a recent and a vintage map simply by looking through a (digital) spyglass. The service works for maps of Great Britain, Scotland, England, and Wales. A fantastic way to see the world (and maybe even your neighborhood) from a different perspective.

Do You Have The Design Eye?

So, you think no one is better than you at assessing whether something is centered or slightly off? Well, then here’s a challenge for you: It’s Centred That. The little game created by the folks at the UX design and web development studio Supremo puts your design eye to the ultimate test: you’re presented with shapes and need to decide whether the dot is placed in the center. But beware: what sounds easy is actually harder than you’d think. Will you make it through all 10 levels?
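The game doesn’t spell out its exact rule for what counts as “centred”. One plausible interpretation, assumed here, is that the dot should sit on the shape’s area centroid (its balance point), within a small tolerance. The sketch below computes that centroid for a polygon with the shoelace formula; the function names and the 2-pixel tolerance are hypothetical and not taken from the game itself.

```python
import math

Point = tuple[float, float]


def polygon_centroid(vertices: list[Point]) -> Point:
    """Area centroid of a simple (non-self-intersecting) polygon.

    Uses the shoelace formula: each edge contributes a cross product,
    and their sum is twice the signed area of the polygon.
    """
    twice_area = 0.0
    sum_x = sum_y = 0.0
    n = len(vertices)
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]  # wrap around to close the polygon
        cross = x0 * y1 - x1 * y0
        twice_area += cross
        sum_x += (x0 + x1) * cross
        sum_y += (y0 + y1) * cross
    if twice_area == 0:
        raise ValueError("degenerate polygon has zero area")
    # Cx = sum_x / (6A) with A = twice_area / 2, hence the factor of 3.
    return sum_x / (3 * twice_area), sum_y / (3 * twice_area)


def is_centered(vertices: list[Point], dot: Point, tolerance: float = 2.0) -> bool:
    """Hypothetical rule: the dot counts as centered if it lies within
    `tolerance` units (e.g. pixels) of the shape's centroid."""
    cx, cy = polygon_centroid(vertices)
    return math.hypot(dot[0] - cx, dot[1] - cy) <= tolerance


triangle = [(0, 0), (6, 0), (0, 6)]
print(polygon_centroid(triangle))     # (2.0, 2.0)
print(is_centered(triangle, (2, 2)))  # True
```

Because the signed area and the weighted sums flip sign together, the result is the same whether the vertices are listed clockwise or counterclockwise.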

Patterns In Islamic Art

The Islamic world has brought forth an incredibly rich heritage of architectural decoration, one that, as David Wade points out, deserves to be better known and has a lot to offer, and not only to art historians. To make its beauty accessible to everyone, he started Pattern in Islamic Art, a showcase of more than 4,000 images of patterns and other design features drawn from this artistic tradition. Whether you are up for some eye candy or want to investigate the underlying construction of the complex geometries, the site is a real treasure chest.

Print Design Inspiration From The Past

Typography, layout, color, patterns — vintage magazines provide an endless source of inspiration. If you’re up for some eye candy, the folks at Present & Correct have collected a selection of print design goodies over time.

Among them are covers from the East German design magazine Form + Zweck, which was published between 1956 and 1990, as well as covers of Switzerland’s oldest typographic journal, Typographische Monatsblätter. The Japanese magazine Industrial Art News, with its bold and vibrant cover art, is also part of the collection. For some more contemporary inspiration, be sure to also check out the site of the Japanese IDEA magazine, where you can peek inside past issues and even browse them by keyword. Eye candy to get lost in.

A Curated Gallery Of Patterns

When bold colors meet subtle palettes, organic curves appear next to sharp-edged geometric forms, and minimalist designs face playful artworks, inspiration isn’t far. If you’re up for a surprise bag of inspiration, Pattern Collect is for you. The site curates beautifully illustrated patterns created by designers from across the globe.

You can browse the showcase by tag and, if you like an artwork, a link takes you to the original on Dribbble or Behance where you can learn more about the illustrator and their work. Who knows, maybe this will even turn out to be the opportunity to find creative talent to work with on an upcoming project?

A Trip Back To The Early Days Of Computing

In the mood for some tech nostalgia? Well, then PCjs will be your kind of thing. The open-source project revives the times when computers came with a monochrome display and ran at 4.77 MHz with 64 KB of RAM. And the best part: it’s not just a showcase; you can actually interact with the machines right in your browser. The simulations of the original IBM PC from 1981 and the OSI Challenger 1P from 1978 were written entirely in JavaScript and require no additional plugins: no Java, no Flash.

The preconfigured machines are ready to run BASIC, DOS, Windows 1.01, and assorted non-DOS software, and if that’s not enough control for you yet, you can even build your own PC. The goal of the project is to help people understand how these early computers worked and to make it easy to experiment with them. It also provides a platform for running and analyzing older software. Now that’s really a trip down memory lane.

A Rainbow Of Cover Artwork

By pairing hex color values with album cover art of 2020, you’ll have the foundation for a very special project: Album Colors Of The Year. It arranges some of last year’s album releases by color to create a rainbow of cover artwork.

Lady Gaga’s album “Chromatica”, for instance, comes in #ed4c73, Suuns’ “Fiction” shines in #e489b3, and Avalon Emerson’s “DJ-Kicks” screams #f8bb04. At a time when album covers often go rather unnoticed in the corner of our smartphone screens, it is nice to see their artwork in the spotlight for a change. A great place to seek fresh inspiration, or just to discover some new tunes to get you through a lengthy coding session.
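The site doesn’t explain how it orders the covers. As a rough sketch of the idea, assuming each album has already been reduced to a single dominant hex color (that extraction step is not shown), sorting by hue is one simple way to lay the covers out as a rainbow. The function names are hypothetical, and this is not the site’s actual code; it only uses Python’s standard `colorsys` module.

```python
import colorsys


def hex_to_hue(hex_color: str) -> float:
    """Hue of a '#rrggbb' color as a fraction of the color wheel, in [0, 1)."""
    value = hex_color.lstrip("#")
    r, g, b = (int(value[i:i + 2], 16) / 255 for i in (0, 2, 4))
    hue, _lightness, _saturation = colorsys.rgb_to_hls(r, g, b)
    return hue


def rainbow_order(covers: dict[str, str]) -> list[str]:
    """Sort album titles by the hue of their (assumed) dominant cover color."""
    return sorted(covers, key=lambda title: hex_to_hue(covers[title]))


covers = {
    "Chromatica": "#ed4c73",
    "Fiction": "#e489b3",
    "DJ-Kicks": "#f8bb04",
}
print(rainbow_order(covers))  # ['DJ-Kicks', 'Fiction', 'Chromatica']
```

Hue is circular, so pinks and reds just short of 360° (“Chromatica” at roughly 346° and “Fiction” at roughly 332°) sort after the yellow of “DJ-Kicks” at about 45°. Grays have no meaningful hue at all (`colorsys` reports 0 for them), so a real layout would probably need to place them separately.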

Teletext Time Travel

Do you remember the times when you switched to teletext for the weather forecast or the sports results? The loud colors on a black background, the pixel art graphics, and the flashing text? (Well, you might not, and that’s perfectly fine!) The Teletext Museum is the perfect place to revive these memories, or to discover them for the first time (if you live outside Europe, for example).

If we didn’t have the web, most of us would still be teletext designers and developers, since essentially each teletext page is a box with content in it. Sounds familiar? Well, the gallery of images from teletext services around the world illustrates how the interface design has evolved over time, and a timeline gives you more information on what exactly changed and how.

If you ever wanted to take on the role of a teletext designer, well, you can do that, too. Jason Robertson, who recovers old teletext data from VHS cassettes in a complicated and time-consuming process, provides a plethora of teletext pages from the ’80s and ’90s. Some of them can be edited right in the browser. The process takes some getting used to, but it’s definitely a fun trip back in time.

The Lives Of Famous Painters

When we hear names like Picasso, Dalí, or Miró, we immediately remember some of their paintings. But what do we actually know about the artists behind the masterpieces? About their lives and loves, the events that shaped them and their works? To visualize painters’ lives, information designer Giorgia Lupi and her team at Accurat teamed up with illustrator Michaela Buttignol. The result of the collaboration is a stunning series of minimalist infographics that boil the biographies of ten famous painters down to their cornerstones.

The visualizations depict key moments (births, deaths, love affairs, marriages, children, travels) but also interesting tidbits such as astrological sign and left- or right-handedness, as well as connections and influences. By picking up the characteristic colors and other stylistic preferences of each artist, the designs also reflect the painters’ styles. A fun way to dive deeper into the history of art. If you’d like to learn more about creating engaging infographics like this one, you should also check out Giorgia Lupi’s article on the aesthetics of data narratives.

The Museum Of The World

The Rosetta Stone, the Parthenon sculptures, Egyptian mummies: all of them cornerstones of human culture that can be admired in the British Museum today. Spanning more than two million years of human history, its collection is exceptional and one of the largest of its kind. To make that cultural heritage accessible to more people around the world, the British Museum partnered with Google. The result: the Museum Of The World.

The WebGL-powered desktop experience explores connections between the world’s cultures by showcasing exhibits that shaped human history. As you travel deeper into the history of mankind with each scroll, you can browse the artefacts according to type and area of origin — no matter where in the world they might be located. Stunning.

Bringing Imaginations To Life

Guess what happens when 100 kids draw monsters and 100 illustrators bring those imaginations to life? Probably something hilarious and very refreshing. Katherine Johnson did just that: she invites elementary school students to draw monsters, and once their creations have taken shape, she works with illustrators to bring them to life in their unique artistic styles.

The ultimate goal of The Monster Project, as it’s called, is to help children recognize the value of their ideas and get them excited about the creative potential of their own minds. At the moment, the site features over 100 monsters created by over 100 artists from all over the world. Now, are you feeling inspired already?

Every day design fans submit incredible industry stories to our sister-site, Webdesigner News. Our colleagues sift through it, selecting the very best stories from the design, UX, tech, and development worlds and posting them live on the site.

It’s normal to pull up sharp in front of a problem; after all, if there was a known solution, it wouldn’t be a problem. But knowing that it’s normal, doesn’t make encountering problems any less frustrating. So how do we avoid sitting in front of a UX problem for hours, achieving nothing?

Currently, Bootstrap is among the most popular frameworks in the developer community. One of the most straightforward solutions for front-end development methods available, Bootstrap allows users to access convenient CSS and HTML components for forms, typography, sliders, navigation, and everything else you might need.
