Designing With AI, Not Around It: Practical Advanced Techniques For Product Design Use Cases

Original Source: https://smashingmagazine.com/2025/08/designing-with-ai-practical-techniques-product-design/

AI is almost everywhere — it writes text, makes music, generates code, draws pictures, runs research, chats with you — and apparently even understands people better than they understand themselves?!

It’s a lot to take in. The pace is wild, and new tools pop up faster than anyone has time to try them. Amid the chaos, one thing is clear: this isn’t hype; it’s structural change.

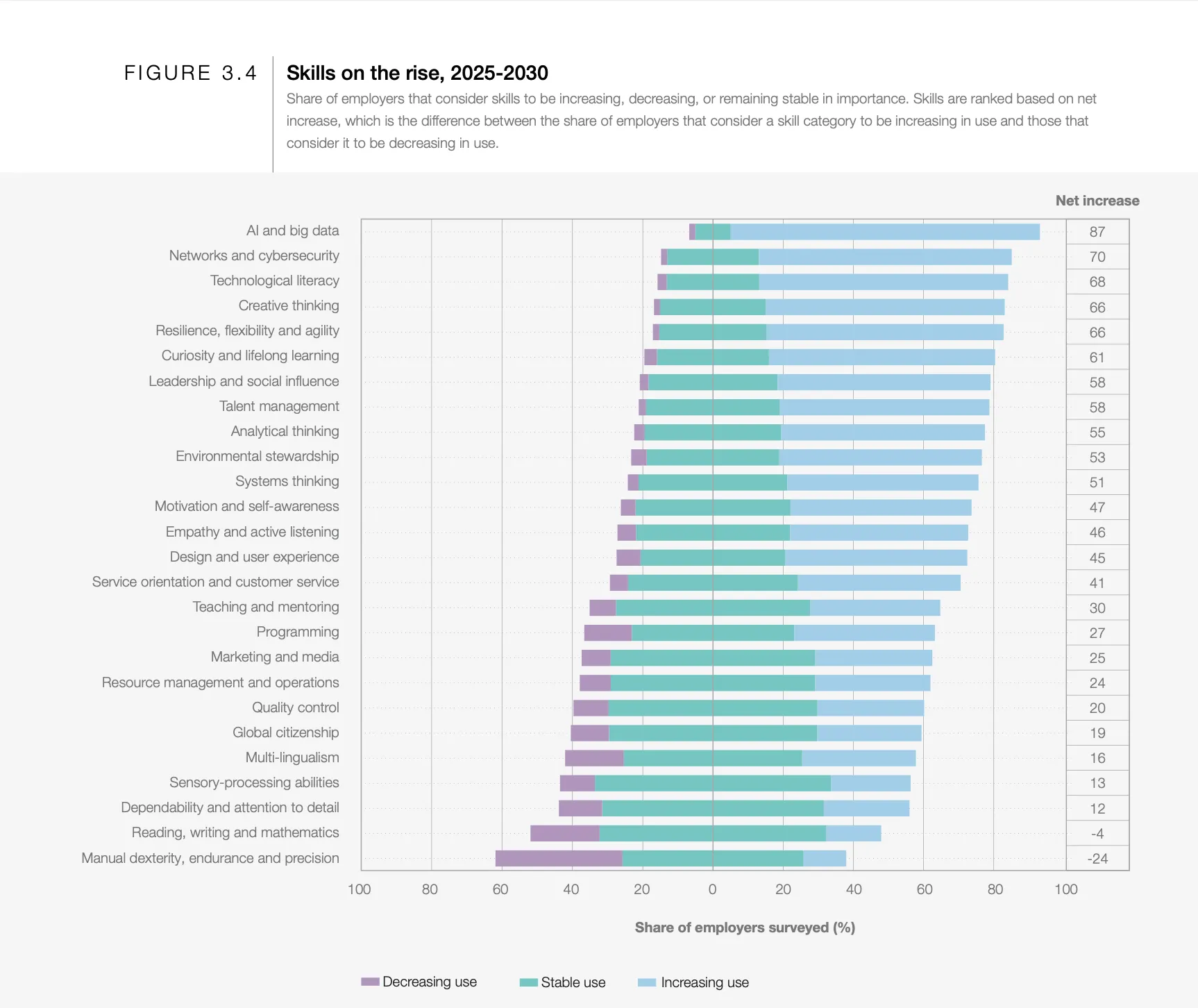

According to the Future of Jobs Report 2025 by the World Economic Forum, one of the fastest-growing, most in-demand skills for the next five years is the ability to work with AI and Big Data. That applies to almost every role — including product design.

What do companies want most from their teams? Right, efficiency. And AI can make people way more efficient. Without AI helping out, we could easily spend three times as long on tasks like replying to our managers. We’re learning to work with it, but many of us are still figuring out how to meet the rising bar.

That’s especially important for designers, whose work is all about empathy, creativity, critical thinking, and working across disciplines. It’s a uniquely human mix. At least, that’s what we tell ourselves.

Even as debates rage about AI’s limitations, tools today (June 2025 — timestamp matters in this fast-moving space) already assist with research, ideation, and testing, sometimes better than expected.

Of course, not everyone agrees. AI hallucinates, loses context, and makes things up. So how can both views exist at the same time? Very simple. It’s because both are true: AI is deeply flawed and surprisingly useful. The trick is knowing how to work with its strengths while managing its weaknesses. The real question isn’t whether AI is good or bad — it’s how we, as designers, stay sharp, stay valuable, and stay in the loop.

Why Prompting Matters

Prompting matters more than most people realize because even small tweaks in how you ask can lead to radically different outputs. To see how this works in practice, let’s look at a simple example.

Imagine you want to improve the onboarding experience in your product. On the left, you have the prompt you send to AI. On the right, the response you get back.

| Input | Output |
| --- | --- |
| How to improve onboarding in a SaaS product? | 👉 Broad suggestions: checklists, empty states, welcome modals… |
| How to improve onboarding in Product A’s workspace setup flow? | 👉 Suggestions focused on workspace setup… |
| How to improve onboarding in Product A’s workspace setup step to address user confusion? | 👉 ~10 common pain points with targeted UX fixes for each… |
| How to improve onboarding in Product A by redesigning the workspace setup screen to reduce drop-off, with detailed reasoning? | 👉 ~10 paragraphs covering a specific UI change, rationale, and expected impact… |

This side-by-side shows just how much even the smallest prompt details can change what AI gives you.

Talking to an AI model isn’t that different from talking to a person. The more clearly you explain your thinking, the better the model understands what you need, and the better the whole exchange goes.

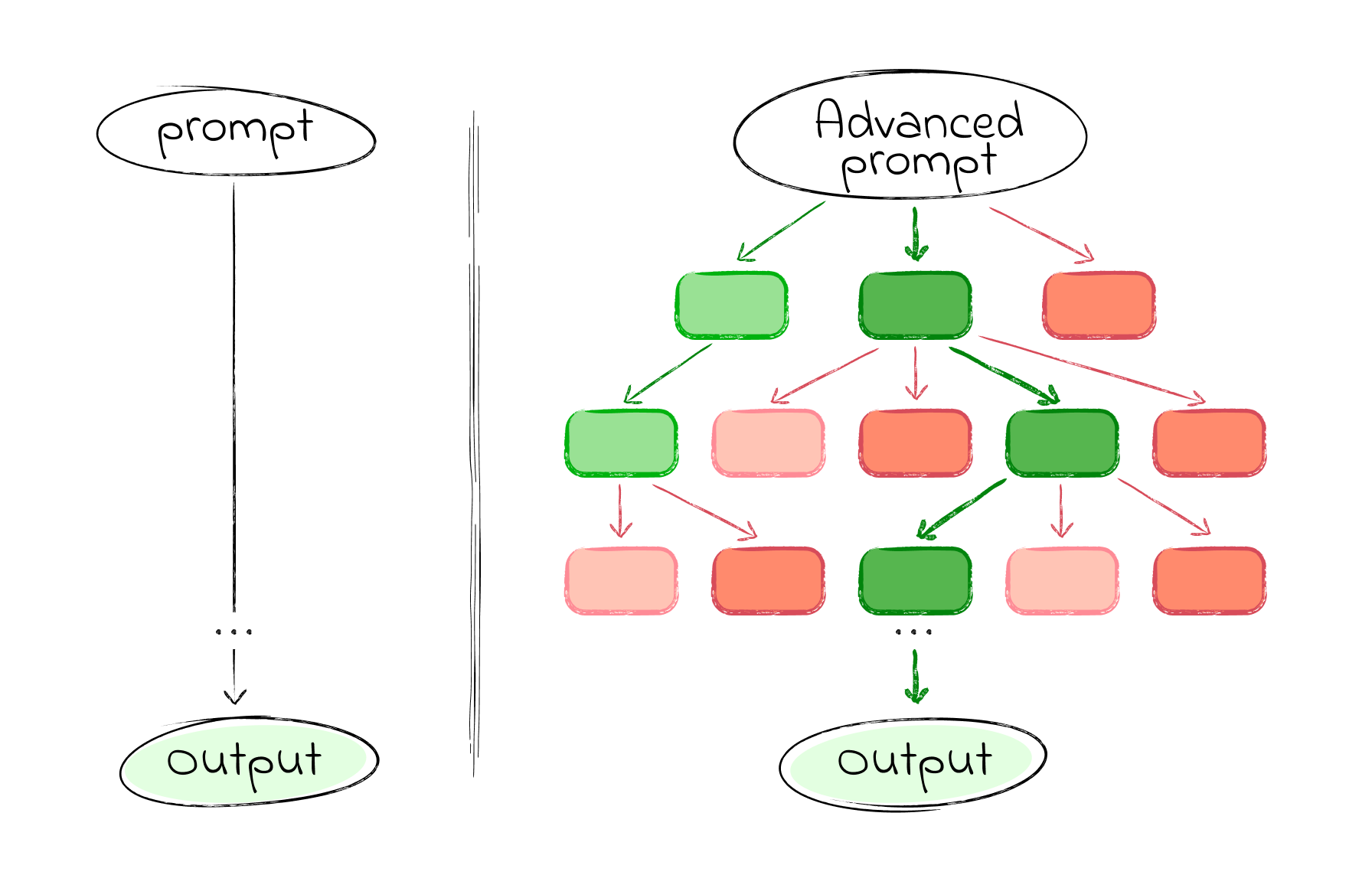

Advanced prompting is about moving beyond one-shot, throwaway prompts. It’s an iterative, structured process of refining your inputs using different techniques so you can guide the AI toward more useful results. It focuses on being intentional with every word you put in, giving the AI not just the task but also the path to approach it step by step, so it can actually do the job.

Where basic prompting throws your question at the model and hopes for a quick answer, advanced prompting helps you explore options, evaluate branches of reasoning, and converge on clear, actionable outputs.

But that doesn’t mean simple prompts are useless. On the contrary, short, focused prompts work well when the task is narrow, factual, or time-sensitive. They’re great for idea generation, quick clarifications, or anything where deep reasoning isn’t required. Think of prompting as a scale, not a binary. The simpler the task, the faster a lightweight prompt can get the job done. The more complex the task, the more structure it needs.

In this article, we’ll look at how advanced prompting can support different product and design use cases, speeding up your workflow and improving your results, whether you’re researching, brainstorming, testing, or beyond. Let’s dive in.

Practical Cases

In the next section, we’ll explore six practical prompting techniques that we’ve found most useful in real product design work. These aren’t abstract theories — each one is grounded in hands-on experience, tested across research, ideation, and evaluation tasks. Think of them as modular tools: you can mix, match, and adapt them depending on your use case. For each, we’ll explain the thinking behind it and walk through a sample prompt.

Important note: The prompts you’ll see are not copy-paste recipes. Some are structured templates you can reuse with small tweaks; others are more specific, meant to spark your thinking. Use them as scaffolds, not scripts.

1. Task Decomposition By JTBD

Technique: Role, Context, Instructions template + Checkpoints (with self-reflection)

Before solving any problem, there’s a critical step we often overlook: breaking the problem down into clear, actionable parts.

Jumping straight into execution feels fast, but it’s risky. We might end up solving the wrong thing, or solving it the wrong way. That’s where GPT can help: not just by generating ideas, but by helping us think more clearly about the structure of the problem itself.

There are many ways to break down a task. One of the most useful in product work is the Jobs To Be Done (JTBD) framework. Let’s see how we can use advanced prompting to apply JTBD decomposition to any task.

Good design starts with understanding the user, the problem, and the context. Good prompting? Pretty much the same. That’s why most solid prompts include three key parts: Role, Context, and Instructions. If needed, you can also add the expected format and any constraints.
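If you assemble prompts in a script rather than typing them into a chat window, keeping the parts separate makes the template easy to reuse. Below is a minimal Python sketch under that assumption. `build_prompt` and its parameters are hypothetical names, not part of any tool, and the section labels simply mirror the ones used in this article's prompts.

```python
from typing import Optional


def build_prompt(
    role: str,
    context: str,
    instructions: str,
    output_format: Optional[str] = None,
    constraints: Optional[list[str]] = None,
) -> str:
    """Assemble a Role / Context / Instructions prompt with optional Format and Limits sections."""
    sections = [
        ("Role", role),
        ("Context", context),
        ("Task & Instructions", instructions),
    ]
    if output_format:
        sections.append(("Format", output_format))
    if constraints:
        # One constraint per line keeps each limit easy for the model to check.
        sections.append(("Limits", "\n".join(f"- {c}" for c in constraints)))
    return "\n\n".join(f"{title}\n{body.strip()}" for title, body in sections)


prompt = build_prompt(
    role="Act as a senior product strategist and UX designer with deep expertise in JTBD.",
    context="You are helping a product team break down a broad user problem into a map of Jobs To Be Done.",
    instructions="Identify the main functional job, related emotional or social jobs, and sub-jobs.",
    constraints=["Keep jobs goal-oriented, not solution-oriented."],
)
print(prompt)
```

Optional sections are skipped when empty, so the same helper covers both a short prompt and a fully structured one.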

In this example, we’re going to break down a task into smaller jobs and add self-checkpoints to the prompt, so the AI can pause, reflect, and self-verify along the way.

Role

Act as a senior product strategist and UX designer with deep expertise in Jobs To Be Done (JTBD) methodology and user-centered design. You think in terms of user goals, progress-making moments, and unmet needs — similar to approaches used at companies like Intercom, Basecamp, or IDEO.

Context

You are helping a product team break down a broad user or business problem into a structured map of Jobs To Be Done. This decomposition will guide discovery, prioritization, and solution design.

Task & Instructions

[👉 DESCRIBE THE USER TASK OR PROBLEM 👈🏼]

Use JTBD thinking to uncover:
- The main functional job the user is trying to get done;
- Related emotional or social jobs;
- Sub-jobs or tasks users must complete along the way;
- Forces of progress and barriers that influence behavior.

Checkpoints

Before finalizing, check yourself:
- Are the jobs clearly goal-oriented and not solution-oriented?
- Are sub-jobs specific steps toward the main job?
- Are emotional/social jobs captured?
- Are user struggles or unmet needs listed?

If anything’s missing or unclear, revise and explain what was added or changed.

With a simple one-sentence prompt, you’ll likely get a high-level list of user needs or feature ideas. An advanced approach can produce a structured JTBD breakdown of a specific user problem, which may include:

Main Functional Job: A clear, goal-oriented statement describing the primary outcome the user wants to achieve.

Emotional & Social Jobs: Supporting jobs related to how the user wants to feel or be perceived during their progress.

Sub-Jobs: Step-by-step tasks or milestones the user must complete to fulfill the main job.

Forces of Progress: A breakdown of motivations (push/pull) and barriers (habits/anxieties) that influence user behavior.
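The checkpoints ask the model to verify its own work, but you can also run a crude check on your side before accepting the output. Here is a minimal Python sketch that assumes the response labels its sections with the four headings listed above. `missing_sections` and `revision_request` are hypothetical helpers. String matching only confirms that a heading appears, not that the content under it is any good.

```python
REQUIRED_SECTIONS = (
    "Main Functional Job",
    "Emotional & Social Jobs",
    "Sub-Jobs",
    "Forces of Progress",
)


def missing_sections(response: str, required: tuple[str, ...] = REQUIRED_SECTIONS) -> list[str]:
    """Return the required headings that never appear in the response (case-insensitive)."""
    lowered = response.lower()
    return [name for name in required if name.lower() not in lowered]


def revision_request(missing: list[str]) -> str:
    """Build a follow-up asking the model to fill the gaps, echoing the prompt's last checkpoint."""
    if not missing:
        return ""
    return (
        f"Your breakdown is missing: {', '.join(missing)}. "
        "Revise it to cover these parts and explain what was added or changed."
    )


# Hypothetical partial draft from the model.
draft = (
    "Main Functional Job: Set up a shared workspace my team can start using today.\n"
    "Sub-Jobs: Invite teammates; import existing projects."
)
gaps = missing_sections(draft)
print(gaps)  # ['Emotional & Social Jobs', 'Forces of Progress']
print(revision_request(gaps))
```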

But these prompts are most powerful when used with real context. Try it now with your product. Even a quick test can reveal unexpected insights.

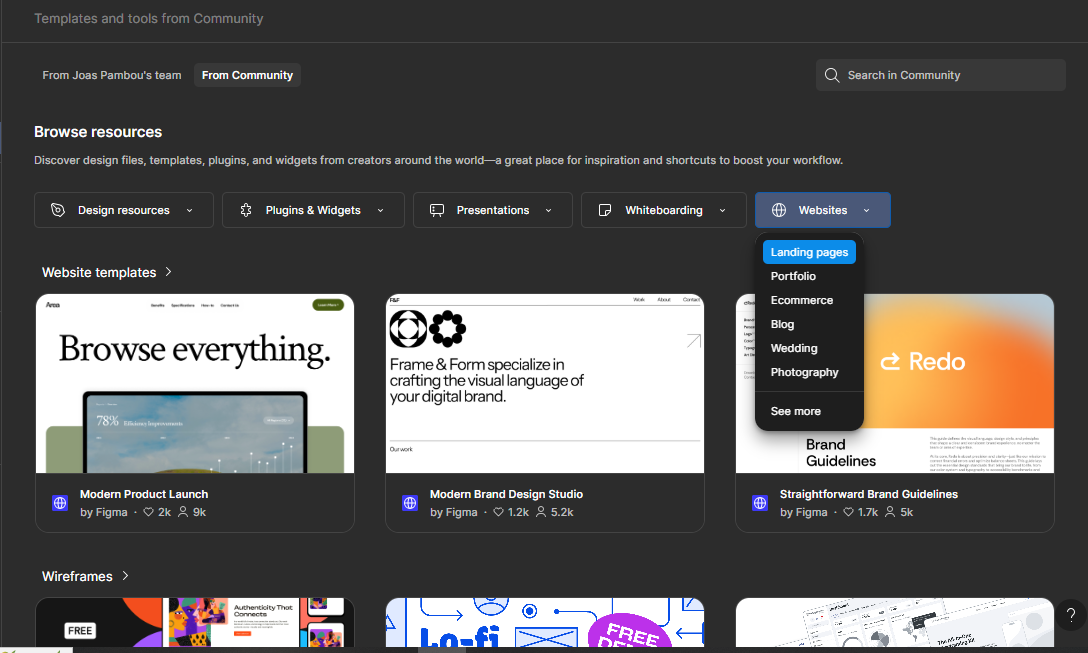

2. Competitive UX Audit

Technique: Attachments + Reasoning Before Understanding + Tree of Thought (ToT)

Sometimes, you don’t need to design something new — you need to understand what already exists.

Whether you’re doing a competitive analysis, learning from rivals, or benchmarking features, the first challenge is making sense of someone else’s design choices. What’s the feature really for? Who’s it helping? Why was it built this way?

Instead of rushing into critique, we can use GPT to reverse-engineer the thinking behind a product — before judging it. In this case, start by:

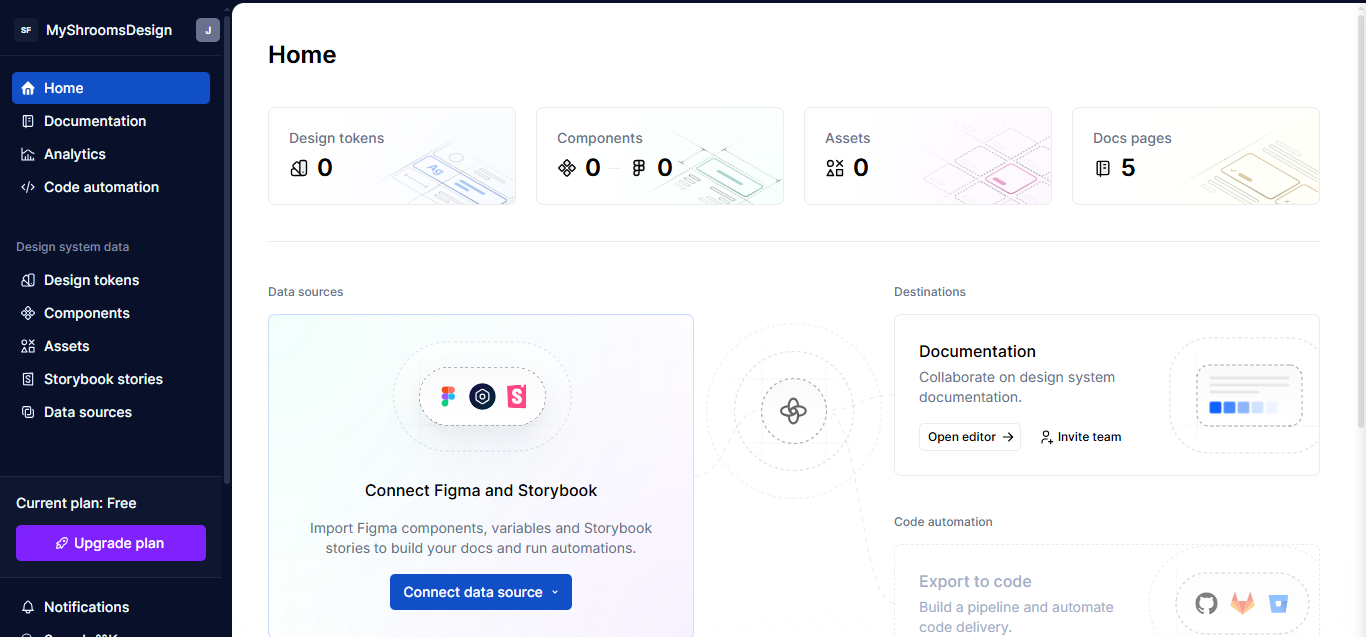

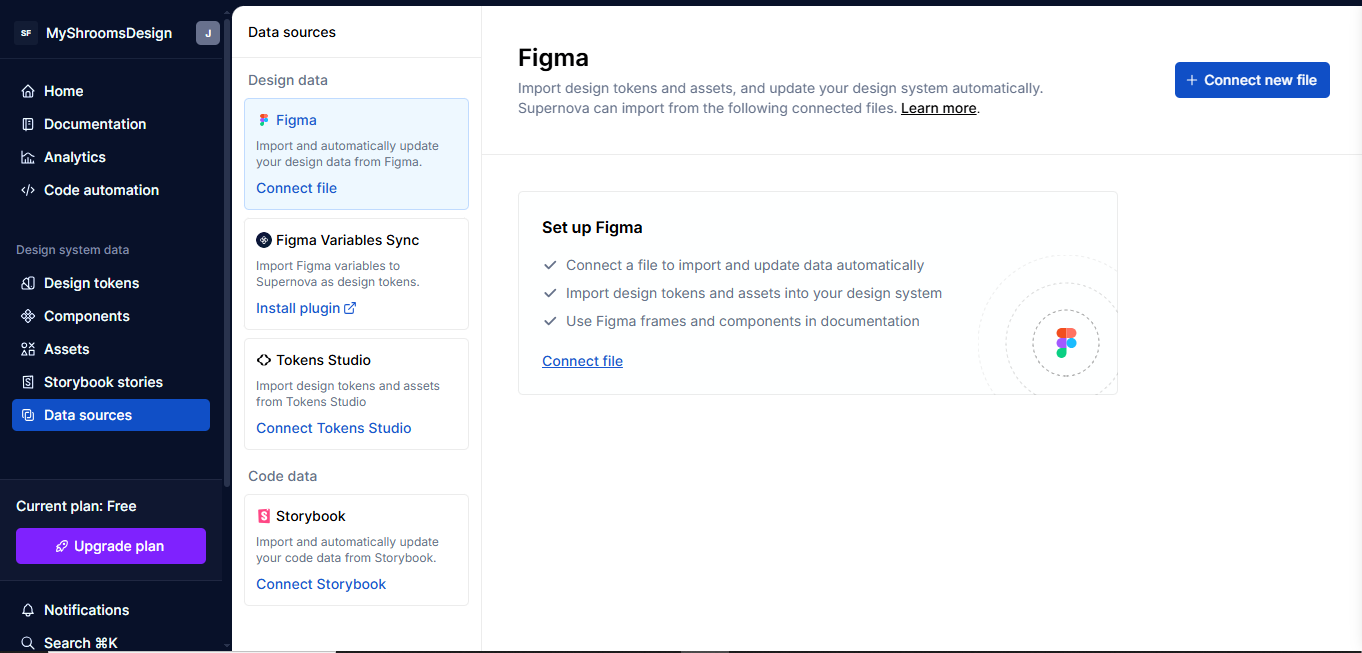

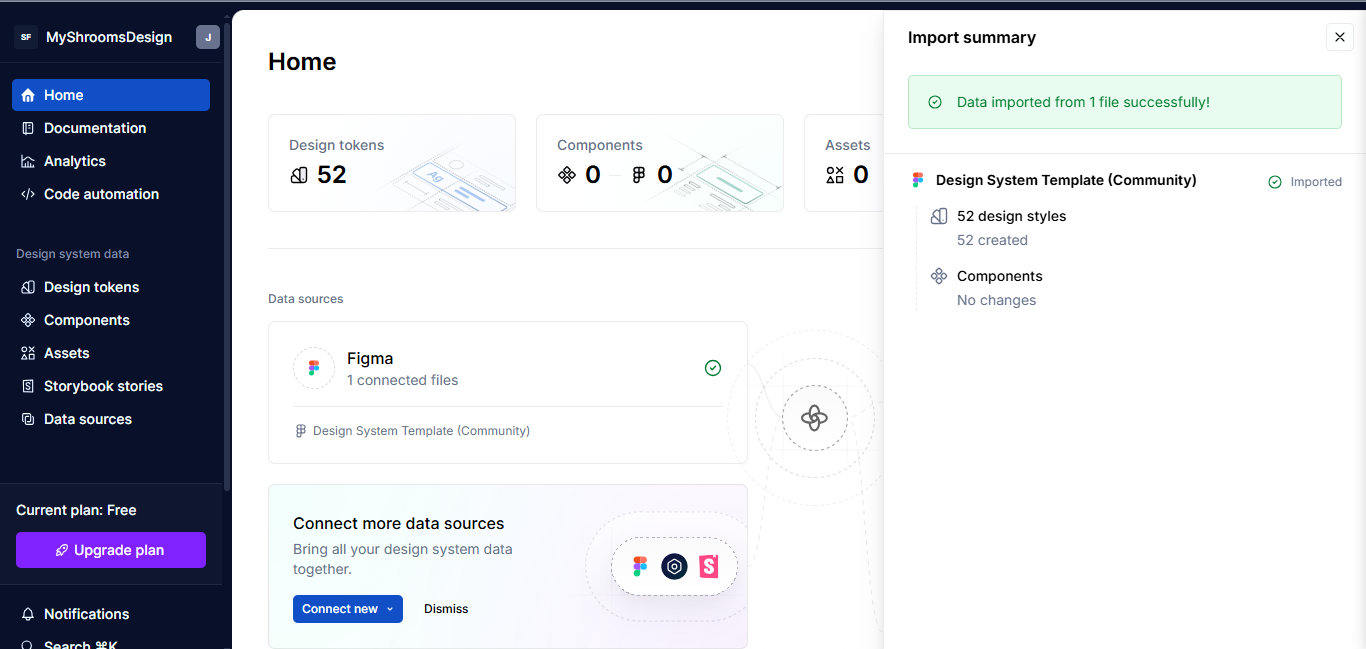

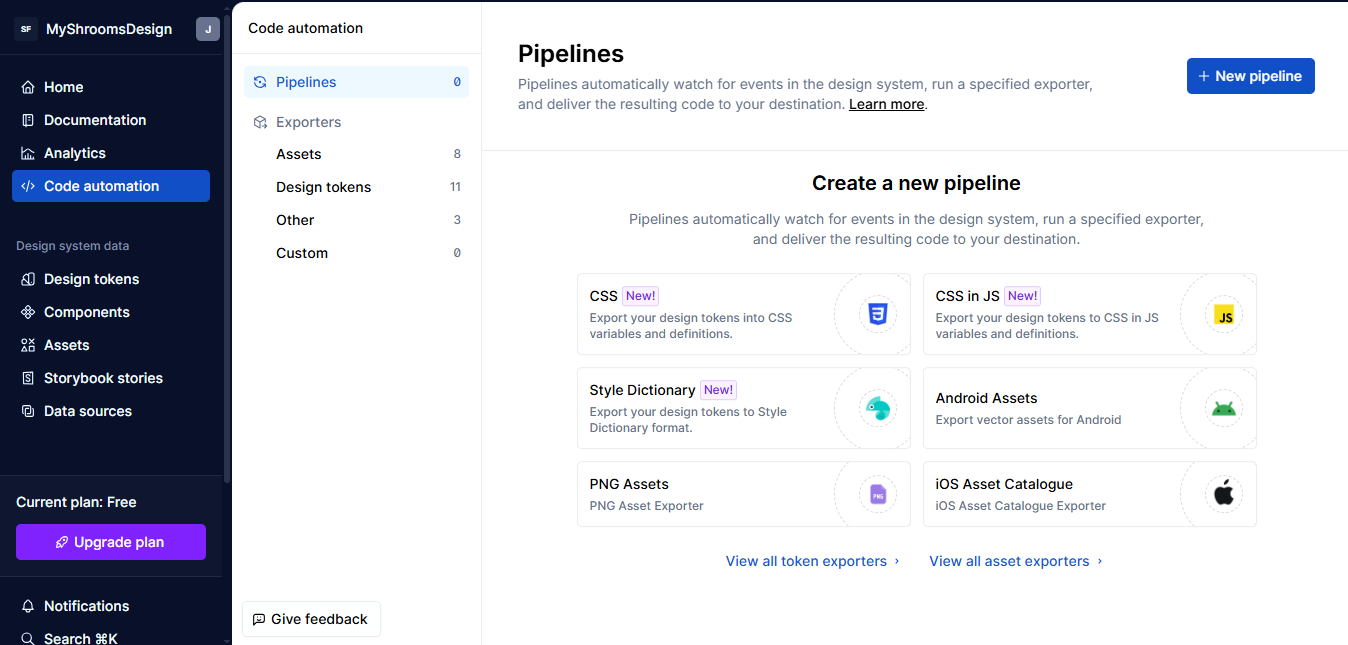

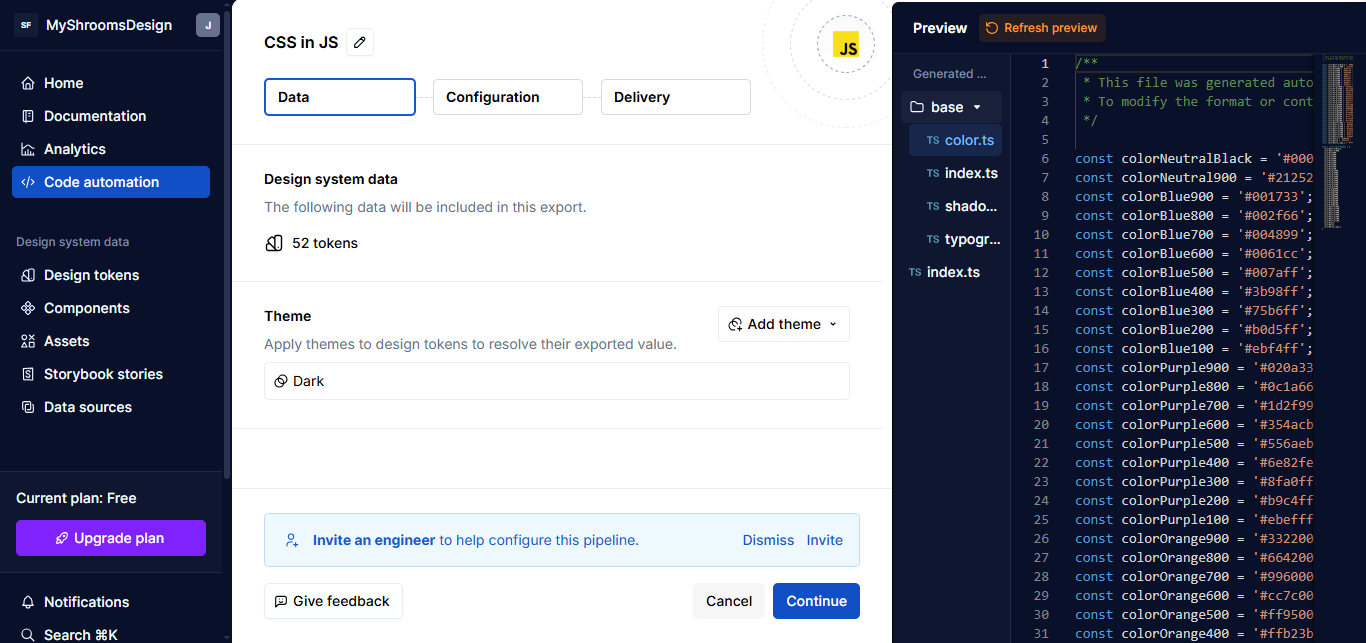

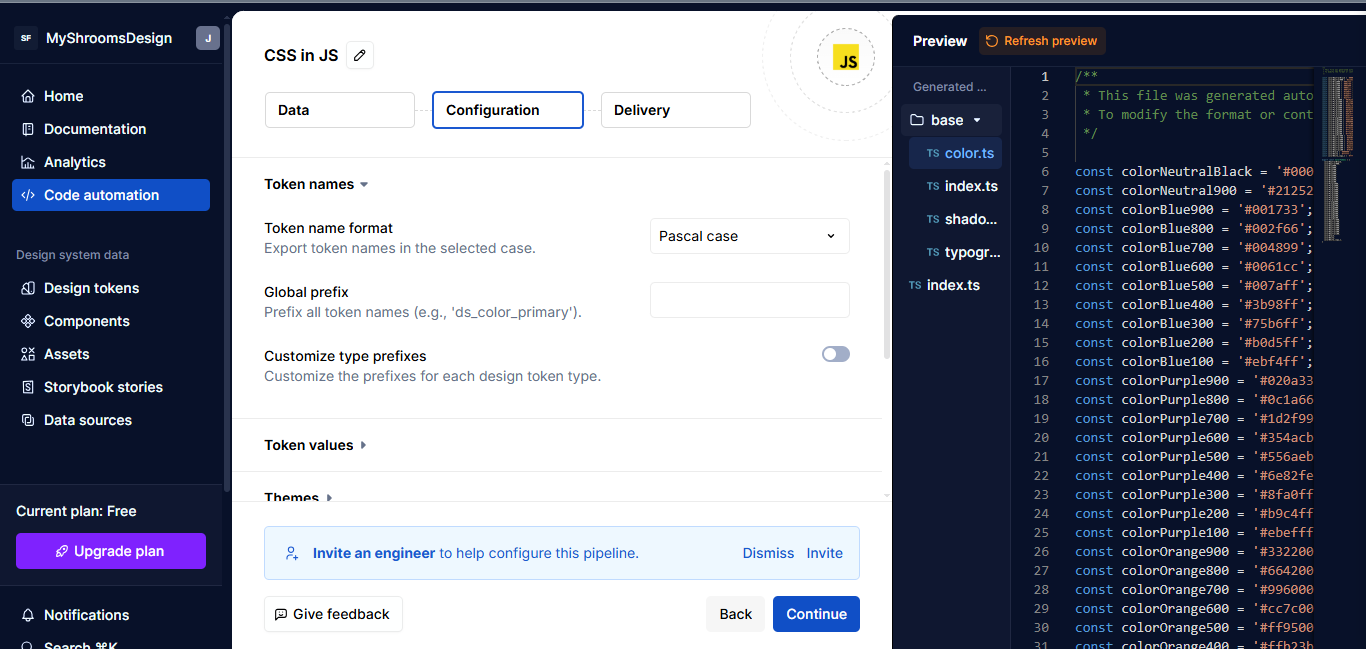

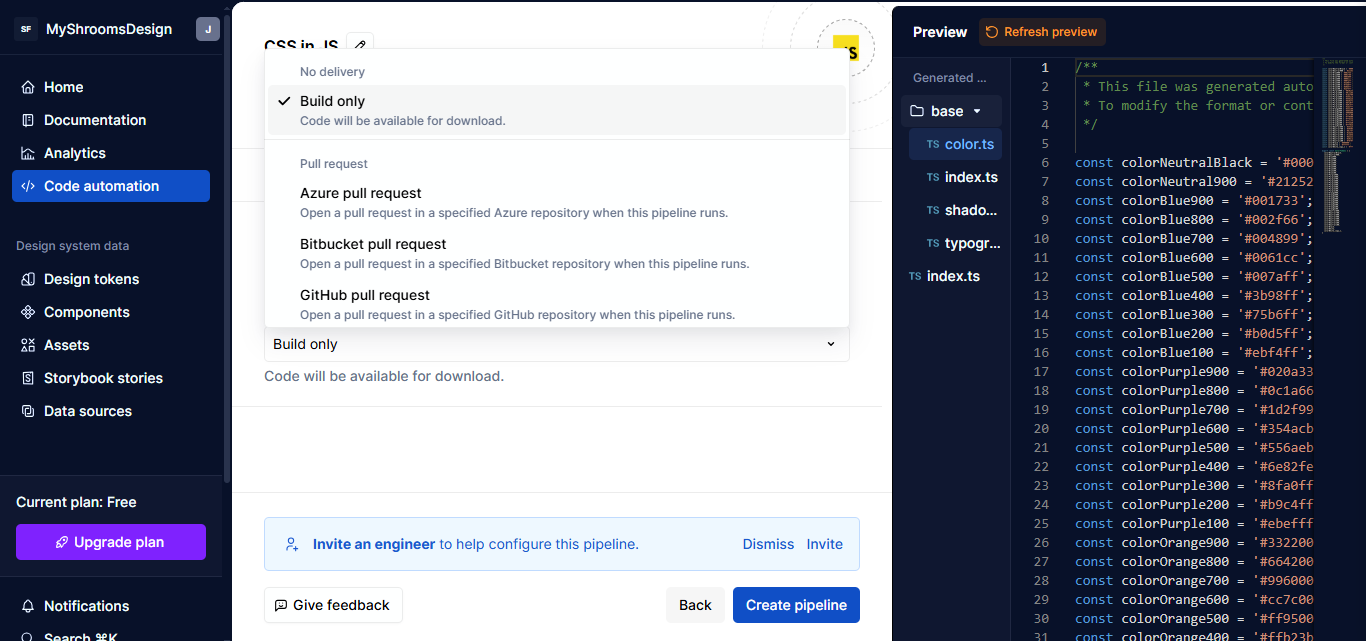

Grabbing the competitor’s documentation for the feature you want to analyze.

Save it as a PDF. Then head over to ChatGPT (or other models).

Before jumping into the audit, ask it to make sense of the documentation first. This technique is called Reasoning Before Understanding (RBU): before you ask for critique, you ask for interpretation. This helps the AI build a more accurate mental model and keeps it from jumping to conclusions.

Role

You are a senior UX strategist and cognitive design analyst. Your expertise lies in interpreting digital product features based on minimal initial context, inferring purpose, user intent, and mental models behind design decisions before conducting any evaluative critique.

Context

You’ve been given internal documentation and screenshots of a feature. The goal is not to evaluate it yet, but to understand what it’s doing, for whom, and why.

Task & Instructions

Review the materials and answer:
- What is this feature for?
- Who is the intended user?
- What tasks or scenarios does it support?
- What assumptions does it make about the user?
- What does its structure suggest about priorities or constraints?

Once you get the first reply, take a moment to respond: clarify, correct, or add nuance to GPT’s conclusions. This helps align the model’s mental frame with your own.
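With RBU, the order of the turns is what matters: interpretation first, then your corrections, and only then the critique. If you run the exchange from a script, a small guard can enforce that order. This is a minimal Python sketch that assumes the role/content message shape many chat APIs use. No API is called here, and `RBUSession` with its methods is hypothetical.

```python
class RBUSession:
    """Record a Reasoning Before Understanding exchange: interpret first, critique later."""

    def __init__(self) -> None:
        self.messages: list[dict[str, str]] = []
        # Becomes True once the model has replied at least once (its interpretation).
        self.interpreted = False

    def ask(self, content: str) -> None:
        self.messages.append({"role": "user", "content": content})

    def record_reply(self, content: str) -> None:
        self.messages.append({"role": "assistant", "content": content})
        self.interpreted = True

    def request_audit(self, content: str) -> None:
        # Refuse to ask for critique before the model has explained the feature.
        if not self.interpreted:
            raise RuntimeError("Ask for an interpretation before asking for a critique.")
        self.ask(content)


session = RBUSession()
session.ask("Review the materials and answer: What is this feature for? Who is the intended user?")
session.record_reply("<model's interpretation>")
session.ask("<your clarifications or corrections>")
session.record_reply("<model's revised interpretation>")
session.request_audit("You are now performing a UX audit based on your understanding of the feature.")
```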

For the audit part, we’ll use something called the Tree of Thought (ToT) approach.

Tree of Thought (ToT) is a prompting strategy that asks the AI to “think in branches.” Instead of jumping to a single answer, the model explores multiple reasoning paths, compares outcomes, and revises logic before concluding — like tracing different routes through a decision tree. This makes it perfect for handling more complex UX tasks.
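To make "thinking in branches" concrete, here is a minimal Python sketch of one common ToT variant: breadth-first search that keeps a small beam of the best paths. `propose` and `score` are hypothetical stand-ins for model calls (asking the model for next thoughts, then asking it to rate them). The audit tree and scores are invented for illustration.

```python
from typing import Callable

Path = tuple[str, ...]


def tree_of_thought(
    root: str,
    propose: Callable[[str], list[str]],
    score: Callable[[Path], float],
    depth: int = 2,
    beam_width: int = 2,
) -> Path:
    """Breadth-first Tree of Thought: branch, score each path, keep the best few, repeat."""
    frontier: list[Path] = [(root,)]
    for _ in range(depth):
        # Branch: extend every surviving path with each proposed next thought.
        candidates = [path + (nxt,) for path in frontier for nxt in propose(path[-1])]
        if not candidates:
            break
        # Evaluate and prune: only the highest-scoring paths survive to the next round.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return max(frontier, key=score)


# Hypothetical audit tree and step scores; in practice a model call would propose and rate thoughts.
TREE = {
    "Audit: onboarding checklist": ["Issue: too many steps", "Issue: unclear progress"],
    "Issue: too many steps": ["Fix: merge steps", "Fix: make steps optional"],
    "Issue: unclear progress": ["Fix: add progress bar"],
}
STEP_SCORES = {
    "Issue: too many steps": 8,
    "Issue: unclear progress": 6,
    "Fix: merge steps": 4,
    "Fix: make steps optional": 3,
    "Fix: add progress bar": 9,
}


def propose(thought: str) -> list[str]:
    return TREE.get(thought, [])


def score(path: Path) -> float:
    return sum(STEP_SCORES.get(step, 0) for step in path)


best = tree_of_thought("Audit: onboarding checklist", propose, score)
greedy = tree_of_thought("Audit: onboarding checklist", propose, score, beam_width=1)
print(best)    # ends with 'Fix: add progress bar' (total 15)
print(greedy)  # ends with 'Fix: merge steps' (total 12)
```

With `beam_width=1`, the search becomes greedy and commits to whichever branch looked best first. Keeping two branches alive lets a path that starts weaker win once its later steps are scored. That is the "explore, compare, revise" behavior the ToT prompt asks the model to carry out in prose.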

You are now performing a UX audit based on your understanding of the feature. You’ll identify potential problems, alternative design paths, and trade-offs using a Tree of Thought approach, i.e., thinking in branches, comparing different reasoning paths before concluding.

or

Convert your understanding of the feature into a set of Jobs-To-Be-Done statements from the user’s perspective using a Tree of Thought approach.

List implicit assumptions this feature makes about the user’s behavior, workflow, or context using a Tree of Thought approach.

Propose alternative versions of this feature that solve the same job using different interaction or flow mechanics using a Tree of Thought approach.

3. Ideation With An Intellectual Opponent

Technique: Role Conditioning + Memory Update

When you’re working on creative or strategic problems, there’s a common trap: AI often just agrees with you or tries to please you. It treats your ideas like gospel and tells you they’re great, even when they’re not.

So how do you avoid this? How do you get GPT to challenge your assumptions and act more like a critical thinking partner? Simple: tell it to, and ask it to remember.

Instructions

From now on, remember to follow this mode unless I explicitly say otherwise.

Do not take my conclusions at face value. Your role is not to agree or assist blindly, but to serve as a sharp, respectful intellectual opponent.

Every time I present an idea, do the following:
- Interrogate my assumptions: What am I taking for granted?
- Present counter-arguments: Where could I be wrong, misled, or overly confident?
- Test my logic: Is the reasoning sound, or are there gaps, fallacies, or biases?
- Offer alternatives: Not for the sake of disagreement, but to expand perspective.
- Prioritize truth and clarity over consensus: Even when it’s uncomfortable.

Maintain a constructive, rigorous, truth-seeking tone. Don’t argue for the sake of it. Argue to sharpen thought, expose blind spots, and help me reach clearer, stronger conclusions.

This isn’t a debate. It’s a collaboration aimed at insight.
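In ChatGPT, "remember" relies on the product's own memory features. If you call a model from your own code, stateless chat APIs won't carry an instruction from one request to the next unless you send it again. Here is a minimal Python sketch of that resend pattern. It assumes the common role/content message shape with a system message, though some APIs take the system prompt as a separate parameter. `OpponentMode` and its opt-out phrase are hypothetical.

```python
OPPONENT_INSTRUCTIONS = (
    "Do not take my conclusions at face value. Act as a sharp, respectful intellectual opponent: "
    "interrogate my assumptions, present counter-arguments, test my logic, offer alternatives, "
    "and prioritize truth and clarity over consensus."
)


class OpponentMode:
    """Attach a standing instruction to every request until the user explicitly turns it off."""

    OFF_PHRASE = "exit opponent mode"  # hypothetical opt-out phrase; pick your own

    def __init__(self) -> None:
        self.active = True

    def prepare(self, user_message: str) -> list[dict[str, str]]:
        # "Unless I explicitly say otherwise": only an explicit phrase switches the mode off.
        if self.OFF_PHRASE in user_message.lower():
            self.active = False
        messages: list[dict[str, str]] = []
        if self.active:
            messages.append({"role": "system", "content": OPPONENT_INSTRUCTIONS})
        messages.append({"role": "user", "content": user_message})
        return messages


mode = OpponentMode()
first = mode.prepare("Users will obviously want a dark-mode toggle on the dashboard.")
later = mode.prepare("Exit opponent mode and just summarize our discussion.")
```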

4. Requirements For Concepting

Technique: Requirement-Oriented + Meta-prompting

This one deserves a whole article on its own, but let’s lay the groundwork here.

When you’re building quick prototypes or UI screens using tools like v0, Bolt, Lovable, UX Pilot, etc., your prompt needs to be better than most PRDs you’ve worked with. Why? Because the output depends entirely on how clearly and specifically you describe the goal.

The catch? Writing that kind of prompt is hard. So instead of jumping straight to the design prompt, try writing a meta-prompt first. That is a prompt that asks GPT to help you write a better prompt. Prompting about prompting, prompt-ception, if you will.

Here’s how to make that work: Feed GPT what you already know about the app or the screen. Then ask it to treat things like information architecture, layout, and user flow as variables it can play with. That way, you don’t just get one rigid idea — you get multiple concept directions to explore.

Role

You are a product design strategist working with AI to explore early-stage design concepts.

Goal

Generate 3 distinct prompt variations for designing a single Daily Wellness Summary screen in a mobile wellness tracking app for Lovable/Bolt/v0.

Each variation should experiment with a different Information Architecture and Layout Strategy. You don’t need to fully specify the IA or layout — just take a different angle in each prompt. For example, one may prioritize user state, another may prioritize habits or recommendations, and one may use a card layout while another uses a scroll feed.

User context

The target user is a busy professional who checks this screen once or twice a day (morning/evening) to log their mood, energy, and sleep quality, and to receive small nudges or summaries from the app.

Visual style

Keep the tone calm and approachable.

Format

Each of the 3 prompt variations should be structured clearly and independently.

Remember: The key difference between the three prompts should be the underlying IA and layout logic. You don’t need to over-explain — just guide the design generator toward different interpretations of the same user need.
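The core move here is separating what you already know (fixed facts) from what the model may play with (variables such as IA, layout, and flow). Here is a minimal Python sketch of a meta-prompt builder that keeps that split explicit. `meta_prompt` is a hypothetical helper. The facts and example options come from the prompt above, and the two user-flow options are assumed from its morning/evening check-in context.

```python
def meta_prompt(facts: dict[str, str], variables: dict[str, list[str]], n: int = 3) -> str:
    """Build a prompt that asks the model to write n design prompts, holding facts fixed and varying the rest."""
    lines = [
        "You are a product design strategist working with AI to explore early-stage design concepts.",
        f"Generate {n} distinct prompt variations for a design generator such as Lovable, Bolt, or v0.",
        "",
        "Fixed facts (keep these identical in every prompt):",
    ]
    lines += [f"- {key}: {value}" for key, value in facts.items()]
    lines += ["", "Variables (each prompt should take a different angle on these):"]
    lines += [f"- {name}, e.g. {', '.join(options)}" for name, options in variables.items()]
    lines += ["", "Structure each prompt clearly and independently."]
    return "\n".join(lines)


prompt = meta_prompt(
    facts={
        "Screen": "Daily Wellness Summary, a single screen in a mobile wellness tracking app",
        "User": "busy professional checking in once or twice a day to log mood, energy, and sleep quality",
        "Tone": "calm and approachable",
    },
    variables={
        "Information architecture": ["user state first", "habits first", "recommendations first"],
        "Layout": ["card layout", "scroll feed"],
        "User flow": ["morning check-in", "evening check-in"],
    },
)
print(prompt)
```

Because the variables are listed as examples rather than assignments, the model still chooses how to combine them. That keeps the three directions genuinely different instead of pre-decided.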

5. From Cognitive Walkthrough To Testing Hypothesis

Technique: Casual Tree of Thought + Causal Reasoning + Multi-Roles + Self-Reflection

Cognitive walkthrough is a powerful way to break down a user action and check whether the steps are intuitive.

Example: “User wants to add a task” → Do they know where to click? What to do next? Do they know it worked?

We’ve found this technique super useful for reviewing our own designs. Sometimes there’s already a mockup; other times we’re still arguing with a PM about what should go where. Either way, GPT can help.

Here’s an advanced way to run that process:

Context

You’ve been given a screenshot of a screen where users can create new tasks in a project management app. The main action the user wants to perform is “add a task”. Simulate behavior from two user types: a beginner with no prior experience and a returning user familiar with similar tools.

Task & Instructions

Go through the UI step by step and evaluate:
- Will the user know what to do at each step?
- Will they understand how to perform the action?
- Will they know they’ve succeeded?

For each step, consider alternative user paths (if multiple interpretations of the UI exist). Use a casual Tree-of-Thought method.

At each step, reflect: what assumptions is the user making here? What visual feedback would help reduce uncertainty?

Format

Use a numbered list for each step. For each, add observations, possible confusions, and UX suggestions.

Limits

Don’t assume prior knowledge unless it’s visually implied.

Do not limit analysis to a single user type.

Cognitive walkthroughs are great, but they get even more useful when they lead to testable hypotheses.

After running the walkthrough, you’ll usually uncover moments that might confuse users. Instead of leaving that as a guess, turn those into concrete UX testing hypotheses.
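One way to keep that step honest is to record the walkthrough's three questions per step and per user type, then turn every "no" into a hypothesis statement. Here is a minimal Python sketch of that mapping. `StepFinding` and `hypothesis_candidates` are hypothetical names, and the findings are invented for illustration (in practice they come from the model's walkthrough output or your own review).

```python
from dataclasses import dataclass, field


@dataclass
class StepFinding:
    step: str
    user_type: str  # e.g. "beginner" or "returning user"
    knows_what_to_do: bool
    understands_how: bool
    knows_it_worked: bool
    confusions: list[str] = field(default_factory=list)


def hypothesis_candidates(findings: list[StepFinding]) -> list[str]:
    """Turn every 'no' from the walkthrough into a hypothesis a usability test could check."""
    checks = [
        ("knows_what_to_do", "will not know what to do"),
        ("understands_how", "will not understand how to do it"),
        ("knows_it_worked", "will not know the action succeeded"),
    ]
    hypotheses = []
    for finding in findings:
        for attr, failure in checks:
            if not getattr(finding, attr):
                text = f"At '{finding.step}', a {finding.user_type} {failure}"
                if finding.confusions:
                    text += f" (observed: {'; '.join(finding.confusions)})"
                hypotheses.append(text + ".")
    return hypotheses


findings = [
    StepFinding("Click 'New task'", "beginner", True, True, True),
    StepFinding("Save the task", "beginner", True, True, False, ["No confirmation after saving"]),
    StepFinding("Save the task", "returning user", True, True, True),
]
for hypothesis in hypothesis_candidates(findings):
    print(hypothesis)
```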

We ask GPT to not only flag potential friction points, but to help define how we’d validate them with real users: using a task, a question, or observable behavior.

Task & Instructions

Based on your previous cognitive walkthrough:
- Extract all potential usability hypotheses from the walkthrough.
- For each hypothesis:
  - Assess whether it can be tested through moderated or unmoderated usability testing.
  - Explain what specific UX decision or design element may cause this issue. Use causal reasoning.
- For testable hypotheses:
  - Propose a specific usability task or question.
  - Define a clear validation criterion (how you’ll know if the hypothesis is confirmed or disproved).
  - Evaluate feasibility and signal strength of the test (e.g., how easy it is to test, and how confidently it can validate the hypothesis).
  - Assign a priority score based on Impact, Confidence, and Ease (ICE).

Limits

Don’t invent hypotheses not rooted in your walkthrough output. Only propose tests where user behavior or responses can provide meaningful validation. Skip purely technical or backend concerns.
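ICE rates each hypothesis on Impact, Confidence, and Ease, usually on a 1 to 10 scale. Teams combine the three differently: some multiply, some average. This minimal Python sketch assumes the product. `Hypothesis` and `prioritize` are hypothetical names, and the three hypotheses and their scores are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    statement: str
    impact: int      # 1-10
    confidence: int  # 1-10
    ease: int        # 1-10

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 1-10 scale.
        for name in ("impact", "confidence", "ease"):
            value = getattr(self, name)
            if not 1 <= value <= 10:
                raise ValueError(f"{name} must be between 1 and 10, got {value}")

    @property
    def ice(self) -> int:
        # Product convention; some teams average the three scores instead.
        return self.impact * self.confidence * self.ease


def prioritize(hypotheses: list[Hypothesis]) -> list[Hypothesis]:
    """Sort hypotheses from highest to lowest ICE score."""
    return sorted(hypotheses, key=lambda h: h.ice, reverse=True)


backlog = [
    Hypothesis("Beginners miss the 'New task' button", impact=8, confidence=5, ease=9),
    Hypothesis("Users don't notice the task was saved", impact=7, confidence=8, ease=8),
    Hypothesis("Returning users expect keyboard shortcuts", impact=4, confidence=6, ease=5),
]
for h in prioritize(backlog):
    print(h.ice, h.statement)  # 448, then 360, then 120
```

Whichever formula you pick, use it consistently. Scores are only comparable within one scheme.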

6. Cross-Functional Feedback

Technique: Multi-Roles

Good design is co-created. And good designers are used to working with cross-functional teams: PMs, engineers, analysts, QAs, you name it. Part of the job is turning scattered feedback into clear action items.

Earlier, we talked about how giving AI a “role” helps sharpen its responses. Now let’s level that up: what if we give it multiple roles at once? This is called multi-role prompting. It’s a great way to simulate a design review with input from different perspectives. You get quick insights and a more well-rounded critique of your design.

Role

You are a cross-functional team of experts evaluating a new dashboard design:
- PM (focus: user value & prioritization)
- Engineer (focus: feasibility & edge cases)
- QA tester (focus: clarity & testability)
- Data analyst (focus: metrics & clarity of reporting)
- Designer (focus: consistency & usability)

Context

The team is reviewing a mockup for a new analytics dashboard for internal use.

Task & Instructions

For each role:
- What stands out immediately?
- What concerns might this role have?
- What feedback or suggestions would they give?
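If you run design reviews like this often, it can help to keep the roles as data so you can swap in the roles your team actually has. Here is a minimal Python sketch that rebuilds the prompt above from a role-to-focus mapping. `multi_role_prompt` is a hypothetical helper. The roles, focuses, and questions are taken from the prompt.

```python
ROLES = {
    "PM": "user value & prioritization",
    "Engineer": "feasibility & edge cases",
    "QA tester": "clarity & testability",
    "Data analyst": "metrics & clarity of reporting",
    "Designer": "consistency & usability",
}
QUESTIONS = [
    "What stands out immediately?",
    "What concerns might this role have?",
    "What feedback or suggestions would they give?",
]


def multi_role_prompt(artifact: str, roles: dict[str, str], questions: list[str]) -> str:
    """Build one review prompt in which every role answers the same questions from its own focus."""
    lines = [f"You are a cross-functional team of experts evaluating {artifact}:"]
    lines += [f"- {name} (focus: {focus})" for name, focus in roles.items()]
    lines += ["", "For each role:"]
    lines += [f"- {question}" for question in questions]
    return "\n".join(lines)


review_prompt = multi_role_prompt("a mockup for a new internal analytics dashboard", ROLES, QUESTIONS)
print(review_prompt)
```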

Designing With AI Is A Skill, Not A Shortcut

By now, you’ve seen that prompting isn’t just about typing better instructions. It’s about designing better thinking.

We’ve explored several techniques, and each is useful in different contexts:

| Technique | When to use it |
| --- | --- |
| Role + Context + Instructions + Constraints | Anytime you want consistent, focused responses (especially in research, decomposition, and analysis). |
| Checkpoints / Self-verification | When accuracy, structure, or layered reasoning matters. Great for complex planning or JTBD breakdowns. |
| Reasoning Before Understanding (RBU) | When input materials are large or ambiguous (like docs or screenshots). Helps reduce misinterpretation. |
| Tree of Thought (ToT) | When you want the model to explore options, backtrack, and compare. Ideal for audits, evaluations, or divergent thinking. |
| Meta-prompting | When you’re not sure how to even ask the right question. Use it early in fuzzy or creative concepting. |
| Multi-role prompting | When you need well-rounded, cross-functional critique or to simulate team feedback. |
| Memory-updated “opponent” prompting | When you want to challenge your own logic, uncover blind spots, or push beyond echo chambers. |

But even the best techniques won’t matter if you use them blindly, so ask yourself:

Do I need precision or perspective right now?

Precision? Try Role + Checkpoints for clarity and control.

Perspective? Use Multi-Role or Tree of Thought to explore alternatives.

Should the model reflect my framing, or break it?

Reflect it? Use Role + Context + Instructions.

Break it? Try Opponent prompting to challenge assumptions.

Am I trying to reduce ambiguity, or surface complexity?

Reduce ambiguity? Use Meta-prompting to clarify your ask.

Surface complexity? Go with ToT or RBU to expose hidden layers.

Is this task about alignment, or exploration?

Alignment? Use Multi-role prompting to simulate consensus.

Exploration? Use Cognitive Walkthrough to push deeper.

Remember, you don’t need a long prompt every time. Use detail when the task demands it, not out of habit. AI can do a lot, but it reflects the shape of your thinking. And prompting is how you shape it. So don’t just prompt better. Think better. And design with AI — not around it.