Original Source: https://smashingmagazine.com/2024/11/desktop-wallpaper-calendars-december-2024/

As the year is coming to a close, many of us feel rushed, meeting deadlines, finishing off projects, or preparing for the upcoming holiday season. So how about some beautiful, wintery desktop wallpapers to sweeten up the month and get you in the mood for December — and the holidays, if you’re celebrating?

More than thirteen years ago, we started our monthly wallpapers series here at Smashing Magazine. It’s the perfect opportunity to put your creative skills to the test but also to find just the right wallpaper to accompany you through the new month. This month is no exception, of course, so following our cozy little tradition, we have a new collection of December wallpapers for you to choose from. All of them were created with love by artists and designers from across the globe and can be downloaded for free.

A huge thank you to everyone who tickled their creativity and shared their designs with us. This post wouldn’t exist without you. ❤️ Happy December!

You can click on every image to see a larger preview.

We respect and carefully consider the ideas and motivation behind each and every artist’s work. This is why we give all artists the full freedom to explore their creativity and express emotions and experience through their works. This is also why the themes of the wallpapers weren’t influenced by us in any way but rather designed from scratch by the artists themselves.

Submit your wallpaper design! 👩‍🎨

Feeling inspired? We are always looking for creative talent and would love to feature your desktop wallpaper in one of our upcoming posts. We can’t wait to see what you’ll come up with!

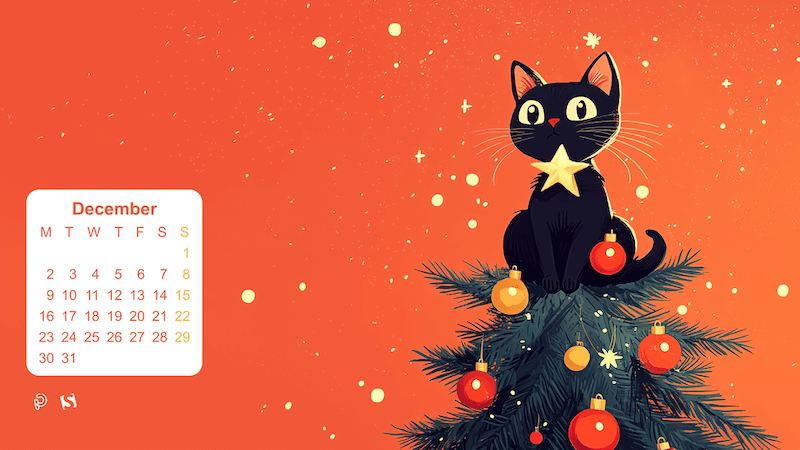

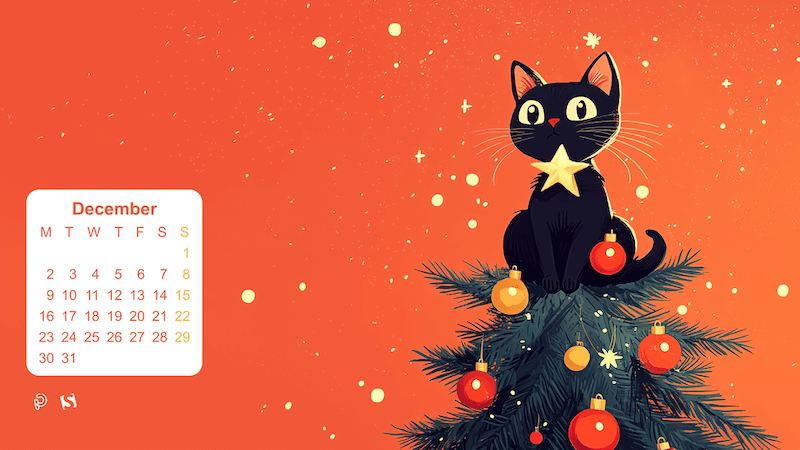

Paws-itively Festive!

“This holiday season, even our furry friends are getting in the spirit! Our mischievous little tree-topper has found the purr-fect perch to keep an eye on all the festivities. May your days be merry, bright, and filled with joyful surprises just like this one!” — Designed by PopArt Studio from Serbia.

preview

with calendar: 320×480, 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440

without calendar: 320×480, 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440

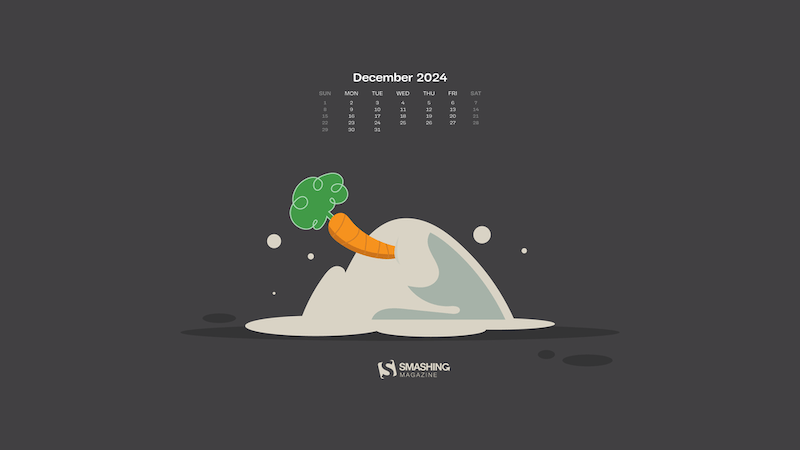

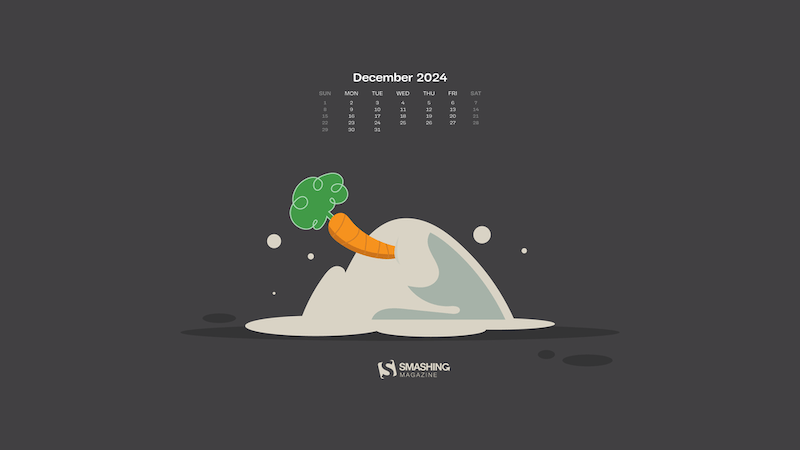

Merry Christmmm…

Designed by Ricardo Gimenes from Spain.

preview

with calendar: 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440, 3840×2160

without calendar: 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440, 3840×2160

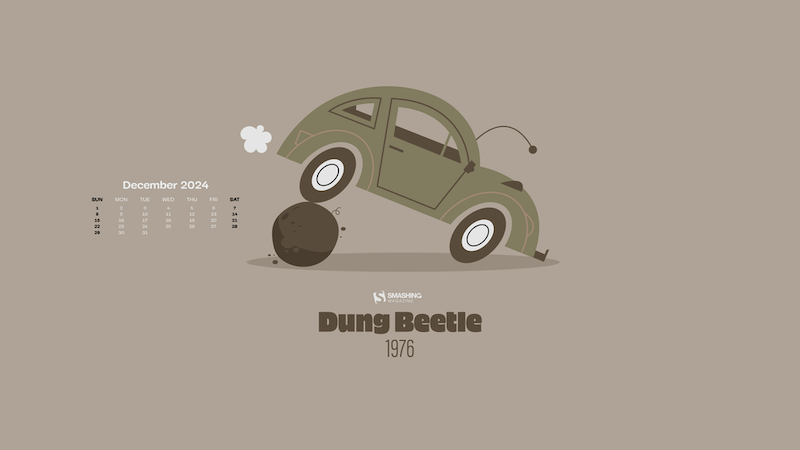

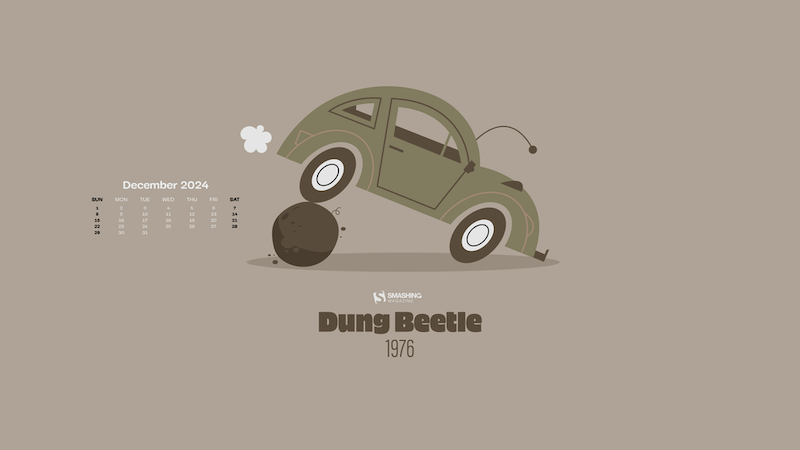

Dung Beetle

Designed by Ricardo Gimenes from Spain.

preview

with calendar: 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440, 3840×2160

without calendar: 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440, 3840×2160

Dear Moon, Merry Christmas

Designed by Vlad Gerasimov from Georgia.

preview

without calendar: 800×480, 800×600, 1024×600, 1024×768, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1440×960, 1600×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440, 2560×1600, 2880×1800, 3072×1920, 3840×2160, 5120×2880

Cardinals In Snowfall

“During Christmas season, in the cold, colorless days of winter, Cardinal birds are seen as symbols of faith and warmth. In the part of America I live in, there is snowfall every December. While the snow is falling, I can see gorgeous Cardinals flying in and out of my patio. The intriguing color palette of the bright red of the Cardinals, the white of the flurries and the brown/black of dry twigs and fallen leaves on the snow-laden ground fascinates me a lot, and inspired me to create this quaint and sweet, hand-illustrated surface pattern design as I wait for the snowfall in my town!” — Designed by Gyaneshwari Dave from the United States.

preview

without calendar: 640×960, 768×1024, 1280×720, 1280×1024, 1366×768, 1920×1080, 2560×1440

The House On The River Drina

“Since we often yearn for a peaceful and quiet place to work, we have found inspiration in the famous house on the River Drina in Bajina Bašta, Serbia. Wouldn’t it be great being in nature, away from civilization, swaying in the wind and listening to the waves of the river smashing your house, having no neighbors to bother you? Not sure about the Internet, though…” — Designed by PopArt Studio from Serbia.

preview

without calendar: 640×480, 800×600, 1024×1024, 1152×864, 1280×720, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440

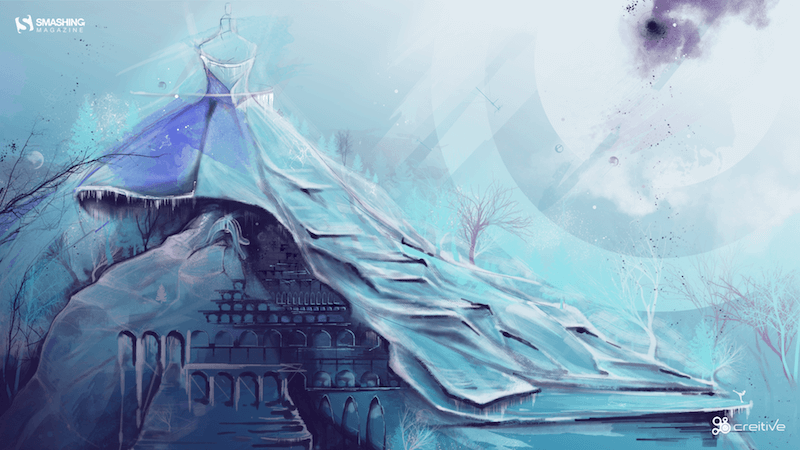

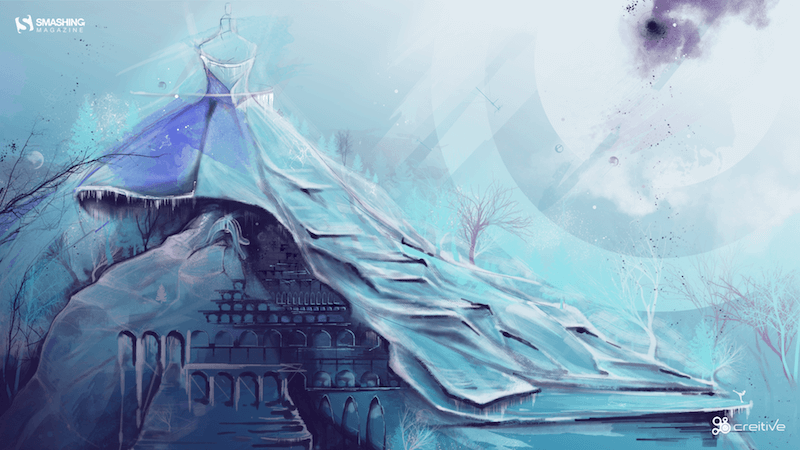

Enchanted Blizzard

“A seemingly forgotten world under the shade of winter glaze hides a moment where architecture meets fashion and change encounters steadiness.” — Designed by Ana Masnikosa from Belgrade, Serbia.

preview

without calendar: 320×480, 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440

Christmas Cookies

“Christmas is coming and a great way to share our love is by baking cookies.” — Designed by Maria Keller from Mexico.

preview

without calendar: 320×480, 640×480, 640×1136, 750×1334, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1242×2208, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440, 2880×1800

Sweet Snowy Tenderness

“You know that warm feeling when you get to spend cold winter days in a snug, homey, relaxed atmosphere? Oh, yes, we love it, too! It is the sentiment we set our hearts on for the holiday season, and this sweet snowy tenderness is for all of us who adore watching the snowfall from our windows. Isn’t it romantic?” — Designed by PopArt Studio from Serbia.

preview

without calendar: 320×480, 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440

Getting Hygge

“There’s no more special time for a fire than in the winter. Cozy blankets, warm beverages, and good company can make all the difference when the sun goes down. We’re all looking forward to generating some hygge this winter, so snuggle up and make some memories.” — Designed by The Hannon Group from Washington, D.C.

preview

without calendar: 320×480, 640×480, 800×600, 1024×768, 1280×960, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1440, 2560×1440

Anonymoose

Designed by Ricardo Gimenes from Spain.

preview

without calendar: 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440, 3840×2160

Joy To The World

“Joy to the world, all the boys and girls now, joy to the fishes in the deep blue sea, joy to you and me.” — Designed by Morgan Newnham from Boulder, Colorado.

preview

without calendar: 320×480, 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440

King Of Pop

Designed by Ricardo Gimenes from Spain.

preview

without calendar: 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440, 3840×2160

Christmas Woodland

Designed by Mel Armstrong from Australia.

preview

without calendar: 320×480, 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440

Catch Your Perfect Snowflake

“This time of year, people tend to dream big and expect miracles. Let your dreams come true!” Designed by Igor Izhik from Canada.

preview

without calendar: 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440, 2560×1600

Trailer Santa

“A mid-century modern Christmas scene outside the norm of snowflakes and winter landscapes.” Designed by Houndstooth from the United States.

preview

without calendar: 1024×1024, 1280×800, 1280×1024, 1440×900, 1680×1050, 2560×1440

Silver Winter

Designed by Violeta Dabija from Moldova.

preview

without calendar: 1024×768, 1280×800, 1440×900, 1680×1050, 1920×1200, 2560×1440

Gifts Lover

Designed by Elise Vanoorbeek from Belgium.

preview

without calendar: 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440

On To The Next One

“Endings intertwined with new beginnings, challenges we rose to and the ones we weren’t up to, dreams fulfilled and opportunities missed. The year we say goodbye to leaves a bitter-sweet taste, but we’re thankful for the lessons, friendships, and experiences it gave us. We look forward to seeing what the new year has in store, but, whatever comes, we will welcome it with a smile, vigor, and zeal.” — Designed by PopArt Studio from Serbia.

preview

without calendar: 320×480, 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440

The Matterhorn

“Christmas is always such a magical time of year, so we created this wallpaper to blend the majesty of the mountains with a little bit of magic.” — Designed by Dominic Leonard from the United Kingdom.

preview

without calendar: 320×480, 800×600, 1024×768, 1280×720, 1400×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440

Ninja Santa

Designed by Elise Vanoorbeek from Belgium.

preview

without calendar: 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1440, 2560×1440

It’s In The Little Things

Designed by Thaïs Lenglez from Belgium.

preview

without calendar: 640×480, 800×600, 1024×768, 1280×1024, 1440×900, 1600×1200, 1680×1050, 1920×1080, 1920×1200, 2560×1440

Ice Flowers

“I took some photos during a very frosty and cold week before Christmas.” Designed by Anca Varsandan from Romania.

preview

without calendar: 1024×768, 1280×800, 1440×900, 1680×1050, 1920×1200

Surprise

“Surprise is the greatest gift which life can grant us.” — Designed by PlusCharts from India.

preview

without calendar: 360×640, 1024×768, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×900, 1680×1200, 1920×1080

Season’s Greetings From Australia

Designed by Tazi Designs from Australia.

preview

without calendar: 320×480, 640×480, 800×600, 1024×768, 1152×864, 1280×720, 1280×960, 1600×1200, 1920×1080, 1920×1440, 2560×1440

Christmas Selfie

Designed by Emanuela Carta from Italy.

preview

without calendar: 320×480, 800×600, 1280×800, 1280×1024, 1440×900, 1680×1050, 2560×1440

Winter Wonderland

“‘Winter is the time for comfort, for good food and warmth, for the touch of a friendly hand and for a talk beside the fire: it is the time for home.’ (Edith Sitwell)” — Designed by Dipanjan Karmakar from India.

preview

without calendar: 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1440, 2560×1440

Winter Morning

“Early walks in the fields when the owls still sit on the fences and stare funny at you.” — Designed by Bo Dockx from Belgium.

preview

without calendar: 320×480, 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1920×1080, 1920×1200, 1920×1440, 2560×1440

Dream What You Want To Do

“The year will end, hope the last month, you can do what you want to do, seize the time, cherish yourself, expect next year we will be better!” — Designed by Hong Zi-Qing from Taiwan.

preview

without calendar: 1024×768, 1152×864, 1280×720, 1280×960, 1366×768, 1400×1050, 1530×900, 1600×1200, 1920×1080, 1920×1440, 2560×1440

Happy Holidays

Designed by Ricardo Gimenes from Spain.

preview

without calendar: 640×480, 800×480, 800×600, 1024×768, 1024×1024, 1152×864, 1280×720, 1280×800, 1280×960, 1280×1024, 1366×768, 1400×1050, 1440×900, 1600×1200, 1680×1050, 1680×1200, 1920×1080, 1920×1200, 1920×1440, 2560×1440, 3840×2160