Collective #818

Original Source: https://tympanus.net/codrops/collective/collective-818/

What is Utility-First CSS? * Hyperdiv * htmz * 404 Creatives

Original Source: https://smashingmagazine.com/2024/03/modern-css-tooltips-speech-bubbles-part1/

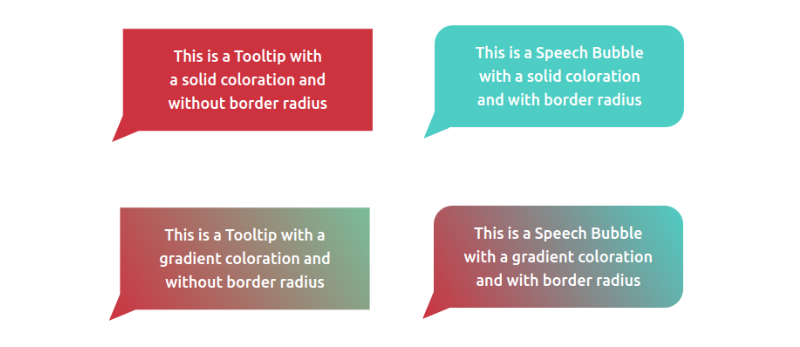

In a previous article, we explored ribbon shapes and different ways to approach them using clever combinations of CSS gradients and clip-path(). This time, I’d like to explore another shape, one that you’ve likely had to tackle at least once in your front-end life: tooltips. You know what we’re talking about, those little things that look like speech bubbles from comic books. They’re everywhere in the wild, from a hover effect for buttons to the text messaging app on your phone.

The shapes may look easy to make in CSS at first glance, but it always ends with a lot of struggles. For example, how do you adjust the position of the tail to indicate whether the tooltip is coming from a left, right, or center position? There are plenty of considerations to take into account when making tooltips — including overflowage, collision detection, and semantics — but it’s the shape and direction of the tail that I want to focus on because I often see inflexible fixed units used to position them.

Forget what you already know about tooltips because in this article, we will start from zero, and you will learn how to build a tooltip with minimal markup powered by modern CSS that provides flexibility to configure the component by adjusting CSS variables. We are not going to build one or two shapes, but… 100 different shapes!

That may sound like we’re getting into a super-long article, but actually, we can easily get there by adjusting a few values. In the end, you will have a back pocket full of CSS tricks that can be combined to create any shape you want.

And guess what? I’ve already created an online collection of 100 different tooltip shapes where you can easily copy and paste the code for your own use, but stay with me. You’re going to want to know the secret to unlocking hundreds of possibilities with the least possible code.

We’ll start with the shapes themselves, discussing how we can cut out the bubble and tail by combining CSS gradients and clipping. Then, we’ll pick things back up in a second article dedicated to improving another common approach to tooltips using borders and custom shapes.

The HTML

We’re only working with a single element:

<div class="tooltip">Your text content goes here</div>

That’s the challenge: Create hundreds of tooltip variations in CSS with only a single element to hook into in the HTML.

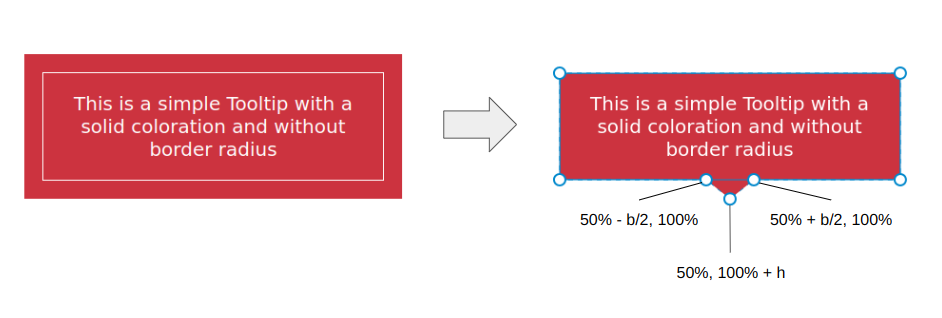

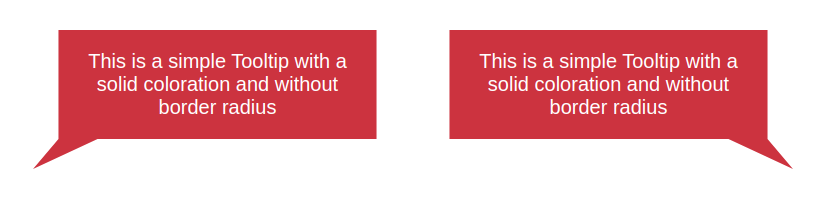

A Simple Tooltip Tail

I’m going to skip right over the basic rectangular shape; you know how to set a width and height (or aspect-ratio) on elements. Let’s start with the simplest shape for the tooltip’s tail, one that can be accomplished with only two CSS properties:

.tooltip {
  /* tail dimension */
  --b: 2em; /* base */
  --h: 1em; /* height */
  border-image: fill 0 // var(--h)
    conic-gradient(#CC333F 0 0); /* the color */
  clip-path:
    polygon(0 100%, 0 0, 100% 0, 100% 100%,
      calc(50% + var(--b) / 2) 100%,
      50% calc(100% + var(--h)),
      calc(50% - var(--b) / 2) 100%);
}

The border-image property creates an “overflowing color” while clip-path defines the shape of the tooltip with polygon() coordinates. (Speaking of border-image, I wrote a deep-dive on it and explain how it might be the only CSS property that supports double slashes in the syntax!)

The tooltip’s tail is placed at the bottom center, and we have two variables to control its dimensions: --b for the base and --h for the height.

We can do the exact same thing in more intuitive ways, like defining a background and then border (or padding) to create space for the tail:

background: #CC333F;
border-bottom: var(--h) solid #0000;

…or using box-shadow (or outline) for the outside color:

background: #CC333F;
box-shadow: 0 0 0 var(--h) #CC333F;

While these approaches are indeed easier, they require an extra declaration compared to the single border-image declaration we used. Plus, we’ll see later that border-image is really useful for accomplishing more complex shapes.

Here is a demo with the different directions so you can see how easy it is to adjust the above code to change the tail’s position.

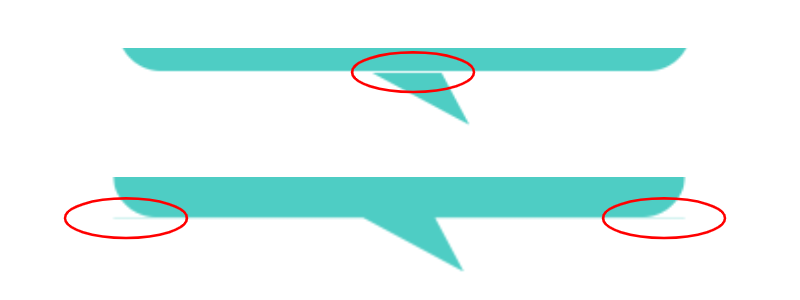

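The tail’s position is controlled by a variable, --p. When its value gets close to either edge, the tail can fall outside the container. We can fix this by setting limits to some values so the tail always stays inside. Two points of the polygon are concerned with the fix.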

This:

calc(var(--p) + var(--b) / 2) 100%

…and this:

calc(var(--p) - var(--b) / 2) 100%

The first calc() needs to be clamped to 100% to avoid the overflow from the right side, and the second one needs to be clamped to 0% to avoid the overflow from the left side. We can use the min() and max() functions to establish the range limits:

clip-path:
  polygon(0 100%, 0 0, 100% 0, 100% 100%,
    min(100%, var(--p) + var(--b) / 2) 100%,
    var(--p) calc(100% + var(--h)),
    max(0%, var(--p) - var(--b) / 2) 100%);
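To see what the clamping does with real numbers, here is a minimal JavaScript sketch that mirrors the min() and max() expressions in pixels. The helper name tailBasePoints and its parameters are hypothetical and not part of the original demo; the position is passed as a fraction of the element width.

```javascript
// Hypothetical helper (not from the article): resolves the two base points
// of the tail in pixels, mirroring the clamped polygon coordinates.
// width: element width in px, p: tail position as a fraction (0 to 1), b: tail base in px.
function tailBasePoints(width, p, b) {
  const center = width * p;
  // min(100%, var(--p) + var(--b) / 2)
  const right = Math.min(width, center + b / 2);
  // max(0%, var(--p) - var(--b) / 2)
  const left = Math.max(0, center - b / 2);
  return { left, right };
}

console.log(tailBasePoints(200, 0.5, 32)); // { left: 84, right: 116 }
console.log(tailBasePoints(200, 1, 32));   // { left: 184, right: 200 }
console.log(tailBasePoints(200, 0, 32));   // { left: 0, right: 16 }
```

With the position at either extreme, one base point is pinned to the container edge, so the base gets narrower instead of spilling outside.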

Adjusting The Tail Shape

Let’s integrate another variable, --x, into the clip-path and use it to adjust the shape of the tail:

.tooltip {
  /* tail dimension */
  --b: 2em; /* base */
  --h: 1em; /* height */
  --p: 50%; /* tail position */
  --x: -2em; /* tail shape */
  border-image: fill 0 // 9999px
    conic-gradient(#CC333F 0 0); /* the color */
  clip-path:
    polygon(0 100%, 0 0, 100% 0, 100% 100%,
      min(100%, var(--p) + var(--b) / 2) 100%,
      calc(var(--p) + var(--x)) calc(100% + var(--h)),
      max(0%, var(--p) - var(--b) / 2) 100%);
}

The --x variable can be either positive or negative (using whatever unit you want, including percentages). What we’re doing is adding the variable that establishes the tail’s shape, --x, to the tail’s position, --p. In other words, we’ve updated this:

var(--p) calc(100% + var(--h))

…to this:

calc(var(--p) + var(--x)) calc(100% + var(--h))

And here is the outcome:

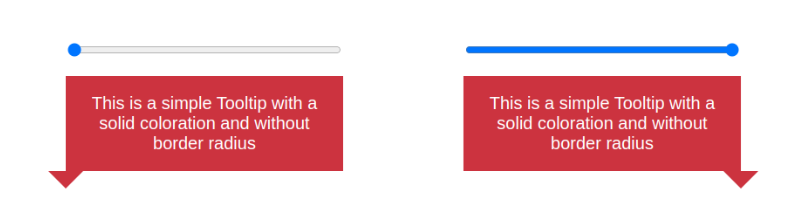

The tooltip’s tail points in either the right or left direction, depending on whether --x is a positive or negative value. Go ahead and use the range sliders in the following demo to see how the tooltip’s tail is re-positioned (--p) and re-shaped (--x) when adjusting two variables.

Note that I have updated the border-image outset to an impractically large value (9999px) instead of using the --h variable. The shape of the tail can be any type of triangle and can take a bigger area. Since there’s no way for us to know the exact value of the outset, we use that big value to make sure we have enough room to fill the tail in with color, no matter its shape.

Does the outset concept look strange to you? I know that working with border-image isn’t something many of us do all that often, so if this approach is tough to wrap your head around, definitely go check out my border-image article for a thorough demonstration of how it works.

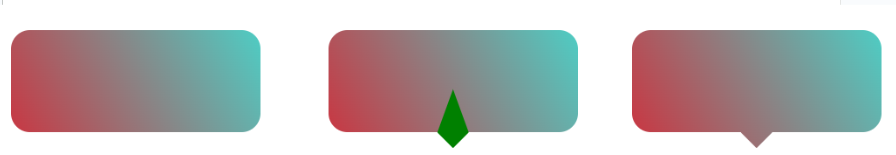

Working With Gradients

Most of the trouble starts when we want to color the tooltip with a gradient instead of a flat color. Applying one color is simple — even with older techniques — but when it comes to gradients, it’s not easy to make the tail color flow smoothly into the container’s color.

But guess what? That’s no problem for us because we are already using a gradient in our border-image declaration!

border-image: fill 0 // var(--h)
  conic-gradient(#CC333F 0 0);

border-image only accepts gradients or images, so to produce a solid color, I had to use a gradient consisting of just one color. But if you change it into a “real” gradient that transitions between two or more colors, then you get your tooltip gradient. That’s all!

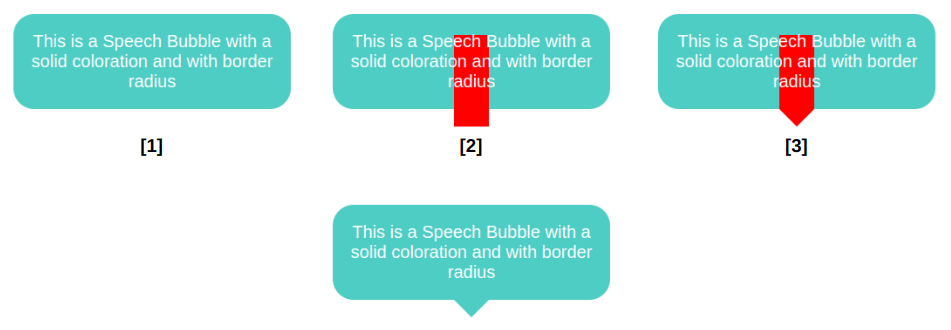

We start by declaring a background and border-radius on the .tooltip. Nothing fancy. Then, we move to the border-image property so that we can add a bar (highlighted in red in the last figure) that slightly overflows the container from the bottom. This part is a bit tricky, and here I invite you to read my previous article about border-image to understand this bit of CSS magic. From there, we add the clip-path and get our final shape.

.tooltip {
  /* triangle dimension */
  --b: 2em; /* base */
  --h: 1em; /* height */
  --p: 50%; /* position */
  --r: 1.2em; /* the radius */
  --c: #4ECDC4;
  border-radius: var(--r);
  clip-path: polygon(0 100%, 0 0, 100% 0, 100% 100%,
    min(100%, var(--p) + var(--b) / 2) 100%,
    var(--p) calc(100% + var(--h)),
    max(0%, var(--p) - var(--b) / 2) 100%);
  background: var(--c);
  border-image: conic-gradient(var(--c) 0 0) fill 0 /
    var(--r) calc(100% - var(--p) - var(--b) / 2) 0 calc(var(--p) - var(--b) / 2) /
    0 0 var(--h) 0;
}

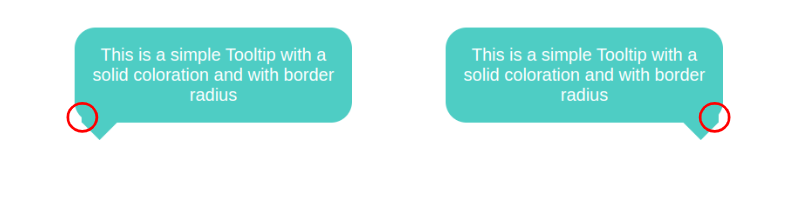

This visual glitch happens when the border-image overlaps with the rounded corners. To fix this, we need to adjust the border-radius value based on the tail’s position (--p).

We are not going to update all the radii, only the bottom ones and, more precisely, the horizontal values. I want to remind you that border-radius accepts up to eight values — each corner takes two values that set the horizontal and vertical directions — and in our case, we will update the horizontal value of the bottom-left and bottom-right corners:

border-radius:
  /* horizontal values */
  var(--r)
  var(--r)
  min(var(--r), 100% - var(--p) - var(--b) / 2) /* horizontal bottom-right */
  min(var(--r), var(--p) - var(--b) / 2) /* horizontal bottom-left */
  /
  /* vertical values */
  var(--r)
  var(--r)
  var(--r)
  var(--r)

All the corner values are equal to --r, except for the bottom-left and bottom-right corners. Notice the forward slash (/), as it is part of the syntax that separates the horizontal and vertical radii values.

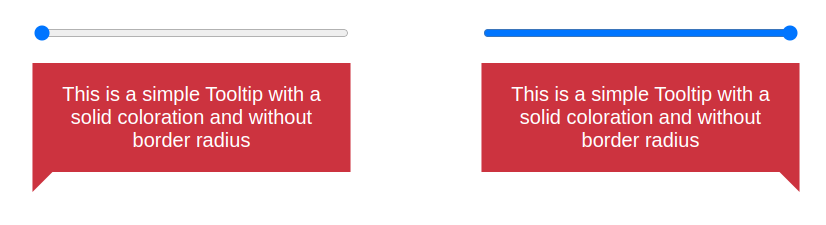

Now, let’s dig in and understand what is happening here. For the bottom-left corner, when the tail is positioned toward the right, the value of --p is large, so min() returns --r and the radius stays equal to --r, which serves as the upper limit. But when the position gets closer to the left, the value of --p decreases and, at some point, var(--p) - var(--b) / 2 becomes smaller than --r. The result is the radius slowly decreasing until it reaches 0. It adjusts as the position updates!

I know that’s a lot to process, and a visual aid usually helps. Try slowly updating the tail’s position in the following demo to get a clearer picture of what’s happening.
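If a demo isn’t at hand, the same logic can be checked with numbers. The sketch below mirrors the two min() expressions in JavaScript. The function name bottomRadii is hypothetical, all lengths are in pixels, and the Math.max(0, …) guard is my assumption about how a negative result is treated (a radius cannot go below zero).

```javascript
// Hypothetical helper (not from the article): horizontal radii of the two
// bottom corners for a given tail position.
// width: element width in px, p: tail position as a fraction (0 to 1),
// b: tail base in px, r: the regular corner radius in px.
function bottomRadii(width, p, b, r) {
  // min(var(--r), 100% - var(--p) - var(--b) / 2)
  const bottomRight = Math.min(r, width - width * p - b / 2);
  // min(var(--r), var(--p) - var(--b) / 2)
  const bottomLeft = Math.min(r, width * p - b / 2);
  // Assumption: negative results are treated as 0.
  return {
    bottomLeft: Math.max(0, bottomLeft),
    bottomRight: Math.max(0, bottomRight),
  };
}

console.log(bottomRadii(200, 0.5, 80, 20));  // { bottomLeft: 20, bottomRight: 20 }
console.log(bottomRadii(200, 0.25, 80, 20)); // { bottomLeft: 10, bottomRight: 20 }
```

In the second call, the tail has moved toward the left, so only the bottom-left radius shrinks while the bottom-right one keeps its full value.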

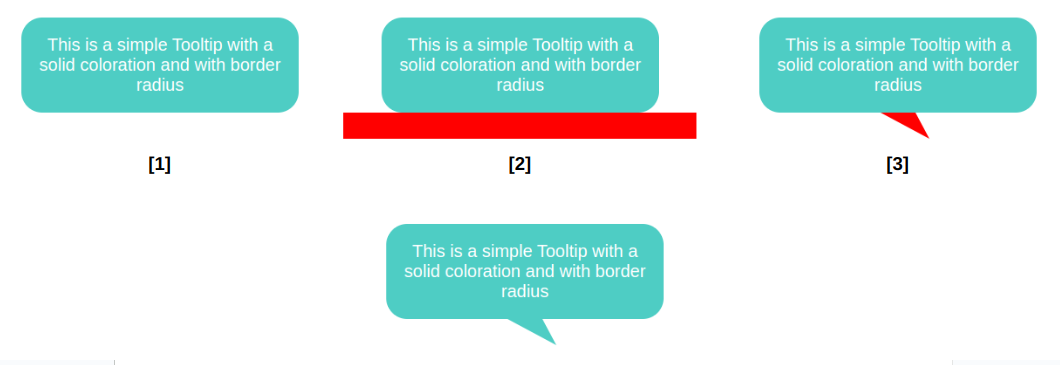

This time, the border image creates a horizontal bar along the bottom that is positioned directly under the element and extends outside of its boundary so that we have enough color for the tail when it’s closer to the edge.

.tooltip {
  /* tail dimension */
  --b: 2em; /* base */
  --h: 1.5em; /* height */
  --p: 50%; /* position */
  --x: 1.8em; /* tail shape */
  --r: 1.2em; /* the radius */
  --c: #4ECDC4;
  border-radius: var(--r) var(--r) min(var(--r), 100% - var(--p) - var(--b) / 2) min(var(--r), var(--p) - var(--b) / 2) / var(--r);
  clip-path: polygon(0 100%, 0 0, 100% 0, 100% 100%,
    min(100%, var(--p) + var(--b) / 2) 100%,
    calc(var(--p) + var(--x)) calc(100% + var(--h)),
    max(0%, var(--p) - var(--b) / 2) 100%);
  background: var(--c);
  border-image: conic-gradient(var(--c) 0 0) 0 0 1 0 / 0 0 var(--h) 0 / 0 999px var(--h) 999px;
}

That’s why I do not use this approach when working with a simple isosceles triangle. This said, the method is perfectly fine, and in most cases, you may not see any visual glitches.

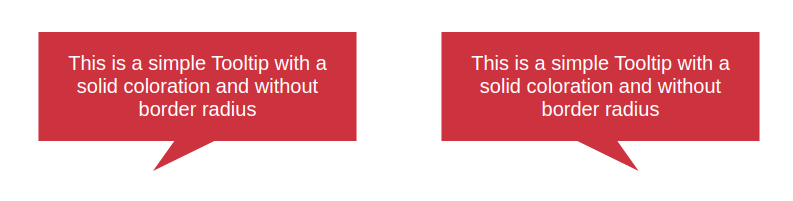

Putting Everything Together

We’ve looked at tooltips with tails that have equal sides, ones with tails that change shape, ones where the tail changes position and direction, ones with rounded corners, and ones that are filled in with gradients. What would it look like if we combined all of these examples into one mega-demo?

We can do it, but not by combining the approaches we’ve covered. We need another method, this time using a pseudo-element. No border-image for this one, I promise!

.tooltip {
  /* triangle dimension */
  --b: 2em; /* base */
  --h: 1em; /* height */
  --p: 50%; /* position */
  --r: 1.2em; /* the radius */
  border-radius: var(--r) var(--r) min(var(--r), 100% - var(--p) - var(--b) / 2) min(var(--r), var(--p) - var(--b) / 2) / var(--r);
  background: 0 0 / 100% calc(100% + var(--h))
    linear-gradient(60deg, #CC333F, #4ECDC4); /* the gradient */
  position: relative;
  z-index: 0;
}
.tooltip:before {
  content: "";
  position: absolute;
  z-index: -1;
  inset: 0 0 calc(-1 * var(--h));
  background-image: inherit;
  clip-path:
    polygon(50% 50%,
      min(100%, var(--p) + var(--b) / 2) calc(100% - var(--h)),
      var(--p) 100%,
      max(0%, var(--p) - var(--b) / 2) calc(100% - var(--h)));
}

The pseudo-element is used to create the tail at the bottom and notice how it inherits the gradient from the main element to simulate a continuous gradient that covers the entire shape.

Another important thing to note is the background-size declared in the .tooltip. The pseudo-element is covering a bigger area due to the negative bottom value, so we have to increase the height of the gradient so it covers the same area.

Can you figure it out? The code for all of them is included in my tooltip collection if you need a reference, but do try to make them yourself — it’s good exercise! Maybe you will find a different (or perhaps better) approach than mine.

Original Source: https://www.creativebloq.com/news/olly-gibbs-oscars-poster

Olly Gibbs does it again.

Original Source: https://www.creativebloq.com/news/iphone-16

Every rumour, all in one place.

Original Source: https://www.creativebloq.com/news/oompa-loompa-art

Her image will live on for eternity.

Original Source: https://www.sitepoint.com/next-js-internationalization/?utm_source=rss

Dive into internationalization (i18n) by exploring Next.js 14’s new features and how to build multilingual web experiences effortlessly.

Continue reading Enhanced Internationalization (i18n) in Next.js 14 on SitePoint.

Original Source: https://abduzeedo.com/branding-and-visual-identity-insights-forte-whey-project

Branding and Visual Identity: Insights from Forte Whey Project

abduzeedo, 02.27.24

Explore the intersection of branding and visual identity in the Forte Whey project, a case study in modern, health-focused brand creation.

In the competitive landscape of health and nutrition, the Forte Whey project by Daniel Medeiros stands as a testament to innovative branding. This project showcases how a brand can offer a compromise between health and taste, challenging the notion that nutritious snacks must sacrifice flavor for benefits. Forte Whey, designed for those committed to a healthy lifestyle without compromising on the joy of eating, embodies strength not just in physical form but as an attitude and determination.

The crux of developing Forte Whey’s brand identity was to navigate the common misconception that healthy snacks are dull. The challenge was to create a visual language that communicates modernity, passion, and innovation while distinguishing the brand in a crowded marketplace. The solution? A bold typographic logo that symbolizes strength and contemporary aesthetics, complemented by a vibrant color palette of neon, black, and beige. These elements work in harmony to not only promise a healthy lifestyle but also a bold statement against the mundane.

Forte Whey’s design strategy is a blend of art and science. The typography is not merely letters but a representation of the brand’s core values. The color scheme is carefully chosen to evoke a sense of innovation and boldness, appealing to consumers seeking both health benefits and taste. The cohesive design elements ensure the brand stands out, making a significant impact at the point of sale.

This project exemplifies how thoughtful design can transform brand perception, making it an excellent study for those interested in the intersection of branding and visual identity. By focusing on the essence of the brand and its promise to consumers, Daniel Medeiros has crafted a visual identity that truly differentiates Forte Whey in the market. This case study not only inspires designers but also brands looking to make a mark in their respective industries.

Branding and visual identity artifacts

For more information make sure to check out Daniel on Behance and LinkedIn.

Original Source: https://www.creativebloq.com/infographic/tools-2131971

The best free (and paid-for) infographic maker options.

Original Source: https://smashingmagazine.com/2024/02/reporting-core-web-vitals-performance-api/

This article is sponsored by DebugBear

There’s quite a buzz in the performance community with the Interaction to Next Paint (INP) metric becoming an official Core Web Vitals (CWV) metric in a few short weeks. If you haven’t heard, INP is replacing the First Input Delay (FID) metric, something you can read all about here on Smashing Magazine as a guide to prepare for the change.

But that’s not what I really want to talk about. With performance at the forefront of my mind, I decided to head over to MDN for a fresh look at the Performance API. We can use it to report the load time of elements on the page, even going so far as to report on Core Web Vitals metrics in real time. Let’s look at a few ways we can use the API to report some CWV metrics.

Browser Support Warning

Before we get started, a quick word about browser support. The Performance API is huge in that it contains a lot of different interfaces, properties, and methods. While the majority of it is supported by all major browsers, Chromium-based browsers are the only ones that support all of the CWV properties. The only other is Firefox, which supports the First Contentful Paint (FCP) and Largest Contentful Paint (LCP) API properties.

So, we’re looking at a feature of features, as it were, where some are well-established, and others are still in the experimental phase. But as far as Core Web Vitals go, we’re going to want to work in Chrome for the most part as we go along.
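Since support varies, it can help to ask the browser what it can observe before relying on a metric. The sketch below uses the static PerformanceObserver.supportedEntryTypes list for that. The function name supportedVitals and the list of wanted types are my own choices for illustration, using the entry types covered in this article.

```javascript
// Hypothetical helper: reports which of the entry types used in this article
// are observable. The list defaults to what the current environment exposes
// and falls back to an empty list where PerformanceObserver is unavailable.
function supportedVitals(
  supported = globalThis.PerformanceObserver?.supportedEntryTypes ?? []
) {
  const wanted = [
    "largest-contentful-paint",
    "paint",
    "layout-shift",
    "event",
    "long-animation-frame",
  ];
  return Object.fromEntries(
    wanted.map((type) => [type, supported.includes(type)])
  );
}

// Logs an object of booleans keyed by entry type.
console.log(supportedVitals());
```

Passing a list explicitly, as in supportedVitals(["paint"]), makes the check easy to try without a particular browser.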

First, We Need Data Access

There are two main ways to retrieve the performance metrics we care about:

Using the performance.getEntries() method, or

Using a PerformanceObserver instance.

Using a PerformanceObserver instance offers a few important advantages:

PerformanceObserver observes performance metrics and dispatches them over time. In contrast, performance.getEntries() always returns the entire list of entries recorded since performance measurement started.

PerformanceObserver dispatches the metrics asynchronously, which means they don’t have to block what the browser is doing.

The element performance metric type doesn’t work with the performance.getEntries() method anyway.

That all said, let’s create a PerformanceObserver:

const lcpObserver = new PerformanceObserver(list => {});

For now, we’re passing an empty callback function to the PerformanceObserver constructor. Later on, we’ll change it so that it actually does something with the observed performance metrics. For now, let’s start observing:

lcpObserver.observe({ type: "largest-contentful-paint", buffered: true });

The first very important thing in that snippet is the buffered: true property. Setting this to true means that we not only observe performance metrics dispatched after we start observing, but we also get the performance metrics that were queued by the browser before we started observing.

The second very important thing to note is that we’re working with the largest-contentful-paint entry type. That’s what’s cool about the Performance API: it can be used to measure very specific things but also supports entry types that are mapped directly to CWV metrics. We’ll start with the LCP metric before looking at other CWV metrics.

Reporting The Largest Contentful Paint

The largest-contentful-paint entry type looks at everything on the page, identifying the biggest piece of content on the initial view and how long it takes to load. In other words, we’re observing the full page load and getting stats on the largest piece of content rendered in view.

We already have our Performance Observer and callback:

const lcpObserver = new PerformanceObserver(list => {});
lcpObserver.observe({ type: "largest-contentful-paint", buffered: true });

Let’s fill in that empty callback so that it returns a list of entries once performance measurement starts:

// The Performance Observer
const lcpObserver = new PerformanceObserver(list => {
  // Returns the entire list of entries
  const entries = list.getEntries();
});
// Call the Observer
lcpObserver.observe({ type: "largest-contentful-paint", buffered: true });

Next, we want to know which element is pegged as the LCP. It’s worth noting that the element representing the LCP is always the last element in the ordered list of entries. So, we can look at the list of returned entries and return the last one:

// The Performance Observer
const lcpObserver = new PerformanceObserver(list => {
  // Returns the entire list of entries
  const entries = list.getEntries();
  // The element representing the LCP
  const el = entries[entries.length - 1];
});
// Call the Observer
lcpObserver.observe({ type: "largest-contentful-paint", buffered: true });

The last thing is to display the results! We could create some sort of dashboard UI that consumes all the data and renders it in an aesthetically pleasing way. Let’s simply log the results to the console rather than switch gears.

// The Performance Observer
const lcpObserver = new PerformanceObserver(list => {
  // Returns the entire list of entries
  const entries = list.getEntries();
  // The element representing the LCP
  const el = entries[entries.length - 1];
  // Log the results in the console
  console.log(el.element);
});
// Call the Observer
lcpObserver.observe({ type: "largest-contentful-paint", buffered: true });

There we go!

It’s certainly nice knowing which element is the largest. But I’d like to know more about it, say, how long it took for the LCP to render:

// The Performance Observer
const lcpObserver = new PerformanceObserver(list => {
  const entries = list.getEntries();
  // The last entry is the current LCP candidate
  const lcp = entries[entries.length - 1];
  // Log the element together with its own render time
  console.log(
    "The LCP is:",
    lcp.element,
    `The time to render was ${lcp.startTime} milliseconds.`,
  );
});
// Call the Observer
lcpObserver.observe({ type: "largest-contentful-paint", buffered: true });
// The LCP is:
// <h2 class="author-post__title mt-5 text-5xl">…</h2>
// The time to render was 832.6999999880791 milliseconds.

Reporting First Contentful Paint

This is all about the time it takes for the very first piece of DOM to get painted on the screen. Faster is better, of course, but the way Lighthouse reports it, a “passing” score comes in between 0 and 1.8 seconds.

Just like we set the type property to largest-contentful-paint to fetch performance data in the last section, we’re going to set a different type this time around: paint.

When we observe the paint type, we tap into the PerformancePaintTiming interface that opens up reporting on first paint and first contentful paint.

// The Performance Observer
const paintObserver = new PerformanceObserver(list => {
  const entries = list.getEntries();
  entries.forEach(entry => {
    // Log the results in the console.
    console.log(
      `The time to ${entry.name} took ${entry.startTime} milliseconds.`,
    );
  });
});
// Call the Observer.
paintObserver.observe({ type: "paint", buffered: true });
// The time to first-paint took 509.29999999981374 milliseconds.
// The time to first-contentful-paint took 509.29999999981374 milliseconds.

Notice how paint spits out two results: one for the first-paint and the other for the first-contentful-paint. I know that a lot happens between the time a user navigates to a page and stuff starts painting, but I didn’t know there was a difference between these two metrics.

Here’s how the spec explains it:

“The primary difference between the two metrics is that [First Paint] marks the first time the browser renders anything for a given document. By contrast, [First Contentful Paint] marks the time when the browser renders the first bit of image or text content from the DOM.”

As it turns out, the first paint and FCP data I got back in that last example are identical. Since first paint can be anything that prevents a blank screen, e.g., a background color, I think that the identical results mean that whatever content is first painted to the screen just so happens to also be the first contentful paint.

But there’s apparently a lot more nuance to it, as Chrome measures FCP differently based on what version of the browser is in use. Google keeps a full record of the changelog for reference, so that’s something to keep in mind when evaluating results, especially if you find yourself with different results from others on your team.
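To tie the paint entries back to the 1.8-second passing mark mentioned earlier, here is a minimal sketch that picks the FCP entry out of a list and checks it against that threshold. The names FCP_PASSING_MS and summarizeFcp are hypothetical, and the function works on any array of objects shaped like paint entries (name and startTime).

```javascript
// Passing threshold in milliseconds, taken from the 0 to 1.8 seconds range above.
const FCP_PASSING_MS = 1800;

// Hypothetical helper: finds the first-contentful-paint entry, if any,
// and reports whether it falls inside the passing range.
function summarizeFcp(entries) {
  const fcp = entries.find((entry) => entry.name === "first-contentful-paint");
  if (!fcp) return null;
  return { ms: fcp.startTime, passing: fcp.startTime <= FCP_PASSING_MS };
}

console.log(
  summarizeFcp([
    { name: "first-paint", startTime: 500 },
    { name: "first-contentful-paint", startTime: 900 },
  ])
); // { ms: 900, passing: true }
```

Inside a paint observer callback, the same function could be called with list.getEntries().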

Reporting Cumulative Layout Shift

How much does the page shift around as elements are painted to it? Of course, we can get that from the Performance API! Instead of largest-contentful-paint or paint, now we’re turning to the layout-shift type.

This is where browser support is dicier than other performance metrics. The LayoutShift interface is still in “experimental” status at this time, with Chromium browsers being the sole group of supporters.

As it currently stands, LayoutShift opens up several pieces of information, including a value representing the amount of shifting, as well as the sources causing it to happen. More than that, we can tell if any user interactions took place that would affect the CLS value, such as zooming, changing browser size, or actions like keydown, pointerdown, and mousedown. This is the lastInputTime property, and there’s an accompanying hadRecentInput boolean that returns true if lastInputTime is less than 500ms before the layout shift.

Got all that? We can use this to both see how much shifting takes place during page load and identify the culprits while excluding any shifts that are the result of user interactions.

// Keep the running total outside the callback so it persists across batches.
let cumulativeLayoutShift = 0;
const observer = new PerformanceObserver((list) => {
  list.getEntries().forEach((entry) => {
    // Don't count if the layout shift is a result of user interaction.
    if (!entry.hadRecentInput) {
      cumulativeLayoutShift += entry.value;
    }
    console.log({ entry, cumulativeLayoutShift });
  });
});
// Call the Observer.
observer.observe({ type: "layout-shift", buffered: true });

Given the experimental nature of this one, here’s what an entry object looks like when we query it:

Pretty handy, right? Not only are we able to see how much shifting takes place (0.128) and which element is moving around (article.a.main), but we have the exact coordinates of the element’s box from where it starts to where it ends.
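One caveat worth knowing: a plain running total keeps growing for as long as the page stays open, whereas the CLS score that Chrome reports groups shifts into "session windows" (shifts less than one second apart, in a window capped at five seconds) and uses the largest window. Here is a minimal sketch of that grouping. The function name clsFromEntries is hypothetical, and it accepts any array of objects shaped like layout-shift entries, ordered by startTime.

```javascript
// Hypothetical helper: returns the largest session-window total for a list
// of layout-shift-like entries ({ startTime, value, hadRecentInput }).
function clsFromEntries(entries) {
  let best = 0;
  let windowValue = 0;
  let windowStart = 0;
  let lastShift = 0;
  let inWindow = false;

  for (const entry of entries) {
    // Shifts caused by recent user input don't count.
    if (entry.hadRecentInput) continue;

    const continuesWindow =
      inWindow &&
      entry.startTime - lastShift < 1000 &&
      entry.startTime - windowStart < 5000;

    if (continuesWindow) {
      windowValue += entry.value;
    } else {
      // Start a new session window with this shift.
      windowValue = entry.value;
      windowStart = entry.startTime;
      inWindow = true;
    }
    lastShift = entry.startTime;
    best = Math.max(best, windowValue);
  }
  return best;
}

console.log(
  clsFromEntries([
    { startTime: 100, value: 0.125, hadRecentInput: false },
    { startTime: 600, value: 0.125, hadRecentInput: false },
    { startTime: 3000, value: 0.5, hadRecentInput: false },
  ])
); // 0.5
```

The first two shifts form one window worth 0.25, and the third starts a new window because more than a second has passed, so the larger window wins.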

Reporting Interaction To Next Paint

This is the new kid on the block that got my mind wondering about the Performance API in the first place. It’s been possible for some time now to measure INP as it transitions to replace First Input Delay as a Core Web Vitals metric in March 2024. When we’re talking about INP, we’re talking about measuring the time between a user interacting with the page and the page responding to that interaction.

We need to hook into the PerformanceEventTiming interface for this one. And there’s so much we can dig into when it comes to user interactions. Think about it! There’s what type of event happened (entryType and name), when it happened (startTime), which interaction the event belongs to (interactionId, experimental), and when processing the interaction starts (processingStart) and ends (processingEnd). There’s also a way to exclude interactions whose events can be canceled (cancelable).

const observer = new PerformanceObserver((list) => {
  list.getEntries().forEach((entry) => {
    // Alias for the total duration.
    const duration = entry.duration;
    // Calculate the time before processing starts.
    const delay = entry.processingStart - entry.startTime;
    // Calculate the time to process the interaction.
    const lag = entry.processingEnd - entry.processingStart;
    // Don't count interactions whose events can be canceled.
    if (!entry.cancelable) {
      console.log(`INP Duration: ${duration}`);
      console.log(`INP Delay: ${delay}`);
      console.log(`Event handler duration: ${lag}`);
    }
  });
});
// Call the Observer.
observer.observe({ type: "event", buffered: true });
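The observer above logs every event, but INP is about the slowest interaction on the page. As a rough sketch of how the entries could be reduced to a single number, the function below groups entries by interactionId and returns the longest duration. The name worstInteraction is hypothetical, and this is a simplification: as far as I know, the real metric also discards outliers on pages with a very large number of interactions.

```javascript
// Hypothetical helper: approximates INP as the longest interaction seen so far.
// entries: objects shaped like event timing entries ({ interactionId, duration }).
function worstInteraction(entries) {
  const byInteraction = new Map();
  for (const entry of entries) {
    // An interactionId of 0 means the event isn't part of a user interaction.
    if (!entry.interactionId) continue;
    const previous = byInteraction.get(entry.interactionId) ?? 0;
    // One interaction can produce several events; keep the longest one.
    byInteraction.set(entry.interactionId, Math.max(previous, entry.duration));
  }
  if (byInteraction.size === 0) return null;
  return Math.max(...byInteraction.values());
}

console.log(
  worstInteraction([
    { interactionId: 0, duration: 500 },
    { interactionId: 7, duration: 120 },
    { interactionId: 7, duration: 160 },
    { interactionId: 9, duration: 90 },
  ])
); // 160
```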

Reporting Long Animation Frames (LoAFs)

Let’s build off that last one. We can now track INP scores on our website and break them down into specific components. But what code is actually running and causing those delays?

The Long Animation Frames API was developed to help answer that question. It won’t land in Chrome stable until mid-March 2024, but you can already use it in Chrome Canary.

A long-animation-frame entry is reported every time the browser couldn’t render page content immediately as it was busy with other processing tasks. We get an overall duration for the long frame but also a duration for different scripts involved in the processing.

const observer = new PerformanceObserver((list) => {
  list.getEntries().forEach((entry) => {
    if (entry.duration > 50) {
      // Log the overall duration of the long frame.
      console.log(`Frame took ${entry.duration} ms`);
      console.log("Contributing scripts:");
      // Log information on each script in a table.
      entry.scripts.forEach(script => {
        console.table({
          // URL of the script where the processing starts
          sourceURL: script.sourceURL,
          // Total time spent on this sub-task
          duration: script.duration,
          // Name of the handler function
          functionName: script.sourceFunctionName,
          // Why was the handler function called? For example,
          // a user interaction or a fetch response arriving.
          invoker: script.invoker
        });
      });
    }
  });
});
// Call the Observer.
observer.observe({ type: "long-animation-frame", buffered: true });

When an INP interaction takes place, we can find the closest long animation frame and investigate what processing delayed the page response.
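Finding "the closest long animation frame" can be sketched as a small search over time ranges. The function below is a hypothetical helper, not part of either API: it takes an event entry and a list of frame entries (each with startTime and duration) and returns the frame that overlaps the event, or the nearest one in time if none overlaps.

```javascript
// Hypothetical helper: picks the frame whose time range is closest to the event's.
function closestFrame(eventEntry, frames) {
  const eventEnd = eventEntry.startTime + eventEntry.duration;
  let best = null;
  let bestGap = Infinity;

  for (const frame of frames) {
    const frameEnd = frame.startTime + frame.duration;
    // The gap is 0 when the two ranges overlap; otherwise it is the distance
    // between the end of one range and the start of the other.
    const gap = Math.max(
      0,
      frame.startTime - eventEnd,
      eventEntry.startTime - frameEnd
    );
    if (gap < bestGap) {
      best = frame;
      bestGap = gap;
    }
  }
  return best;
}

const frames = [
  { startTime: 0, duration: 100 },
  { startTime: 950, duration: 300 },
  { startTime: 2000, duration: 80 },
];
console.log(closestFrame({ startTime: 1000, duration: 200 }, frames));
// { startTime: 950, duration: 300 }
```

From there, the scripts array of the returned frame is where to look for the code that delayed the response.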

There’s A Package For This

The Performance API is so big and so powerful. We could easily spend an entire bootcamp learning all of the interfaces and what they provide. There’s network timing, navigation timing, resource timing, and plenty of custom reporting features available on top of the Core Web Vitals we’ve looked at.

If CWVs are what you’re really after, then you might consider looking into the web-vitals library to wrap around the browser Performance APIs.

Need a CWV metric? All it takes is a single function.

webVitals.onINP(function(info) {
  console.log(info)
}, { reportAllChanges: true });

Boom! That reportAllChanges property? That’s a way of saying we want to report data every time the metric changes instead of only when the metric reaches its final value. For example, as long as the page is open, there’s always a chance that the user will encounter an even slower interaction than the current INP interaction. So, without reportAllChanges, we’d only see the INP reported when the page is closed (or when it’s hidden, e.g., if the user switches to a different browser tab).

We can also report purely on the difference between the preliminary results and the resulting changes. From the web-vitals docs:

import { onCLS, onINP, onLCP } from "web-vitals";

function logDelta({ name, id, delta }) {
  console.log(`${name} matching ID ${id} changed by ${delta}`);
}

onCLS(logDelta);
onINP(logDelta);
onLCP(logDelta);

Measuring Is Fun, But Monitoring Is Better

All we’ve done here is scratch the surface of the Performance API as far as programmatically reporting Core Web Vitals metrics. It’s fun to play with things like this. There’s even a slight feeling of power in being able to tap into this information on demand.

At the end of the day, though, you’re probably just as interested in monitoring performance as you are in measuring it. We could do a deep dive and detail what a performance dashboard powered by the Performance API is like, complete with historical records that indicate changes over time. That’s ultimately the sort of thing we can build on this — we can build our own real user monitoring (RUM) tool or perhaps compare Performance API values against historical data from the Chrome User Experience Report (CrUX).

Or perhaps you want a solution right now without stitching things together. That’s what you’ll get from a paid commercial service like DebugBear. All of this is already baked right in with all the metrics, historical data, and charts you need to gain insights into the overall performance of a site over time… and in real-time, monitoring real users.

DebugBear can help you identify why users are having slow experiences on any given page. If there is slow INP, what page elements are these users interacting with? What elements often shift around on the page and cause high CLS? Is the LCP typically an image, a heading, or something else? And does the type of LCP element impact the LCP score?

To help explain INP scores, DebugBear also supports the upcoming Long Animation Frames API we looked at, allowing you to see what code is responsible for interaction delays.

The Performance API can also report a list of all resource requests on a page. DebugBear uses this information to show a request waterfall chart that tells you not just when different resources are loaded but also whether the resources were render-blocking, loaded from the cache or whether an image resource is used for the LCP element.

In this screenshot, the blue line shows the FCP, and the red line shows the LCP. We can see that the LCP happens right after the LCP image request, marked by the blue “LCP” badge, has finished.

DebugBear offers a 14-day free trial. See how fast your website is, what’s slowing it down, and how you can improve your Core Web Vitals. You’ll also get monitoring alerts, so if there’s a web vitals regression, you’ll find out before it starts impacting Google search results.

Original Source: https://www.sitepoint.com/elevate-your-online-store-with-top-woocommerce-plugins/?utm_source=rss

Our streamlined guide compares crucial features, usability, and value-for-money to help you choose the plugins that fit your specific needs.

Continue reading Elevate Your Online Store with Top WooCommerce Plugins on SitePoint.