Original Source: https://www.sitepoint.com/build-a-simple-api-service-with-express-and-graphql/

This article was originally published on the Okta developer blog. Thank you for supporting the partners who make SitePoint possible.

GraphQL has become an immensely popular alternative to REST APIs. The flexibility you get from using GraphQL makes it easier for developers to get any information they need for an app, and just the information they need for that portion of the app. That gives you the feel of a very customized API and can help cut down on bandwidth.

In this tutorial, I’ll show you how to write a custom GraphQL API using Node and Express. I’ll also show you how to secure parts of the API while making other parts open to the public.

Create the GraphQL API with Express

To create the API, start by creating a new folder and creating a package.json file to manage your dependencies. You’ll also need to install a few dependencies to get GraphQL with Express up and running:

```bash
mkdir graphql-express
cd graphql-express
npm init -y
npm install express@4.16.3 express-graphql@0.6.12 graphql@14.0.2 graphql-tag@2.9.2 cors@2.8.4
```

Now create a file named index.js. This will be your main entry point:

```javascript
const express = require('express')
const cors = require('cors')
const graphqlHTTP = require('express-graphql')
const gql = require('graphql-tag')
const { buildASTSchema } = require('graphql')

const app = express()
app.use(cors())

// Parse the schema definition (SDL) into an executable schema
const schema = buildASTSchema(gql`
  type Query {
    hello: String
  }
`)

// One resolver per top-level Query field
const rootValue = {
  hello: () => 'Hello, world'
}

app.use('/graphql', graphqlHTTP({ schema, rootValue }))

const port = process.env.PORT || 4000
app.listen(port)
console.log(`Running a GraphQL API server at localhost:${port}/graphql`)
```

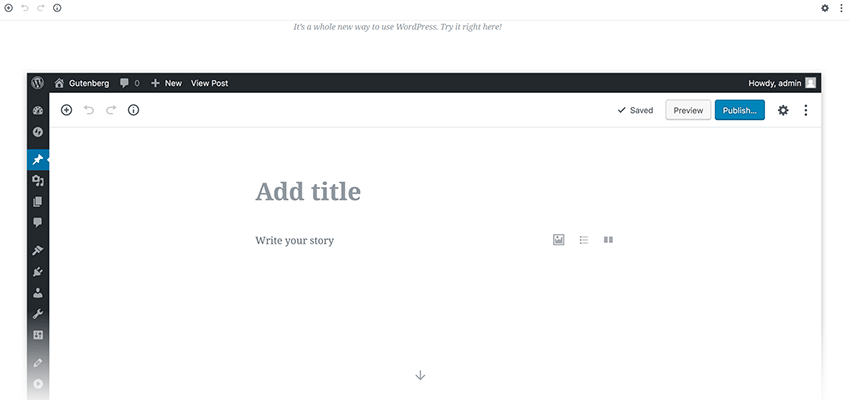

This is about as simple as a GraphQL server gets. All this does is return “Hello, world” when you query “hello”, but it’s a start. To take it for a test spin, run node ., then in another tab open your browser to the GraphQL Playground. Once there, enter http://localhost:4000/graphql to access your GraphQL server.

The GraphQL Playground helps you explore your schema and test out queries. It even automatically creates some documentation for you.

Try querying for hello using the following query:

```graphql
query {
  hello
}
```
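If you would rather test from a script than from the Playground, you can send the same query over HTTP. express-graphql accepts a POST request whose JSON body has a query field (and optionally variables), and it responds with a JSON object whose data field holds the result and whose errors array lists any problems. The runQuery helper below is a hypothetical sketch that assumes Node 18 or later for the built-in fetch; the fetchImpl parameter exists only so the helper can be exercised without a live server.

```javascript
// Hypothetical helper: POST a GraphQL query to an express-graphql endpoint.
// Assumes Node 18+, where fetch is available globally.
async function runQuery (url, query, variables = {}, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(url, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ query, variables })
  })
  const result = await response.json()
  // GraphQL reports query problems in an `errors` array next to `data`
  if (result.errors) {
    throw new Error(result.errors.map(error => error.message).join('\n'))
  }
  return result.data
}
```

With the server from index.js running, runQuery('http://localhost:4000/graphql', '{ hello }').then(data => console.log(data.hello)) should print Hello, world.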

Improve Your GraphQL Developer Experience

Here are a couple of quick tips to help make your development experience a little better:

1. Install a linter to catch bugs in your editor. A linter keeps your styling consistent and catches easily avoidable bugs.

To install StandardJS, type npm install --save-dev standard@12.0.1. Most editors will be able to show you warnings and errors as you type.

You can also edit the scripts object of your package.json so that you can run the linter at any time with npm test:

```json
"scripts": {
  "test": "standard"
},
```

2. Automatically restart the server when you make changes.

Install nodemon with npm install --save-dev nodemon@1.18.4.

Add another script to package.json, so you can run the server with npm start. Combined with the above, your scripts object should look like this:

```json
"scripts": {
  "test": "standard",
  "start": "nodemon ."
},
```

Go ahead and close the server you had run with node . and now type npm start to restart the development server. From now on, any changes you make will automatically restart the server.

Create the GraphQL Queries

To get something a little more useful, let’s make a post editor. GraphQL is strongly typed, allowing you to create a type for each object and connect them. A common scenario might be to have a post with some text that was written by a person. Update your schema to include these types. You can also update your Query type to use these new types.

```graphql
type Query {
  posts: [Post]
  post(id: ID): Post
  authors: [Person]
  author(id: ID): Person
}

type Post {
  id: ID
  author: Person
  body: String
}

type Person {
  id: ID
  posts: [Post]
  firstName: String
  lastName: String
}
```

Even though the resolvers aren’t set up, you can already go back to GraphQL Playground and refresh the schema by clicking the circular arrow icon next to the localhost URL.

The schema explorer is really useful for figuring out how to create your query. Click the green SCHEMA button to check out your new schema.

You’ll need some way to store the data. To keep it simple, use JavaScript’s Map object for in-memory storage. You can also create some classes that will help connect the data from one object to another.

```javascript
const PEOPLE = new Map()
const POSTS = new Map()

class Post {
  constructor (data) { Object.assign(this, data) }

  get author () {
    return PEOPLE.get(this.authorId)
  }
}

class Person {
  constructor (data) { Object.assign(this, data) }

  get posts () {
    return [...POSTS.values()].filter(post => post.authorId === this.id)
  }
}
```

Now if you have an instance of a Person, you can find all of their posts by simply asking for person.posts. Since GraphQL lets you only ask for the data you want, the posts getter will never get called unless you ask for it, which could speed up the query if that’s an expensive operation.
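You can see this laziness without GraphQL at all. The sketch below reuses the Post and Person pattern from above; the postsLookups counter is a hypothetical addition that exists only to show when the getter actually runs. Reading firstName never triggers the filter over every post, while reading posts does.

```javascript
// In-memory stores, as in the tutorial
const PEOPLE = new Map()
const POSTS = new Map()

// Hypothetical counter, only here to show when the getter runs
let postsLookups = 0

class Post {
  constructor (data) { Object.assign(this, data) }
  get author () { return PEOPLE.get(this.authorId) }
}

class Person {
  constructor (data) { Object.assign(this, data) }
  get posts () {
    postsLookups++ // runs only when `person.posts` is read
    return [...POSTS.values()].filter(post => post.authorId === this.id)
  }
}

PEOPLE.set('1', new Person({ id: '1', firstName: 'John', lastName: 'Doe' }))
PEOPLE.set('2', new Person({ id: '2', firstName: 'Jane', lastName: 'Doe' }))
POSTS.set('1', new Post({ id: '1', authorId: '1', body: 'Hello world' }))
POSTS.set('2', new Post({ id: '2', authorId: '2', body: 'Hi, planet!' }))

const john = PEOPLE.get('1')
console.log(john.firstName) // John
console.log(postsLookups) // 0
console.log(john.posts.map(post => post.body)) // [ 'Hello world' ]
console.log(postsLookups) // 1
```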

You’ll also need to update your resolvers (the functions in rootValue) in order to accommodate these new types.

```javascript
const rootValue = {
  posts: () => POSTS.values(),
  post: ({ id }) => POSTS.get(id),
  authors: () => PEOPLE.values(),
  author: ({ id }) => PEOPLE.get(id)
}
```
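Why do plain functions on rootValue work as resolvers, and why do getters like author and posts need no resolver of their own? graphql-js falls back to a default field resolver (exported as defaultFieldResolver) that looks up the field name on the parent object: if the property is a function, it calls it with the field arguments, context, and info; otherwise it returns the value as-is. The resolveField function below is a hypothetical, simplified re-creation of that rule, not a graphql-js export, so you can watch it work without a server.

```javascript
// Simplified sketch of graphql-js's default field resolution rule.
// `resolveField` is a hypothetical name; the real one is `defaultFieldResolver`.
function resolveField (source, fieldName, args, context, info) {
  if (source === null || (typeof source !== 'object' && typeof source !== 'function')) {
    return undefined
  }
  const property = source[fieldName] // getters such as `author` run here
  return typeof property === 'function'
    ? source[fieldName](args, context, info) // called as a method, receiving the args object
    : property
}

const POSTS = new Map([['1', { id: '1', authorId: '1', body: 'Hello world' }]])

const rootValue = {
  posts: () => POSTS.values(),
  post: ({ id }) => POSTS.get(id)
}

// Top-level field with an argument: the resolver receives { id: '1' }
const post = resolveField(rootValue, 'post', { id: '1' })
// Nested field: a plain property is returned unchanged
console.log(resolveField(post, 'body', {})) // Hello world
// List field: graphql-js accepts any iterable, so a Map iterator works
console.log(Array.from(resolveField(rootValue, 'posts', {})).length) // 1
```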

This is great, but there’s no data yet. For now, stub in some fake data. You can add this function and the call to it right after the assignment to rootValue.

```javascript
const initializeData = () => {
  const fakePeople = [
    { id: '1', firstName: 'John', lastName: 'Doe' },
    { id: '2', firstName: 'Jane', lastName: 'Doe' }
  ]
  fakePeople.forEach(person => PEOPLE.set(person.id, new Person(person)))

  const fakePosts = [
    { id: '1', authorId: '1', body: 'Hello world' },
    { id: '2', authorId: '2', body: 'Hi, planet!' }
  ]
  fakePosts.forEach(post => POSTS.set(post.id, new Post(post)))
}

initializeData()
```

Now that you have your queries all set up and some data stubbed in, go back to GraphQL Playground and play around a bit. Try getting all the posts, or get all the authors and posts associated with each one.

Or get weird and get a single post by id, then the author for that post, and all of that author’s posts (including the one you just queried).
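To see roughly what such a nested query resolves to, here is the same traversal written as plain JavaScript against the stubbed data, approximating post(id: "1") { body author { firstName posts { body } } }. Each nested selection just reads a property or getter on the previous result. The classes and data are copied from above; the exact fields selected are an illustrative assumption.

```javascript
const PEOPLE = new Map()
const POSTS = new Map()

class Post {
  constructor (data) { Object.assign(this, data) }
  get author () { return PEOPLE.get(this.authorId) }
}

class Person {
  constructor (data) { Object.assign(this, data) }
  get posts () {
    return [...POSTS.values()].filter(post => post.authorId === this.id)
  }
}

// Same stub data as initializeData()
const fakePeople = [
  { id: '1', firstName: 'John', lastName: 'Doe' },
  { id: '2', firstName: 'Jane', lastName: 'Doe' }
]
fakePeople.forEach(person => PEOPLE.set(person.id, new Person(person)))
const fakePosts = [
  { id: '1', authorId: '1', body: 'Hello world' },
  { id: '2', authorId: '2', body: 'Hi, planet!' }
]
fakePosts.forEach(post => POSTS.set(post.id, new Post(post)))

// Post -> author -> that author's posts (including the starting post)
const post = POSTS.get('1')
const result = {
  post: {
    body: post.body,
    author: {
      firstName: post.author.firstName,
      posts: post.author.posts.map(p => ({ body: p.body }))
    }
  }
}

console.log(JSON.stringify(result))
// {"post":{"body":"Hello world","author":{"firstName":"John","posts":[{"body":"Hello world"}]}}}
```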

Add User Authentication to Your Express + GraphQL API

One simple way to add authentication to your project is with Okta. Okta is a cloud service that allows developers to create, edit, and securely store user accounts and user account data, and connect them with one or multiple applications. If you don’t already have one, sign up for a forever-free developer account.

You’re going to need to save some information to use in the app. Create a new file named .env. In it, enter the URL your app runs on (HOST_URL) and your Okta organization URL.

```
HOST_URL=http://localhost:4000
OKTA_ORG_URL=https://{yourOktaOrgUrl}
```

You will also need a random string to use as an App Secret for sessions. You can generate this with the following command:

```bash
echo "APP_SECRET=`openssl rand -base64 32`" >> .env
```
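If openssl is not installed (common on Windows), Node’s built-in crypto module can produce a value in the same format: 32 random bytes, base64-encoded. This is a swapped-in alternative to the openssl command, not part of the original setup.

```javascript
const crypto = require('crypto')

// 32 cryptographically secure random bytes, base64-encoded,
// the same format as `openssl rand -base64 32`
const appSecret = crypto.randomBytes(32).toString('base64')

console.log(`APP_SECRET=${appSecret}`)
```

Saved as a file of your choosing (for example, a hypothetical gen-secret.js), running node gen-secret.js >> .env appends the line just like the openssl version.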

Next, log in to your developer console, navigate to Applications, then click Add Application. Select Web, then click Next.

The page you come to after creating an application has some more information you need to save to your .env file. Copy in the client ID and client secret.

```
OKTA_CLIENT_ID={yourClientId}
OKTA_CLIENT_SECRET={yourClientSecret}
```

The last piece of information you need from Okta is an API token. In your developer console, navigate to API -> Tokens, then click on Create Token. You can have many tokens, so just give this one a name that reminds you what it’s for, like “GraphQL Express”. You’ll be given a token that you can only see right now. If you lose the token, you’ll have to create another one. Add this to .env also.

```
OKTA_TOKEN={yourOktaAPIToken}
```

Create a new file named okta.js. This is where you’ll create some utility functions and initialize the app for Okta. Once a user has authenticated through Okta, your app identifies them by an access token in the form of a JSON Web Token (JWT). To avoid handling authentication directly in your app, users sign in on Okta’s servers and then send you a JWT that you can verify.
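A JWT is three base64url-encoded segments joined by dots: a header, a payload of claims (for example sub, the subject, and exp, the expiry time in seconds since the epoch), and a signature. The hypothetical decodeJwtPayload helper below only reads the payload so you can inspect a token. It does not check the signature, issuer, or expiry, which is exactly the job of the Okta verifier, so never use it to make authorization decisions. It assumes Node 16 or later for the base64url encoding.

```javascript
// Hypothetical helper: read (NOT verify) the claims inside a JWT.
// Requires Node 16+ for Buffer's 'base64url' encoding.
function decodeJwtPayload (token) {
  const parts = token.split('.')
  if (parts.length !== 3) {
    throw new Error('A JWT must have exactly three dot-separated parts')
  }
  const [, payload] = parts
  return JSON.parse(Buffer.from(payload, 'base64url').toString('utf8'))
}

// Build an unsigned example token so the sketch runs on its own
const encode = obj => Buffer.from(JSON.stringify(obj)).toString('base64url')
const exampleToken = [
  encode({ alg: 'RS256', typ: 'JWT' }),
  encode({ sub: 'example-subject', exp: 1700000000 }),
  'signature-not-checked'
].join('.')

const claims = decodeJwtPayload(exampleToken)
console.log(claims.sub) // example-subject
console.log(new Date(claims.exp * 1000).toISOString()) // 2023-11-14T22:13:20.000Z
```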

okta.js

```javascript
const session = require('express-session')

// Verifies access tokens (JWTs) issued by your Okta authorization server
const OktaJwtVerifier = require('@okta/jwt-verifier')

const verifier = new OktaJwtVerifier({
  clientId: process.env.OKTA_CLIENT_ID,
  issuer: `${process.env.OKTA_ORG_URL}/oauth2/default`
})

// Okta management client, authenticated with your API token
const { Client } = require('@okta/okta-sdk-nodejs')

const client = new Client({
  orgUrl: process.env.OKTA_ORG_URL,
  token: process.env.OKTA_TOKEN
})

// Handles the OpenID Connect login flow, including the callback route
const { ExpressOIDC } = require('@okta/oidc-middleware')

const oidc = new ExpressOIDC({
  issuer: `${process.env.OKTA_ORG_URL}/oauth2/default`,
  client_id: process.env.OKTA_CLIENT_ID,
  client_secret: process.env.OKTA_CLIENT_SECRET,
  redirect_uri: `${process.env.HOST_URL}/authorization-code/callback`,
  scope: 'openid profile'
})

const initializeApp = (app) => {
  // The OIDC middleware needs session support, registered before oidc.router
  app.use(session({
    secret: process.env.APP_SECRET,
    resave: true,
    saveUninitialized: false
  }))
  app.use(oidc.router)

  // Log in first if needed, then print the current access token
  app.use('/access-token', oidc.ensureAuthenticated(), async (req, res, next) => {
    res.send(req.userContext.tokens.access_token)
  })
}

module.exports = { client, verifier, initializeApp }
```

The initializeApp function adds middleware that lets you log in with Okta. Whenever you visit http://localhost:4000/access-token, it first checks that you’re logged in. If you aren’t, it sends you to Okta’s servers to authenticate. Once authentication succeeds, you are returned to the /access-token route, which prints your current access token. That token is valid for about an hour.

The client that you’re exporting allows you to run some administrative calls on your server. You’ll be using it later to get more information about a user based on their ID.

The verifier is what you use to verify that a JWT is valid, and it gives you some basic information about a user, like their user ID and email address.
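Before the verifier can check anything, your API has to pull the token out of the incoming request. A common convention, defined for OAuth 2.0 in RFC 6750, is an Authorization: Bearer <token> header. The hypothetical getBearerToken helper below extracts the token from such a header value. Passing the result to the verifier and handling a rejected token are left to your own code.

```javascript
// Hypothetical helper: extract the token from an `Authorization: Bearer <token>` header.
// Returns null when the header is missing or uses a different scheme.
function getBearerToken (authorizationHeader) {
  if (typeof authorizationHeader !== 'string') return null
  // The scheme name is case-insensitive; the token itself has no spaces
  const match = authorizationHeader.match(/^Bearer\s+(\S+)\s*$/i)
  return match ? match[1] : null
}

console.log(getBearerToken('Bearer abc.def.ghi')) // abc.def.ghi
console.log(getBearerToken('Basic dXNlcjpwYXNz')) // null
console.log(getBearerToken(undefined)) // null
```

In an Express handler you would call it as getBearerToken(req.headers.authorization), since Node lowercases incoming header names.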

Now, in index.js, you’ll need to import this file and call the initializeApp function. You also need to use a tool called dotenv that will read your .env file and add the variables to process.env. At the very top of the file, add the following line:

```javascript
require('dotenv').config({ path: '.env' })
```
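Conceptually, that line reads .env, splits each KEY=VALUE line, and copies the pairs into process.env, leaving any variable that is already set in the real environment untouched (dotenv’s documented default). The hypothetical loadEnv function below is a deliberately simplified sketch of that behavior; the real dotenv also handles quoted values and other edge cases, so keep using the package in the app.

```javascript
// Simplified, hypothetical sketch of what require('dotenv').config() does.
// Takes the file contents as a string so it runs without a real .env file.
function loadEnv (contents, env = process.env) {
  for (const line of contents.split(/\r?\n/)) {
    const trimmed = line.trim()
    if (!trimmed || trimmed.startsWith('#')) continue // skip blanks and comments
    const eq = trimmed.indexOf('=')
    if (eq === -1) continue // not a KEY=VALUE line
    const key = trimmed.slice(0, eq).trim()
    const value = trimmed.slice(eq + 1).trim() // only the first "=" splits
    // Like dotenv, never overwrite a variable that is already set
    if (!Object.prototype.hasOwnProperty.call(env, key)) {
      env[key] = value
    }
  }
  return env
}

const env = loadEnv([
  'HOST_URL=http://localhost:4000',
  'OKTA_ORG_URL=https://{yourOktaOrgUrl}',
  'APP_SECRET=abc123=='
].join('\n'), { HOST_URL: 'http://example.test' })

console.log(env.HOST_URL) // http://example.test
console.log(env.APP_SECRET) // abc123==
```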

Just after the app.use(cors()) line, add the following:

```javascript
const okta = require('./okta')
okta.initializeApp(app)
```

To make this all work, you’ll also need to install a few new dependencies:

```bash
npm i @okta/jwt-verifier@0.0.12 @okta/oidc-middleware@1.0.0 @okta/okta-sdk-nodejs@1.2.0 dotenv@6.0.0 express-session@1.15.6
```

You should now be able to go to http://localhost:4000/access-token to log in and get an access token. If you were just at your developer console, you’ll probably find you’re already logged in. You can log out of your developer console to ensure the flow works properly.

Create GraphQL Mutations

Now it’s time to use real data. There may be some real John and Jane Does out there, but chances are they don’t have an account on your application yet. Next, I’ll show you how to add some mutations that will use your current user to create, edit, or delete a post.

To generate IDs for a post, you can use uuid. Install it with npm install uuid@3.3.2, then add it to index.js with:

```javascript
const uuid = require('uuid/v4')
```

That should go near the top of the file, next to the other require statements.
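uuid/v4 generates random (version 4) UUIDs. On Node 14.17 and later, the built-in crypto.randomUUID() produces the same kind of ID without an extra dependency; that is a swapped-in alternative, not what this tutorial installs. The sketch below uses it to add a post to an in-memory Map in the way a create mutation might. createPost is a hypothetical name, not the mutation this tutorial defines, and it stores plain objects rather than Post instances for brevity.

```javascript
const crypto = require('crypto')

const POSTS = new Map()

// Hypothetical helper: store a new post under a freshly generated v4 UUID.
// crypto.randomUUID() needs Node 14.17+; uuid/v4 from the tutorial fills the same role.
function createPost ({ authorId, body }) {
  const id = crypto.randomUUID()
  const post = { id, authorId, body }
  POSTS.set(id, post)
  return post
}

const post = createPost({ authorId: '1', body: 'My first real post' })
console.log(post.id) // a random v4 UUID, different on every run
console.log(POSTS.get(post.id).body) // My first real post
```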

While still in index.js, add the following types to your schema:

The post Build a Simple API Service with Express and GraphQL appeared first on SitePoint.

